Learning to create a simple custom ReLU activation function using lambda layers in TensorFlow 2

Previously, we saw how to create custom loss functions in "Creating custom Loss functions using TensorFlow 2".

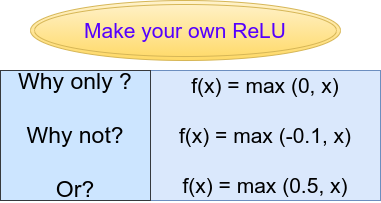

Customizing the ReLU function (Source: Image created by author)

Introduction

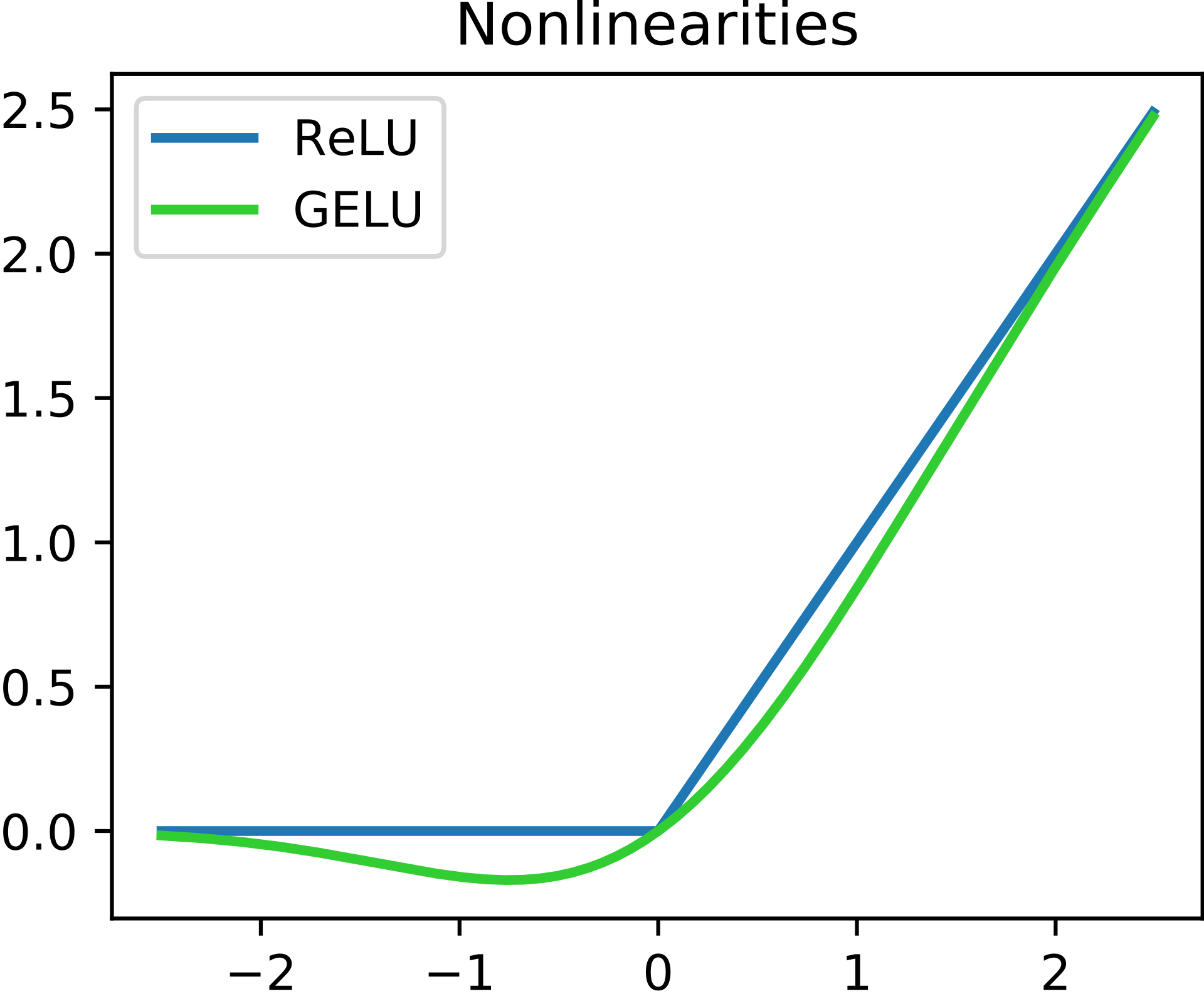

In this article, we look at how to create custom activation functions. TensorFlow already ships with many built-in activation functions, but you can also define your own or modify an existing one.

ReLU (Rectified Linear Unit) is still one of the most commonly used activation functions in the hidden layers of neural networks. It can be written as a function f(x), where

f(x) = 0, when x < 0,

and f(x) = x, when x ≥ 0.

Thus the function keeps only the positive part of its input, and can be written compactly as

f(x) = max(0, x)

or, in code,

    def relu(x):
        # Pass positive inputs through unchanged; everything else becomes 0.
        if x > 0:
            return x
        else:
            return 0
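In practice an activation is applied element-wise to many values rather than to a single number. As a minimal sketch in plain Python (the helper names `relu_piecewise` and `relu_max` and the sample inputs are illustrative assumptions, not part of TensorFlow), the piecewise form and the max form can be checked against each other:

```python
def relu_piecewise(x):
    """Piecewise form: 0 for negative inputs, x otherwise."""
    return x if x >= 0 else 0.0


def relu_max(x):
    """Compact form: max(0, x)."""
    return max(0.0, x)


# Hypothetical sample inputs covering negative, zero, and positive values.
samples = [-2.0, -0.5, 0.0, 0.5, 3.0]

# Apply each form element-wise to the whole list.
piecewise_out = [relu_piecewise(v) for v in samples]
max_out = [relu_max(v) for v in samples]

print(piecewise_out)  # [0.0, 0.0, 0.0, 0.5, 3.0]
print(max_out)        # [0.0, 0.0, 0.0, 0.5, 3.0]
```

Both forms give the same result for every input, which is why the shorter max form is the one usually carried over into tensor code.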

But this ReLU function is predefined. What if we want to customize it or create our own ReLU activation? There is a very simple way to do this in TensorFlow: we just have to use Lambda layers.
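As a rough sketch of what this can look like, the example below wraps a hand-written ReLU in `tf.keras.layers.Lambda` and places it after a `Dense` layer that has no activation of its own. The function names `my_relu` and `my_shifted_relu`, the `floor` parameter, the layer sizes, and the sample values are assumptions made for illustration; the shifted variant is just one possible customization, not a standard activation.

```python
import tensorflow as tf


def my_relu(x):
    # Same behaviour as the standard ReLU: max(0, x), applied element-wise.
    return tf.maximum(0.0, x)


def my_shifted_relu(x, floor=-0.5):
    # Hypothetical customization: clamp at `floor` instead of 0.
    return tf.maximum(floor, x)


# A small model where the activation is its own Lambda layer.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8),          # linear layer, no built-in activation
    tf.keras.layers.Lambda(my_relu),   # custom ReLU applied to the Dense output
    tf.keras.layers.Dense(1),
])

# Apply both activations directly to a sample tensor to compare them.
sample = tf.constant([[-2.0, -0.25, 0.0, 3.0]])
relu_out = tf.keras.layers.Lambda(my_relu)(sample).numpy().tolist()
shifted_out = tf.keras.layers.Lambda(my_shifted_relu)(sample).numpy().tolist()

print(relu_out)
print(shifted_out)
```

With `my_relu`, every negative entry is clamped to zero. With `my_shifted_relu`, values below `floor` are clamped to `floor`, while values between `floor` and zero pass through unchanged. Named functions are used here rather than inline `lambda` expressions, since they tend to be easier to reuse and to save with the model.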
