In this article, I’ll explain how Go programs achieve extremely high concurrent performance and introduce the architecture of Go’s internal scheduler (the G-P-M model). I’ll also explain how Go makes full use of computing resources and how the Go scheduler deals with threads, step by step:

- Introduce concurrency and the concepts used in the rest of the article

- Introduce Go’s GPM model

- Walk through cases showing how the Go scheduler deals with multiple threads

Introduce Concurrency

With the rapid development of information technology, the processing power of a single server keeps growing, which has pushed programming models to move from serial to concurrent execution.

Concurrency models include I/O multiplexing, multi-processing, and multi-threading, each with its own advantages and disadvantages. Most modern high-concurrency architectures combine several of them, using each model in the scenarios where it is strongest and avoiding it where it is weak, to achieve maximum performance.

Because it is lightweight and easy to use, multi-threading has become the most frequently used model in concurrent programming, and it has given rise to later derivatives such as coroutines.

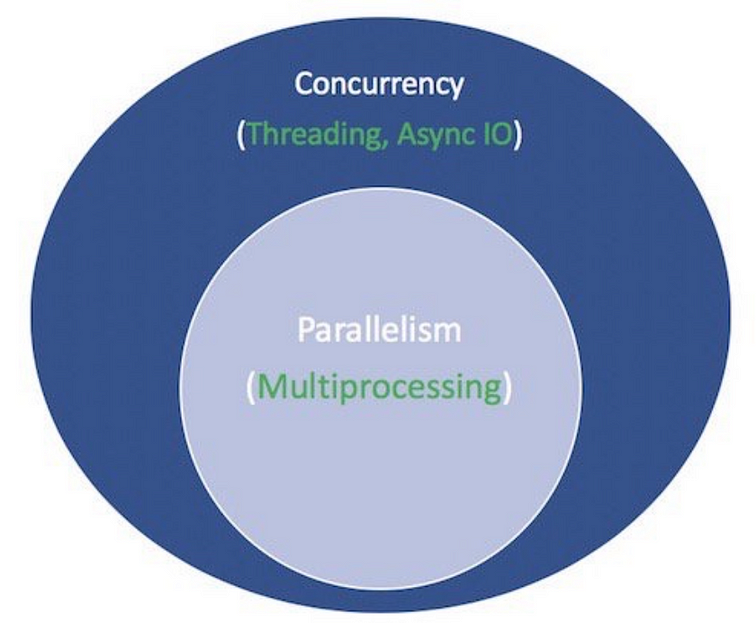

Concurrency ≠ Parallelism

On a single CPU core, threads take turns, either because their time slice expires or because they voluntarily give up control, so that multiple tasks appear to run “simultaneously.” This is concurrency. In fact, only one task executes at any given moment while the others wait in a queue.

A multi-core CPU allows multiple threads in the same process to truly run at the same time, which is parallelism.
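The distinction can be observed from a Go program. The following is a minimal sketch, not taken from the original article: `runTasks` is a hypothetical helper, and `runtime.GOMAXPROCS` is used to limit how many OS threads may execute Go code at the same time. With a limit of 1 the goroutines are concurrent but never parallel. With the default limit they may run in parallel on several cores.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// runTasks (hypothetical helper) starts n goroutines, each of which
// computes the square of its index, and waits for all of them.
func runTasks(n int) []int {
	results := make([]int, n)
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			results[id] = id * id // each goroutine writes only its own slot
		}(i)
	}
	wg.Wait()
	return results
}

func main() {
	// Only one thread may execute Go code at a time:
	// the goroutines are interleaved (concurrency without parallelism).
	prev := runtime.GOMAXPROCS(1)
	fmt.Println("limit 1:", runTasks(4))

	// Restore the previous limit: goroutines may now run in parallel.
	runtime.GOMAXPROCS(prev)
	fmt.Println("cores:", runtime.NumCPU(), "result:", runTasks(4))
}
```

The results are identical in both runs. Only the way the work is mapped onto cores changes, which is why concurrency is a property of program structure and parallelism a property of execution.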

Process, Thread, Coroutine

- Process: A process is the basic unit of resource allocation by the operating system, and it has its own independent memory space.

- Thread: A thread is the basic unit of CPU scheduling and dispatch. Threads exist within a process, and all threads share the resources of the process they belong to.

- Coroutine: A coroutine is a lightweight thread in user mode. Its scheduling is controlled entirely by the user program. Switching between coroutines only requires saving the task’s context, without the overhead of entering the kernel.
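To make the idea of yielding in user mode concrete, here is a small sketch using goroutines. It is an illustration only: `worker` and `interleave` are hypothetical names, and goroutines are scheduled by the Go runtime (which can also preempt them) rather than purely by the programmer. `runtime.Gosched` is the standard call for voluntarily giving up the processor so that another goroutine can run.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// worker records its name `steps` times and yields after every step.
func worker(name string, steps int, mu *sync.Mutex, log *[]string, wg *sync.WaitGroup) {
	defer wg.Done()
	for i := 0; i < steps; i++ {
		mu.Lock()
		*log = append(*log, name)
		mu.Unlock()
		runtime.Gosched() // voluntarily let another runnable goroutine run
	}
}

// interleave runs two workers and returns the order in which they ran.
func interleave(steps int) []string {
	var (
		mu  sync.Mutex
		log []string
		wg  sync.WaitGroup
	)
	wg.Add(2)
	go worker("A", steps, &mu, &log, &wg)
	go worker("B", steps, &mu, &log, &wg)
	wg.Wait()
	return log
}

func main() {
	// The exact order depends on the scheduler and is not guaranteed.
	fmt.Println(interleave(3))
}
```

Both workers live in the same process and share the `log` slice, which is why access to it is protected by a mutex.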

Thread Context Switch

Interrupt handling, multitasking, switches between user mode and kernel mode, and other events cause the CPU to switch from one thread to another. The switch has to save the state of the current thread and restore the state of the next one.

Context switching is expensive because swapping threads on a core takes time. The latency depends on many factors, but is probably somewhere between 50 and 100 nanoseconds. Given that the hardware executes an average of 12 instructions per nanosecond on each core, a context switch costs about 600 to 1,200 instructions’ worth of latency. That is time the program could otherwise have spent executing its own instructions.

A cross-core context switch is even more expensive, because the thread’s cached data is no longer available on the new core and must be reloaded. Accessing data from the cache costs about 3 to 40 clock cycles, while accessing it from main memory costs about 100 to 300 clock cycles.
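The arithmetic behind that estimate is simply latency multiplied by instruction throughput. A minimal sketch, using the figures quoted above as inputs (`instructionsLost` is a hypothetical helper name):

```go
package main

import "fmt"

// instructionsLost estimates how many instructions a core could have
// executed during a context switch of the given duration.
func instructionsLost(switchNanos, instrPerNano int) int {
	return switchNanos * instrPerNano
}

func main() {
	const instrPerNano = 12 // average throughput per core, as assumed in the text
	for _, ns := range []int{50, 100} {
		fmt.Printf("%d ns switch = about %d instructions\n",
			ns, instructionsLost(ns, instrPerNano))
	}
}
```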

Go is born for concurrency

Since its public release in 2009, Go (Golang) has quickly gained market share thanks to its fast execution and efficient development experience. Go supports concurrency at the language level and uses lightweight coroutines, called goroutines, to implement concurrent programs.

Goroutines are very lightweight, mainly in the following two respects:

- Low context-switching cost: a goroutine context switch only modifies the values of three registers (PC / SP / DX), whereas a thread context switch involves a mode switch (from user mode to kernel mode) and refreshing 16 registers as well as PC, SP, and other registers.

- Low memory usage: a thread’s stack is usually 2 MB, while a goroutine’s stack starts at 2 KB.
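One rough way to get a feel for the switching cost is to bounce a token between two goroutines over unbuffered channels and time the round trips. This is only a sketch: `pingPong` is a hypothetical helper, the measurement also includes channel overhead, and the result varies by machine and Go version, so treat the number as an order-of-magnitude indication rather than a benchmark.

```go
package main

import (
	"fmt"
	"time"
)

// pingPong passes a token to a helper goroutine and back `rounds` times.
// Each round trip hands control to the other goroutine and back again.
func pingPong(rounds int) time.Duration {
	ping, pong := make(chan struct{}), make(chan struct{})
	go func() {
		for range ping {
			pong <- struct{}{}
		}
		close(pong) // signal that the helper has finished
	}()

	start := time.Now()
	for i := 0; i < rounds; i++ {
		ping <- struct{}{}
		<-pong
	}
	elapsed := time.Since(start)

	close(ping)
	<-pong // wait for the helper goroutine to exit
	return elapsed
}

func main() {
	const rounds = 100000
	elapsed := pingPong(rounds)
	fmt.Printf("%d round trips in %v (%v per round trip)\n",
		rounds, elapsed, elapsed/time.Duration(rounds))
}
```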

Go programs can easily run a six-figure number of concurrent goroutines, whereas with threads the memory consumption already reaches about 2 GB once the thread count hits 1,000 (at 2 MB of stack each).
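The claim is easy to try out. The sketch below starts 100,000 goroutines that simply block, then reports how many are alive and how much the stack memory in use grew, using `runtime.NumGoroutine` and `runtime.ReadMemStats`. `spawnParked` is a hypothetical helper, and the measured figure depends on the Go version and platform.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// spawnParked starts n goroutines that block until released. It returns
// the number of goroutines alive at that point and the growth of stack
// memory in use, in bytes.
func spawnParked(n int) (alive int, stackGrowth int64) {
	var before, after runtime.MemStats
	runtime.ReadMemStats(&before)

	release := make(chan struct{})
	var started, done sync.WaitGroup
	started.Add(n)
	done.Add(n)
	for i := 0; i < n; i++ {
		go func() {
			defer done.Done()
			started.Done()
			<-release // park here until the channel is closed
		}()
	}

	started.Wait() // every goroutine is now running or parked
	alive = runtime.NumGoroutine()
	runtime.ReadMemStats(&after)

	close(release)
	done.Wait()
	return alive, int64(after.StackInuse) - int64(before.StackInuse)
}

func main() {
	const n = 100000
	alive, growth := spawnParked(n)
	fmt.Printf("goroutines alive: %d, stack in use grew by about %d MB\n",
		alive, growth/(1024*1024))

	// For comparison, the thread arithmetic from the text:
	fmt.Printf("1000 threads x 2 MB = %d MB\n", 1000*2)
}
```

At the 2 KB starting size, 100,000 goroutines need roughly 200 MB of stack, about a tenth of what 1,000 threads reserve at 2 MB each.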
