A fascinating thing about neural networks, especially convolutional neural networks (ConvNets), is that in the final, fully connected layers of the network, each layer can be treated as a vector space. The useful information that the ConvNet has extracted from the image is stored in a **compact form** in the final layer as a feature vector. When this final layer is treated as a vector space and subjected to vector algebra, it yields some very interesting and useful properties.

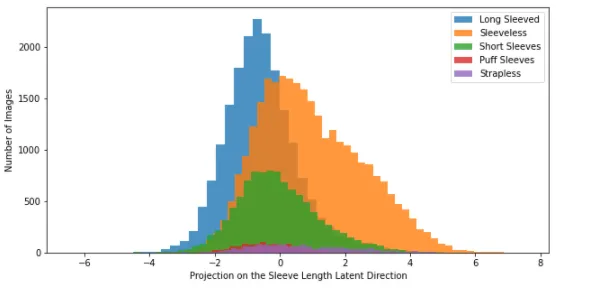

I trained a ResNet50 classifier on the iMaterialist Challenge (Fashion) dataset from Kaggle. The challenge is to label every apparel image with the appropriate attributes, such as pattern, neckline, sleeve length, and style. I took the pre-trained ResNet model and applied transfer learning on this dataset, with added layers for each of the labels. Once the model was trained, I wanted to look at the vector space of the last layer and search for patterns.

Latent Space Directions

The last FC layer of ResNet50 has length 1000. After transfer learning on the Fashion dataset, I apply PCA to the outputs of this layer and reduce the dimension to 50, which retains more than 98% of the variance.
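As a rough illustration of this reduction step, here is a minimal sketch of PCA written with NumPy's SVD. It is not the code used for this post: the `pca_reduce` helper is hypothetical, and the `features` matrix is synthetic random data standing in for the 1000-dimensional layer outputs, so the retained variance it prints says nothing about the Fashion dataset.

```python
import numpy as np

def pca_reduce(features, n_components=50):
    """Project row vectors onto their top principal components.

    Hypothetical helper for illustration; returns the reduced vectors,
    the principal directions, the mean, and the retained variance ratio.
    """
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are the principal directions, sorted by singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    explained = singular_values**2 / np.sum(singular_values**2)
    components = vt[:n_components]
    reduced = centered @ components.T
    return reduced, components, mean, explained[:n_components].sum()

# Synthetic stand-in (assumption): 500 vectors of length 1000 that
# really live near a 20-dimensional subspace, plus a little noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 20))
mixing = rng.normal(size=(20, 1000))
features = latent @ mixing + 0.01 * rng.normal(size=(500, 1000))

reduced, components, mean, retained = pca_reduce(features, n_components=50)
print(reduced.shape)   # shape of the reduced vectors
print(retained)        # fraction of variance kept by the 50 components
```

In practice a library implementation such as scikit-learn's `PCA` would do the same job; the SVD version is shown only because it makes the variance bookkeeping explicit.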

The concept of latent space directions is popular with GANs and variational autoencoders, where the input is a vector and the output is an image. It has been observed that translating the input vector in a certain direction changes a certain attribute of the output image. The example below is from StyleGAN, trained by NVIDIA. You can have a look at this website, where a fake face is generated from a random vector every time you refresh the page.
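To make the idea of translating a vector along a direction concrete, here is a small sketch using only vector arithmetic. It assumes one simple way of estimating a direction, the difference between the mean vector of samples that have an attribute and the mean of those that do not. That is an illustrative choice, not necessarily how StyleGAN directions are found, and the function names and synthetic data are hypothetical.

```python
import numpy as np

def attribute_direction(vectors, has_attribute):
    """Unit vector pointing from the 'without' mean to the 'with' mean."""
    vectors = np.asarray(vectors, dtype=float)
    mask = np.asarray(has_attribute, dtype=bool)
    direction = vectors[mask].mean(axis=0) - vectors[~mask].mean(axis=0)
    return direction / np.linalg.norm(direction)

def translate(vector, direction, step):
    """Move a vector by `step` units along a unit direction."""
    return np.asarray(vector, dtype=float) + step * direction

# Synthetic stand-in (assumption): 50-dim vectors where the attribute
# shifts samples along one hidden axis, here coordinate 3.
rng = np.random.default_rng(0)
true_direction = np.zeros(50)
true_direction[3] = 1.0
labels = rng.random(400) < 0.5
vectors = rng.normal(size=(400, 50)) + 4.0 * labels[:, None] * true_direction

direction = attribute_direction(vectors, labels)
moved = translate(vectors[0], direction, step=2.0)
print(direction @ true_direction)  # cosine similarity with the hidden axis
```

With a generative model, `moved` would be fed back through the generator to see how the output image changes; with a classifier's feature space, it can instead be compared against the feature vectors of other images.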
