Keras provides a set of deep learning models along with weights pre-trained on the ImageNet dataset. These models can be used for prediction, feature extraction, and fine-tuning. Here I’m going to discuss how to extract features and visualize filters and feature maps for the pretrained models VGG16 and VGG19, given an input image.

Extract Features with VGG16

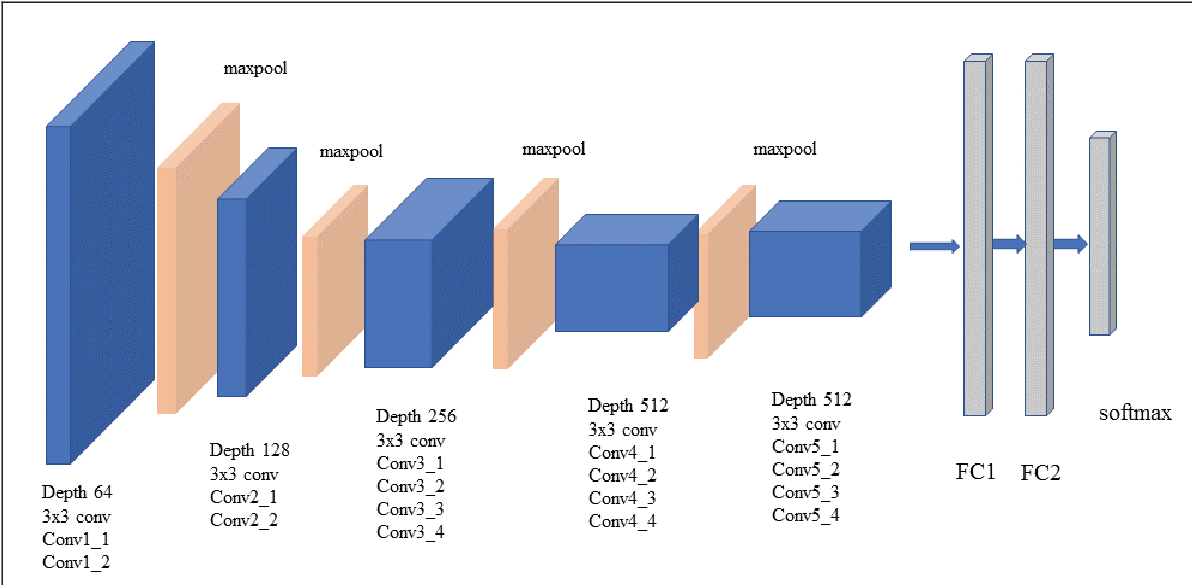

Here we first import the VGG16 model from tensorflow.keras. The image module is imported to load and preprocess the image, and the preprocess_input function is imported to scale pixel values appropriately for the VGG16 model. The numpy module is imported for array processing. Then the VGG16 model is loaded with the weights pretrained on the ImageNet dataset. The VGG16 model is a stack of 13 convolutional layers, interleaved with max pooling layers, followed by three dense (fully connected) layers. The include_top argument selects whether the final dense layers are included; include_top=False excludes them when loading the model. Everything from the input layer to the last max pooling layer (labeled 7 x 7 x 512) is regarded as the **feature extraction part** of the model, while the rest of the network is regarded as the **classification part**.

After defining the model, we need to load the input image at the size expected by the model, in this case 224×224. Next, the PIL image object needs to be converted to a NumPy array of pixel data and expanded from a 3D array to a 4D array with the dimensions [samples, rows, cols, channels], where we have only one sample. The pixel values then need to be scaled appropriately for the VGG model (for VGG16, preprocess_input converts the image from RGB to BGR and subtracts the ImageNet channel means). We are now ready to get the features.
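The steps described above can be sketched roughly as follows. This is a minimal sketch and not necessarily the exact code used for this post: the function name extract_vgg16_features, its weights parameter, and any image file name passed to it are assumptions made for illustration.

```python
import numpy as np
from tensorflow.keras.applications.vgg16 import VGG16, preprocess_input
from tensorflow.keras.preprocessing import image


def extract_vgg16_features(img_path, weights="imagenet"):
    """Return the output of VGG16's last max pooling layer for one image.

    The function name and the `weights` parameter are illustrative
    assumptions; `weights="imagenet"` downloads the pretrained weights
    on first use.
    """
    # include_top=False drops the dense classification layers, so the
    # model ends at the last max pooling layer.
    model = VGG16(weights=weights, include_top=False)

    # Load the image at the 224x224 size expected by VGG16.
    img = image.load_img(img_path, target_size=(224, 224))

    # PIL image -> NumPy array of shape (224, 224, 3).
    x = image.img_to_array(img)

    # Add the sample axis: (224, 224, 3) -> (1, 224, 224, 3).
    x = np.expand_dims(x, axis=0)

    # Scale the pixel values the way VGG16 expects.
    x = preprocess_input(x)

    # Run the image through the convolutional part of the network.
    return model.predict(x)
```

Calling extract_vgg16_features("elephant.jpg"), where the file name is only a placeholder, returns an array of shape (1, 7, 7, 512) for a 224×224 input, which matches the last max pooling layer.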

Extract Features from an Arbitrary Intermediate Layer with VGG16

Here, too, we first import the VGG16 model from tensorflow.keras. The image module is imported to load and preprocess the image, and the preprocess_input function is imported to scale pixel values appropriately for the VGG16 model. The numpy module is imported for array processing. In addition, the Model class is imported to define a new model that uses a subset of the layers in the full VGG16 model. The VGG16 model is then loaded with the weights pretrained on the ImageNet dataset. The new model has the same input layer as the original model, but its output is the output of a chosen layer, that is, the activations of that layer, also called its feature map. For example, after loading the VGG16 model, we can define a new model that outputs the feature map from the block4 pooling layer.

After defining the model, we need to load the input image at the size expected by the model, in this case 224×224. Next, the PIL image object needs to be converted to a NumPy array of pixel data and expanded from a 3D array to a 4D array with the dimensions [samples, rows, cols, channels], where we have only one sample. The pixel values then need to be scaled appropriately for the VGG model. We are now ready to get the features.

For example, here we extract the features of the block4_pool layer.
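A minimal sketch of this intermediate-layer extraction might look like the following. The function name extract_layer_features and its layer_name and weights parameters are assumptions made for illustration; only the layer name block4_pool comes from the VGG16 model itself.

```python
import numpy as np
from tensorflow.keras.applications.vgg16 import VGG16, preprocess_input
from tensorflow.keras.models import Model
from tensorflow.keras.preprocessing import image


def extract_layer_features(img_path, layer_name="block4_pool", weights="imagenet"):
    """Return the feature map of one named VGG16 layer for one image.

    The function name and parameters are illustrative assumptions.
    """
    # Load the full VGG16 model, including the dense layers.
    base_model = VGG16(weights=weights)

    # New model: same input as VGG16, output taken from the chosen layer.
    model = Model(
        inputs=base_model.input,
        outputs=base_model.get_layer(layer_name).output,
    )

    # Same preprocessing as before: load, convert, add sample axis, scale.
    img = image.load_img(img_path, target_size=(224, 224))
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)
    x = preprocess_input(x)

    # The prediction of the truncated model is the layer's feature map.
    return model.predict(x)
```

With the default layer_name of block4_pool, the returned feature map has shape (1, 14, 14, 512). Passing another layer name from the VGG16 model, such as block3_pool, returns that layer's activations instead.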
