It’s completely fine if you feel confused between **“Standardization”** and **“Normalization”**. A few months ago, I was in the same position, so I completely understand this confusion (and sometimes frustration too), because there was no good, easy resource that explained the topic.

But there is no need to worry: this blog will not only clear up your doubts about these two topics but also cover their use cases, i.e., when to use which.

Important Prior Knowledge!

Before explaining the difference between **“Standardization”** & **“Normalization”**, let me build some context.

Standardization & Normalization are both part of Feature Engineering, which in turn is a part of Data Science.

Feature Engineering means applying your engineering mindset & skills to optimize the features so that a model can be trained on them effectively & easily.

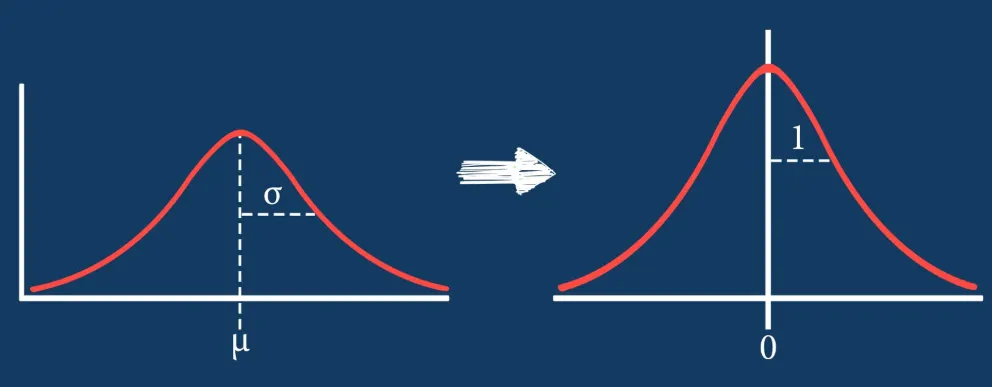

Standardization & Normalization are both used for **Feature Scaling** (bringing features onto a common, comparable scale instead of leaving them spread across large and very different ranges, which makes it harder for many models to learn from them). However, they differ in how they work, and each should be used in specific use cases (discussed later in this blog).
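To make the difference concrete, here is a minimal sketch in plain Python. It assumes the two most common definitions: standardization as the z-score, (x - mean) / standard deviation, and normalization as min-max scaling, (x - min) / (max - min), which maps values into [0, 1]. The function names and the salary values are hypothetical, chosen only for illustration.

```python
from statistics import fmean, pstdev

def standardize(values):
    """Z-score standardization: (x - mean) / std, giving mean 0 and std 1."""
    mu = fmean(values)
    sigma = pstdev(values)  # population standard deviation (ddof=0)
    if sigma == 0:
        # Constant feature: every value equals the mean, so all z-scores are 0.
        return [0.0 for _ in values]
    return [(x - mu) / sigma for x in values]

def min_max_normalize(values):
    """Min-max normalization: (x - min) / (max - min), giving values in [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant feature: avoid dividing by zero.
        return [0.0 for _ in values]
    return [(x - lo) / (hi - lo) for x in values]

# Hypothetical feature whose raw values span a large numeric range.
salaries = [30_000, 45_000, 60_000, 120_000]

print(standardize(salaries))        # centered around 0, unit spread, no fixed bounds
print(min_max_normalize(salaries))  # smallest value -> 0.0, largest -> 1.0
```

Both functions keep the ordering of the values; what differs is the resulting scale. Standardization centers the data around 0 with a standard deviation of 1 but has no fixed bounds, while min-max normalization squeezes everything into [0, 1]. The population standard deviation is used here, which matches what scikit-learn's `StandardScaler` does.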

This is enough context before explaining the topics. Now, let’s jump directly into the main topics.

#data-science #normalization #machine-learning #deep-learning #feature-engineering