Naive Bayes is a trivial machine learning algorithm. It is an eager learner, which means that it takes more time for training than testing. This algorithm works on the assumption that all the features are independent of each other.

It is based on the Bayes theorem. It is easy to build. It is a collection of models and not just a single model.

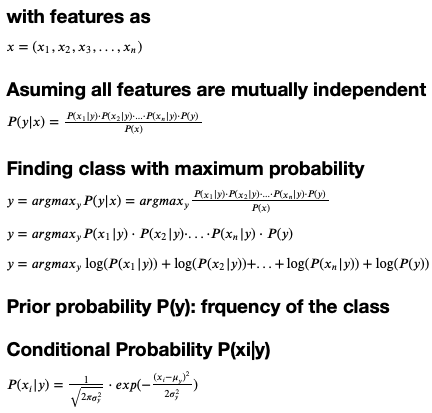

Bayes Theorem

Here,

P(A|B): posterior probability of class given predictor

P(A): prior probability of the class

P(B|A): likelihood which is the probability of predictor given the class

P(B): Prior probability of the predictor

Naive Bayes theorem are of three types, Gaussian, Multinomial, and Benoulli. We would be developing the Gaussian Naive Bayes theorem from scratch. In Gaussian Naive Bayes, the distribution of features are assumed to be Gaussian or normal. When plotted it gives a bell shaped curve which is symmetric about the mean of the feature values.

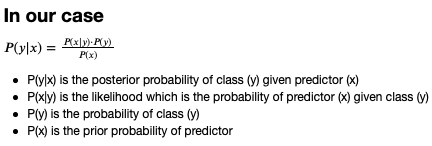

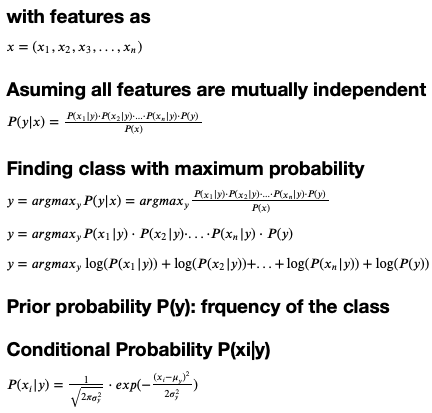

Starting with the theory

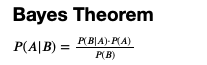

Bayes Theorem for our case

After assuming the features to be mutually independent, we can multiply these probabilities with the prior probability, directly. To get the class of the datapoint, we find the maximum probability of the target classes, i.e.if class A has the highest probability then the datapoint belongs to the class A. Since the prior probability is neglected then only the numerator is important. As the conditional probability gives us very small values, therefore we apply log to the entire numerator, making it the summation of the conditional probabilities. These conditional probabilities are found out using the Gaussian Function.

#data-preprocessing #naive-bayes-classifier #python #machine-learning #data-science