What is LSTM?

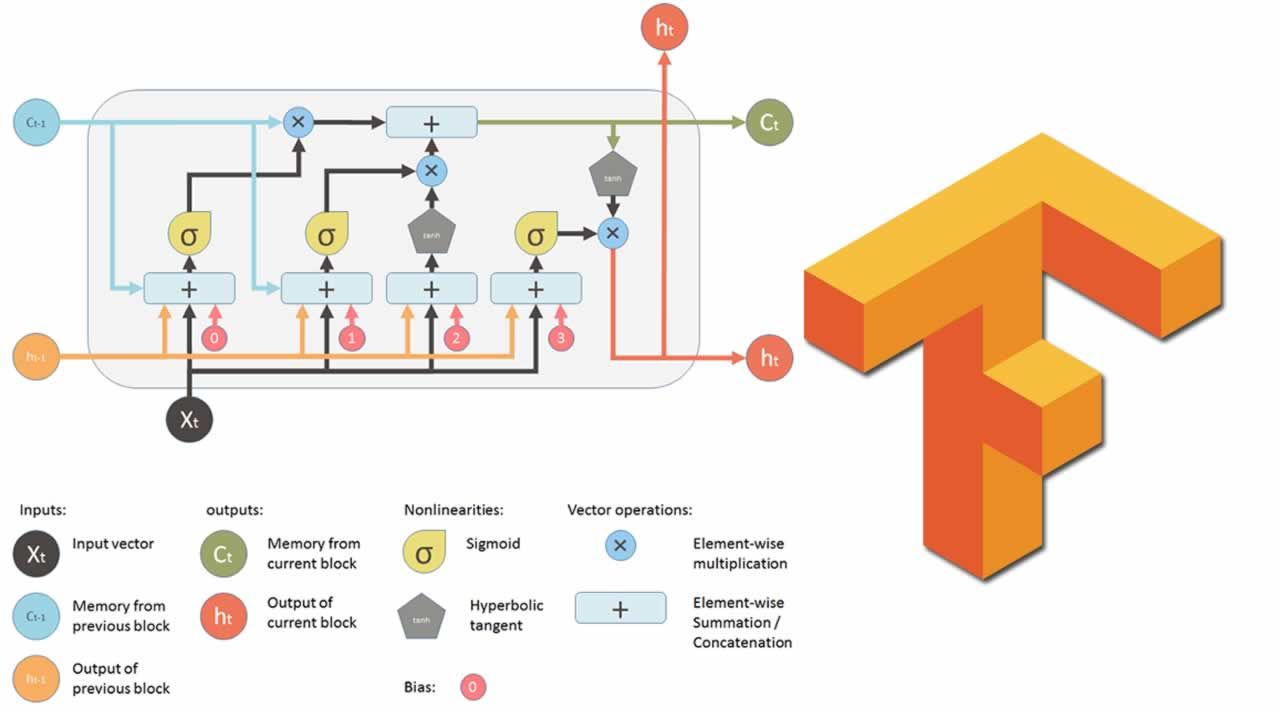

Long Short-Term Memory networks, usually called “LSTMs”, are a special kind of recurrent neural network (RNN) capable of learning long-term dependencies. They do this through a cell state that lets them retain information for long periods of time, which is why they are widely used in domains like machine translation and speech recognition.
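To make the idea of a cell state concrete, here is a minimal sketch of a single LSTM step using the standard gate equations, reduced to a hidden size of 1 so it runs in plain Python. The function names, the `params` dictionary, and every weight value are assumptions chosen for illustration. They are not learned weights, and this is not how a library such as TensorFlow implements the layer.

```python
import math


def sigmoid(x):
    """Squash a value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell (hidden size 1, illustration only)."""
    # Each gate looks at the current input and the previous hidden state.
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    # The cell state keeps a fraction f of the old memory and adds a
    # fraction i of the new candidate.
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c


# Hypothetical hand-picked weights: forget gate close to 1 (keep memory),
# input gate close to 0 (ignore new inputs).
params = {
    "wf": 0.0, "uf": 0.0, "bf": 10.0,
    "wi": 0.0, "ui": 0.0, "bi": -10.0,
    "wo": 1.0, "uo": 0.0, "bo": 0.0,
    "wg": 1.0, "ug": 0.0, "bg": 0.0,
}

h, c = 0.0, 1.0  # start with the value 1.0 stored in the cell state
for x in [0.3, -0.8, 0.5] * 20:  # 60 time steps of arbitrary input
    h, c = lstm_step(x, h, c, params)

print(c)  # the stored value is still close to 1.0 after 60 steps
```

With these settings the cell state barely changes over 60 steps, which is the "retain information for long periods" behaviour in miniature. In a trained network the gate values are learned, so the cell decides for itself what to keep and what to overwrite.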

Why are LSTM networks needed?

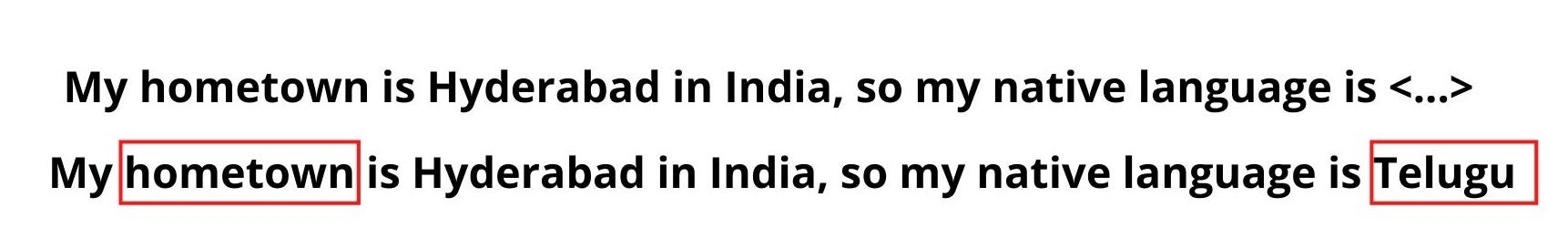

Consider a real-life example to understand this. As you read this article, you understand each word in the context of the words that came before it. You don’t start from scratch with every word; as you read further, your brain keeps building on the connections it has already formed.

Traditional neural networks cannot work this way, which is a major shortcoming. They suffer from short-term memory: if a sequence is too long, they have a hard time carrying information from earlier steps to later ones. This is where LSTM networks come into the picture.

Consider the two sentences below. If we have to predict the text marked by “<…>” in the first sentence, the best prediction would be “Telugu”, because the context of the sentence tells us we are talking about the city “Hyderabad”, where the native language is Telugu. This is easy for the human brain, but it is quite difficult for a neural network model to pick up the semantics.

The word “Hyderabad”, which indicates that the language will be “Telugu”, appears at the start of the sentence. To predict correctly, the neural network has to remember the earlier words in the sequence, which is what LSTM networks are designed to do.
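The short-term memory problem can be seen in a toy experiment. The sketch below runs a plain scalar RNN (no gates) on two sequences that differ only in their first input, then measures how much that first input still affects the final hidden state. The weights `w` and `u` and the helper names are assumptions made up for this illustration. In real training the issue usually shows up as vanishing gradients, and this forward-pass version is only a simplified picture of it.

```python
import math


def rnn_final_state(inputs, w=0.5, u=1.0):
    """Run a plain scalar RNN and return its last hidden state."""
    h = 0.0
    for x in inputs:
        # The new state mixes the old state (weight w) with the input (weight u).
        h = math.tanh(w * h + u * x)
    return h


def first_input_effect(length):
    """How far apart the final states are when only the first input differs."""
    a = rnn_final_state([1.0] + [0.0] * (length - 1))
    b = rnn_final_state([-1.0] + [0.0] * (length - 1))
    return abs(a - b)


for n in (2, 10, 30):
    print(n, first_input_effect(n))
```

The effect of the first input shrinks quickly as the sequence gets longer, so by the time a plain RNN like this reaches the end of a long sentence, a word from the start (such as “Hyderabad”) has almost no influence left.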

#nlp #lstm #deep-learning #tensorflow