In the previous post, we explained how to reduce dimensions by applying PCA and t-SNE, and how to apply Non-Negative Matrix Factorization for the same purpose. In this post, we will provide a concrete example of how to apply Autoencoders for Dimensionality Reduction. We will work with Python and TensorFlow 2.x.

Autoencoders on MNIST Dataset

We will use the MNIST dataset that ships with TensorFlow, in which each image is 28 x 28 pixels. Flattened, each image becomes a vector of 784 values, so we are dealing with 784 dimensions. Our goal is to reduce the dimensions from 784 to 2 while retaining as much information as possible.
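To make the 784 figure concrete, here is a minimal sketch of flattening a batch of 28 x 28 images with NumPy. The array `images` is a random stand-in (an assumption for illustration), not the real MNIST data.

```python
import numpy as np

# Synthetic stand-in for a small batch of MNIST images (random pixels, not real digits).
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)

# Flatten each 28 x 28 image into a single vector of 784 values.
flat = images.reshape(len(images), -1)

print(images.shape)  # (5, 28, 28)
print(flat.shape)    # (5, 784)
```

Reshaping a flattened row back to 28 x 28 recovers the original image, which is what the `Reshape` layer at the end of the decoder relies on.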

Let’s get our hands dirty!

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense,Flatten,Reshape

from tensorflow.keras.optimizers import SGD

from tensorflow.keras.datasets import mnist

(X_train, y_train), (X_test, y_test) = mnist.load_data()

X_train = X_train/255.0

X_test = X_test/255.0
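Dividing by 255.0 rescales the 8-bit pixel intensities to the range from 0 to 1. The following sketch shows the effect on a few hypothetical pixel values chosen for illustration.

```python
import numpy as np

# Hypothetical pixel values covering the full 8-bit range.
pixels = np.array([0, 64, 128, 255], dtype=np.uint8)

# Dividing by 255.0 converts the integers to floating point and maps them to [0, 1].
scaled = pixels / 255.0

print(scaled.min(), scaled.max())  # 0.0 1.0
```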

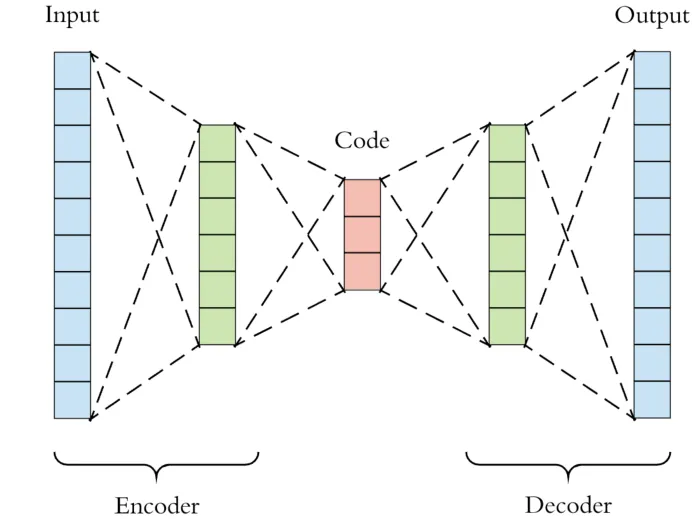

#### Encoder

encoder = Sequential()

encoder.add(Flatten(input_shape=[28,28]))

encoder.add(Dense(400,activation="relu"))

encoder.add(Dense(200,activation="relu"))

encoder.add(Dense(100,activation="relu"))

encoder.add(Dense(50,activation="relu"))

encoder.add(Dense(2,activation="relu"))
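One consequence of ending the encoder with a ReLU activation is that the two encoded coordinates can never be negative. The sketch below imitates that final layer in NumPy; the weights `W`, the bias `b`, and the `hidden` inputs are random placeholders assumed for illustration, not values from a trained model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical weights for a final Dense(2, activation="relu") layer fed by 50 units.
W = rng.normal(size=(50, 2))
b = rng.normal(size=2)

# Random stand-in for the output of the previous 50-unit layer.
hidden = rng.normal(size=(1000, 50))

# ReLU replaces every negative value with zero.
codes = np.maximum(hidden @ W + b, 0.0)

print(codes.shape)               # (1000, 2)
print(bool((codes >= 0).all()))  # True
```

In practice this means the 2D encoding occupies only the non-negative quadrant of the plane.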

#### Decoder

decoder = Sequential()

decoder.add(Dense(50,input_shape=[2],activation='relu'))

decoder.add(Dense(100,activation='relu'))

decoder.add(Dense(200,activation='relu'))

decoder.add(Dense(400,activation='relu'))

decoder.add(Dense(28 * 28, activation="relu"))

decoder.add(Reshape([28, 28]))
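To get a feel for the size of the two networks, the trainable parameters can be counted by hand: a fully connected layer with `n_in` inputs and `n_out` outputs has `n_in * n_out` weights plus `n_out` biases, while the flatten and reshape layers add none. The helper `dense_params` below is a hypothetical name introduced only for this sketch.

```python
def dense_params(sizes):
    """Count weights and biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))

# Layer widths taken from the encoder and decoder definitions.
encoder_sizes = [784, 400, 200, 100, 50, 2]
decoder_sizes = [2, 50, 100, 200, 400, 784]

encoder_params = dense_params(encoder_sizes)
decoder_params = dense_params(decoder_sizes)

print(encoder_params)  # 419452
print(decoder_params)  # 420234
```

The decoder mirrors the encoder, so the two counts differ only through the bias terms.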

#### Autoencoder

autoencoder = Sequential([encoder,decoder])

autoencoder.compile(loss="mse")

autoencoder.fit(X_train,X_train,epochs=50)

encoded_2dim = encoder.predict(X_train)
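The `loss="mse"` setting used when compiling the autoencoder measures how far the reconstructed image is from the input image, which is why the same array is passed as both input and target. As a rough sketch of what that number represents, the mean squared error can be computed by hand in NumPy; the two small arrays are made-up values assumed for illustration.

```python
import numpy as np

# Made-up "original" and "reconstructed" pixel values for two tiny images.
original = np.array([[0.0, 0.5], [1.0, 0.25]])
reconstructed = np.array([[0.1, 0.5], [0.8, 0.25]])

# Mean squared error: the average of the squared pixel-wise differences.
mse = np.mean((original - reconstructed) ** 2)

print(round(float(mse), 4))  # 0.0125
```

A lower value means the 2-dimensional bottleneck preserved more of the information needed to rebuild the image.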

## The 2D

import pandas as pd

import seaborn as sns

AE = pd.DataFrame(encoded_2dim, columns = ['X1', 'X2'])

AE['target'] = y_train

sns.lmplot(x='X1', y='X2', data=AE, hue='target', fit_reg=False, height=10)
