Standard, Recurrent, Convolutional, & Autoencoder Networks

With the rapid development of deep learning, a host of neural network architectures has been created to address a wide variety of tasks and problems. Although there are countless architectures, here are eleven that are essential for any deep learning engineer to understand, split into four general categories: the standard networks, the recurrent networks, the convolutional networks, and the autoencoders.

All diagrams created by Author.

The Standard Networks

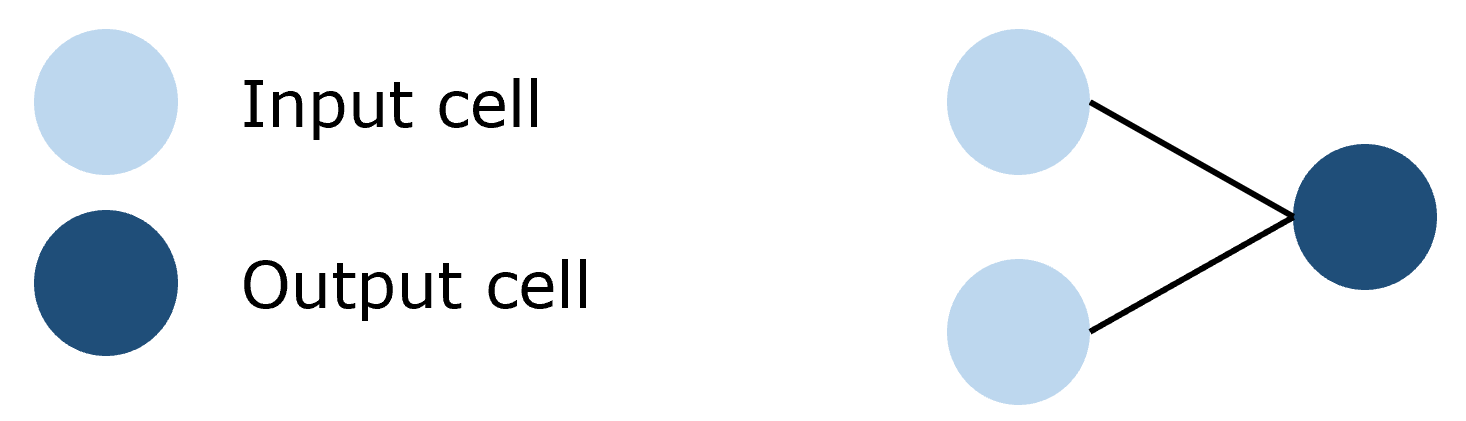

1 | The Perceptron

The perceptron is the most basic of all neural networks and a fundamental building block of more complex ones. It simply connects an input cell to an output cell: the input is multiplied by a weight, added to a bias, and passed through an activation function.
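To make the perceptron concrete, here is a minimal sketch in Python with NumPy. It assumes the classic formulation (a weighted sum of the inputs plus a bias, passed through a step activation). The function name `perceptron` and the weight and bias values are hypothetical, chosen only so that the unit behaves like a logical AND.

```python
import numpy as np

def perceptron(x, w, b):
    """Weighted sum of the inputs plus a bias, passed through a step activation."""
    return 1 if np.dot(w, x) + b > 0 else 0

# Hypothetical parameters (not learned): they make the unit act as a logical AND.
w = np.array([1.0, 1.0])
b = -1.5

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
outputs = [perceptron(np.array(x, dtype=float), w, b) for x in inputs]
print(outputs)
```

Only the input `(1, 1)` pushes the weighted sum above zero, so it is the only case where the unit fires.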

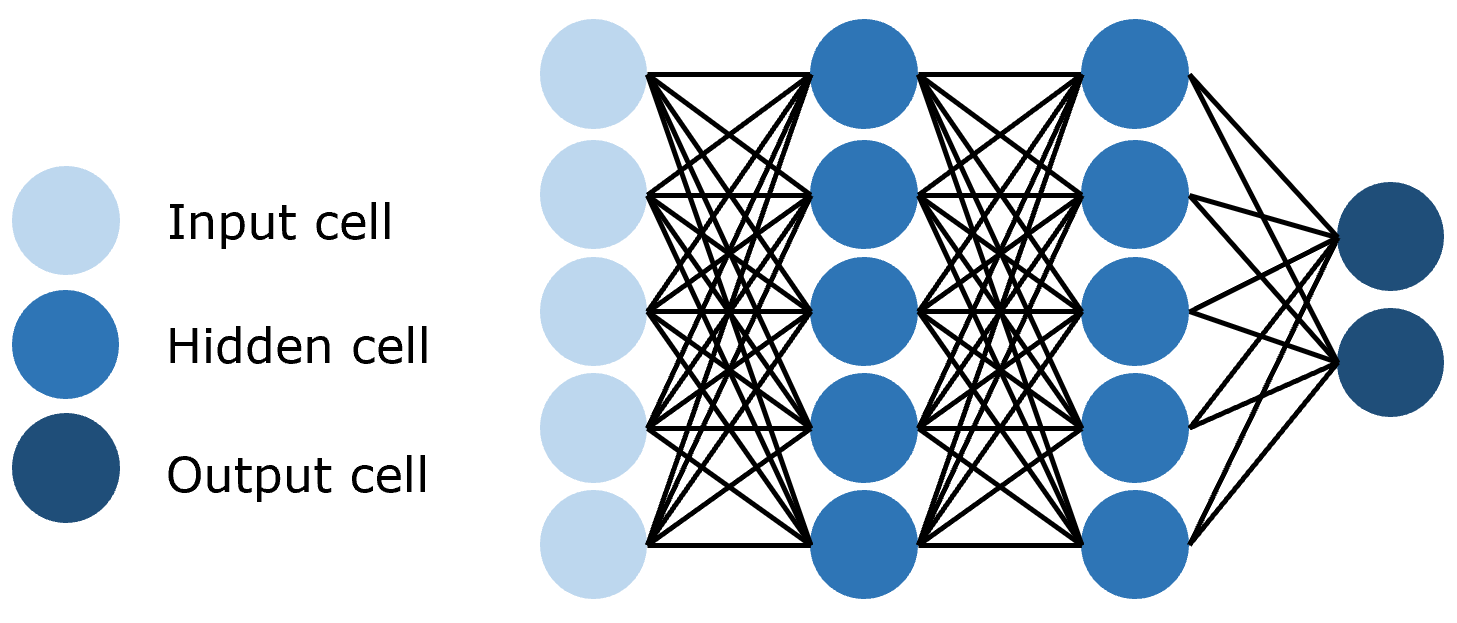

2 | The Feed-Forward Network

The feed-forward network is a collection of perceptrons arranged in three fundamental types of layers: input layers, hidden layers, and output layers. At each connection, the signal from the previous layer is multiplied by a weight, added to a bias, and passed through an activation function. Feed-forward networks are trained with backpropagation, which iteratively updates the parameters until the network reaches the desired performance.
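The "multiply by a weight, add a bias, apply an activation" step can be sketched as a forward pass in NumPy. This is an illustration under stated assumptions, not a full implementation: the layer sizes, the ReLU activation, and the random weights are all hypothetical choices, and the backpropagation training loop is left out.

```python
import numpy as np

def relu(z):
    """Activation function: keeps positive values, zeroes out the rest."""
    return np.maximum(0.0, z)

def forward(x, layers):
    """Pass x through each (W, b) pair: multiply by weights, add bias, activate."""
    a = x
    for W, b in layers:
        a = relu(W @ a + b)
    return a

# Hypothetical network: 3 inputs -> 4 hidden units -> 2 outputs.
rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(4, 3)), np.zeros(4)),  # input layer -> hidden layer
    (rng.normal(size=(2, 4)), np.zeros(2)),  # hidden layer -> output layer
]

x = np.array([0.5, -1.0, 2.0])
y = forward(x, layers)
print(y.shape)
```

Training would compare `y` against a target, backpropagate the error, and nudge every `W` and `b` accordingly.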

3 | Residual Networks (ResNet)

One issue with deep feed-forward neural networks is the vanishing gradient problem, which occurs when a network is too deep for useful information to be backpropagated all the way through it. As the signal that updates the parameters travels backward through the network, it gradually diminishes until the weights in the earliest layers are barely updated at all.
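A toy calculation shows how quickly that signal can shrink. The sketch below assumes a deliberately simplified chain with one sigmoid unit per layer, a weight of 1, and the same pre-activation at every layer. Real networks are messier, and the helper `gradient_scale` is hypothetical, but the multiplicative effect is the same.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gradient_scale(depth, z=0.0, w=1.0):
    """Size of the backpropagated signal after `depth` sigmoid layers.

    Toy assumption: one unit per layer, weight w, pre-activation z everywhere,
    so each layer multiplies the signal by the same local derivative.
    """
    local = w * sigmoid(z) * (1.0 - sigmoid(z))  # derivative of one layer
    return abs(local) ** depth

scales = {depth: gradient_scale(depth) for depth in (1, 5, 20)}
for depth, scale in scales.items():
    print(depth, scale)
```

The sigmoid derivative is at most 0.25, so in this toy setup every extra layer shrinks the signal by at least a factor of four.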

To address this problem, a residual network employs skip connections, which propagate signals across a ‘jumped’ layer. This reduces the vanishing gradient problem because the skip connections are less vulnerable to it. Over time, the network learns to restore the skipped layers as it learns the feature space, but it trains more efficiently because it is less vulnerable to vanishing gradients and needs to explore less of the feature space.
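A skip connection can be sketched in a few lines of NumPy. This is a minimal illustration that assumes a block of two dense layers with ReLU activations, where the input is added back to the block's output. Practical ResNets typically use convolutional layers instead, and the name `residual_block` is hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def residual_block(x, W1, b1, W2, b2):
    """Compute relu(F(x) + x), where F is two dense layers.

    The '+ x' term is the skip connection: the input bypasses F entirely.
    """
    h = relu(W1 @ x + b1)
    f = W2 @ h + b2
    return relu(f + x)

x = np.array([1.0, 2.0, 3.0])

# With all weights at zero, F(x) contributes nothing and the input
# still reaches the output through the skip connection.
zero_W = np.zeros((3, 3))
zero_b = np.zeros(3)
out = residual_block(x, zero_W, zero_b, zero_W, zero_b)
print(out)
```

Because the block starts out close to an identity mapping, the signal (and its gradient) has a direct path around the layers inside the block.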
