Problem Statement

Twitter has become an important communication channel in times of emergency. The ubiquitousness of smartphones enables people to announce an emergency they’re observing in real-time. Because of this, more agencies are interested in programmatically monitoring Twitter (i.e. disaster relief organizations and news agencies). However, identifying such tweets has always been a difficult task because of the ambiguity in the linguistic structure of the tweets and hence it is not always clear whether an individual’s words are actually announcing a disaster. For example, if a person tweets:

“On the plus side look at the sky last night, it was ablaze”

The person explicitly uses the word “ABLAZE” here but means it metaphorically. For a human, it is easier to understand it especially if there is some visual aid provided as well. However, for machines, it is not always clear.Kaggle hosted a challenge named Real or Not whose aim was to use the Twitter data of disaster tweets, originally created by the company figure-eight, to classify Tweets talking about real disaster against the ones talking about it metaphorically.

Approach

BERT (Bidirectional Encoder Representations from Transformers) is a deep learning model developed by Google. Ever since it was open-sourced by Google, it has been adopted by many researchers and industries and has applied in solving many NLP tasks. The model has been able to achieve state of the art performance on most of the problems it has been applied upon.ktrain is a lightweight wrapper for the deep learning library TensorFlow Keras (and other libraries) to help build, train, and deploy neural networks and other machine learning models. Inspired by ML framework extensions like fastai and ludwig, it is designed to make deep learning and AI more accessible and easier to apply for both newcomers and experienced practitioners._ktrain _provides support for applying many pre-trained deep learning architectures in the domain of Natural Language Processing and BERT is one of them. To solve this problem, we will be using the implementation of pre-trained BERT provided by _ktrain _and fine-tune it to classify whether the disaster tweets are real or not.

Solution

The dataset consist of three files:

- train.csv

- test.csv

- sample_submission.csv

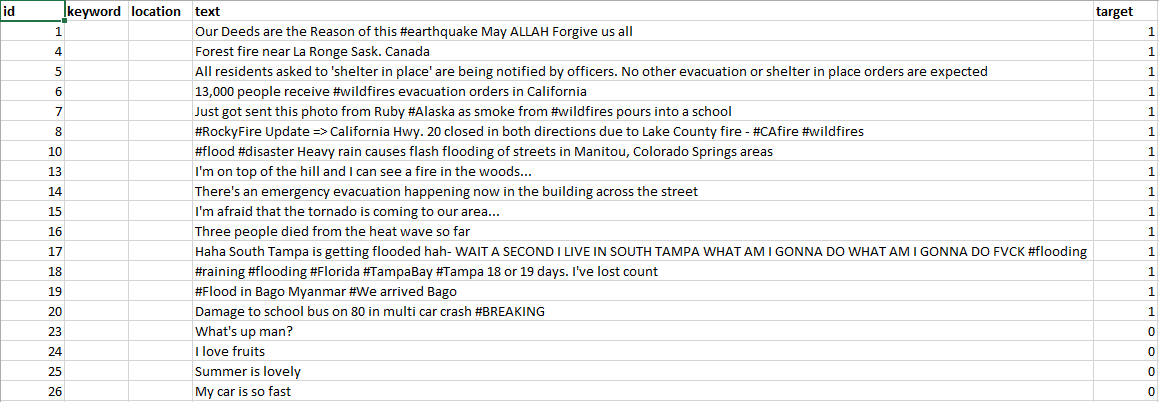

The structure of the file train.csv is as follows:

id- a unique identifier for each tweettext- the text of the tweetlocation- the location the tweet was sent from (may be blank)keyword- a particular keyword from the tweet (may be blank)target- in train.csv only, this denotes whether a tweet is about a real disaster (1) or not (0)

We are only interested in the text and the **target **column and will be using them for the classification of tweets.

#deep-learning #bert #twitter #supervised-learning #deep learning