The word “experiment” means different things to different people. For scientists (and hopefully for rigorous data scientists), an experiment is an empirical procedure to determine if an outcome agrees or conflicts with some hypothesis. In a machine learning experiment, your hypothesis could be that a specific algorithm (say gradient boosted trees) is better than alternatives (such as random forests, SVM, linear models). By conducting an experiment and running multiple trials by changing variable values, you can collect data and interpret results to accept or reject your hypothesis. Scientists call this process the scientific method.

Regardless of whether you follow the scientific method or not, conducting and managing machine learning experiments is hard. It’s challenging because of the sheer number of variables and artifacts to track and manage. Here’s a non-exhaustive list of things you may want to keep track of:

- Parameters: hyperparameters, model architectures, training algorithms

- Jobs: pre-processing job, training job, post-processing job — these consume other infrastructure resources such as compute, networking and storage

- Artifacts: training scripts, dependencies, datasets, checkpoints, trained models

- Metrics: training and evaluation accuracy, loss

- Debug data: weights, biases, gradients, losses, optimizer state

- Metadata: experiment, trial and job names, job parameters (CPU, GPU and instance type), artifact locations (e.g. S3 bucket)

As a developer or data scientist, the last thing you want to do is to spend more time managing spreadsheets or databases to track experiments and the associated entities and their relationships with each other.
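To make the bookkeeping burden concrete, here is a minimal sketch of the record you would have to maintain by hand for every trial if you tracked the categories listed above yourself. This is not the SageMaker Experiments API. The `TrialRecord` class, its field names, the `best_trial` helper, and the experiment and trial names are all hypothetical, and the metric values are placeholders chosen for illustration, not measured results.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class TrialRecord:
    """One trial of an experiment. All names here are hypothetical."""
    experiment_name: str
    trial_name: str
    parameters: dict = field(default_factory=dict)  # hyperparameters, architecture, algorithm
    jobs: list = field(default_factory=list)        # pre-processing, training, post-processing jobs
    artifacts: dict = field(default_factory=dict)   # scripts, datasets, checkpoints, models
    metrics: dict = field(default_factory=dict)     # accuracy, loss
    metadata: dict = field(default_factory=dict)    # instance type, artifact locations


def best_trial(records, metric):
    """Return the record with the highest value of `metric`, or None if no trial reports it."""
    scored = [r for r in records if metric in r.metrics]
    return max(scored, key=lambda r: r.metrics[metric]) if scored else None


# Two trials of one experiment; the accuracy numbers are placeholders.
records = [
    TrialRecord(
        experiment_name="algorithm-comparison",
        trial_name="gradient-boosted-trees-1",
        parameters={"algorithm": "gradient_boosted_trees", "max_depth": 6},
        jobs=["training"],
        metrics={"eval_accuracy": 0.91},
    ),
    TrialRecord(
        experiment_name="algorithm-comparison",
        trial_name="random-forest-1",
        parameters={"algorithm": "random_forest", "n_estimators": 200},
        jobs=["training"],
        metrics={"eval_accuracy": 0.89},
    ),
]

winner = best_trial(records, "eval_accuracy")
print(json.dumps(asdict(winner), indent=2))
```

Even this toy version leaves the hard parts unsolved: nothing fills in the fields automatically, nothing links a record to the job that produced it, and nothing guarantees that the record survives once its author moves on.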

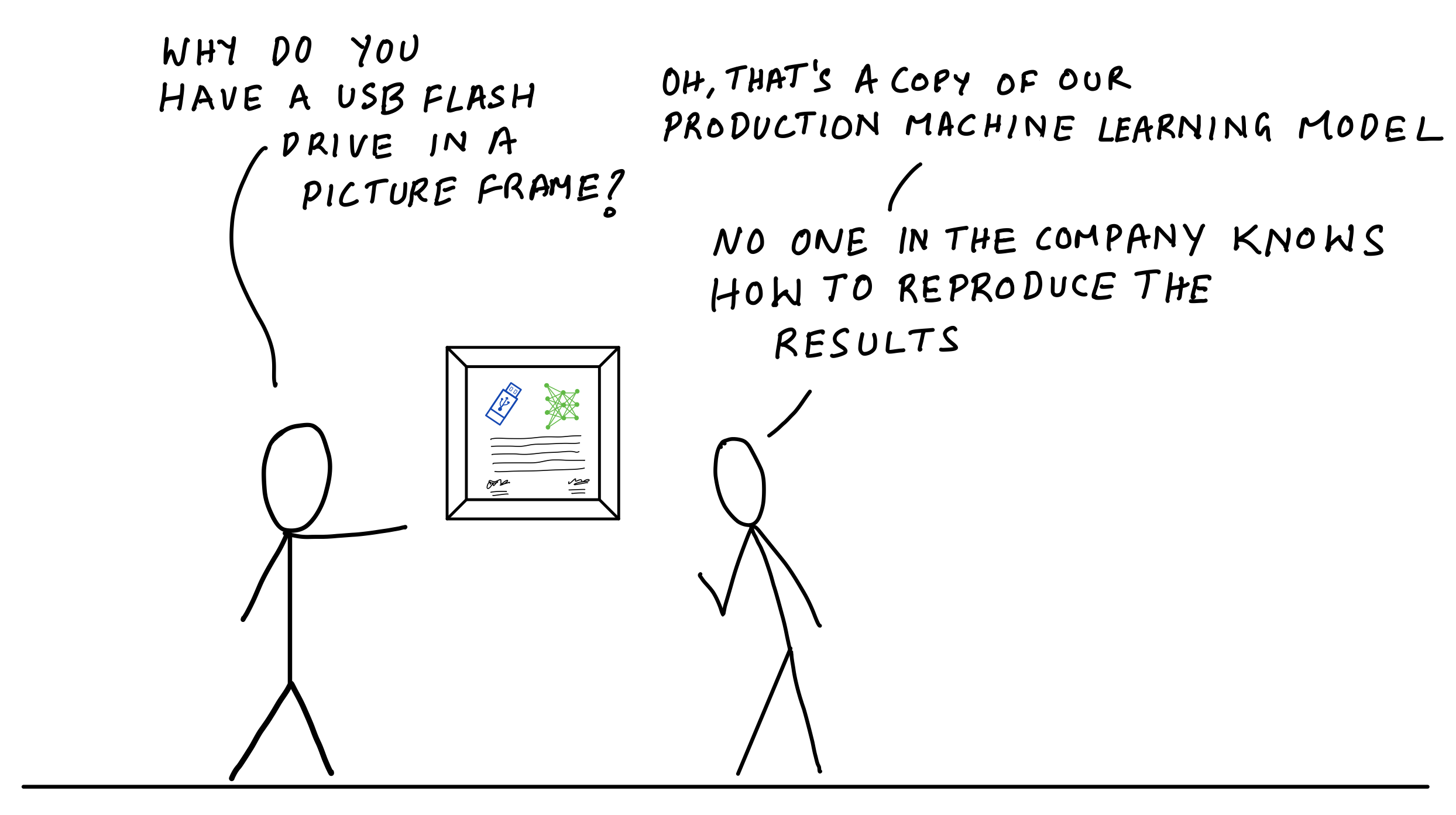

Illustration by author

How often have you struggled to figure out which dataset, training scripts and model hyperparameters were used for a model that you trained a week ago, a month ago, or a year ago? You can look through your notes, audit trails, logs and Git commits and try to piece together the conditions that produced that model, but if you didn’t have everything organized in the first place, you can never be sure you’ve got it right. I’ve had more than one developer tell me something to the effect of “we don’t know how to reproduce our production model, and the person who worked on it isn’t around anymore, but it works and we don’t want to mess with it”.

In this blog post, I’ll discuss how you can define and organize your experiments so that you don’t end up in such a situation. Through a code example, I’ll show how you can run experiments, track experiment data and retrieve it for analysis using Amazon SageMaker Experiments. The data you need about a specific experiment or training job will be quickly accessible whenever you need it, without you having to do the bookkeeping.
