Table of Contents:

- Introduction

- Data Preparation and Pre-processing

- Long Short Term Memory (LSTM) — Under the Hood

- Encoder Model Architecture (Seq2Seq)

- Encoder Code Implementation (Seq2Seq)

- Decoder Model Architecture (Seq2Seq)

- Decoder Code Implementation (Seq2Seq)

- Seq2Seq (Encoder + Decoder) Interface

- Seq2Seq (Encoder + Decoder) Code Implementation

- Seq2Seq Model Training

- Seq2Seq Model Inference

- Resources & References

1. Introduction

_Neural machine translation (NMT) is an approach to machine translation that uses an artificial neural network to predict the likelihood of a sequence of words, typically modeling entire sentences in a single integrated model._

Translating from one language to another was long one of the hardest problems for computers, because simple rule-based systems could not capture the nuances involved. Statistical models came next. After deep learning arrived, the field became known as Neural Machine Translation, and it now achieves state-of-the-art results.

I want this post to be beginner-friendly, so we will implement one specific architecture that showed early signs of success: Seq2Seq.

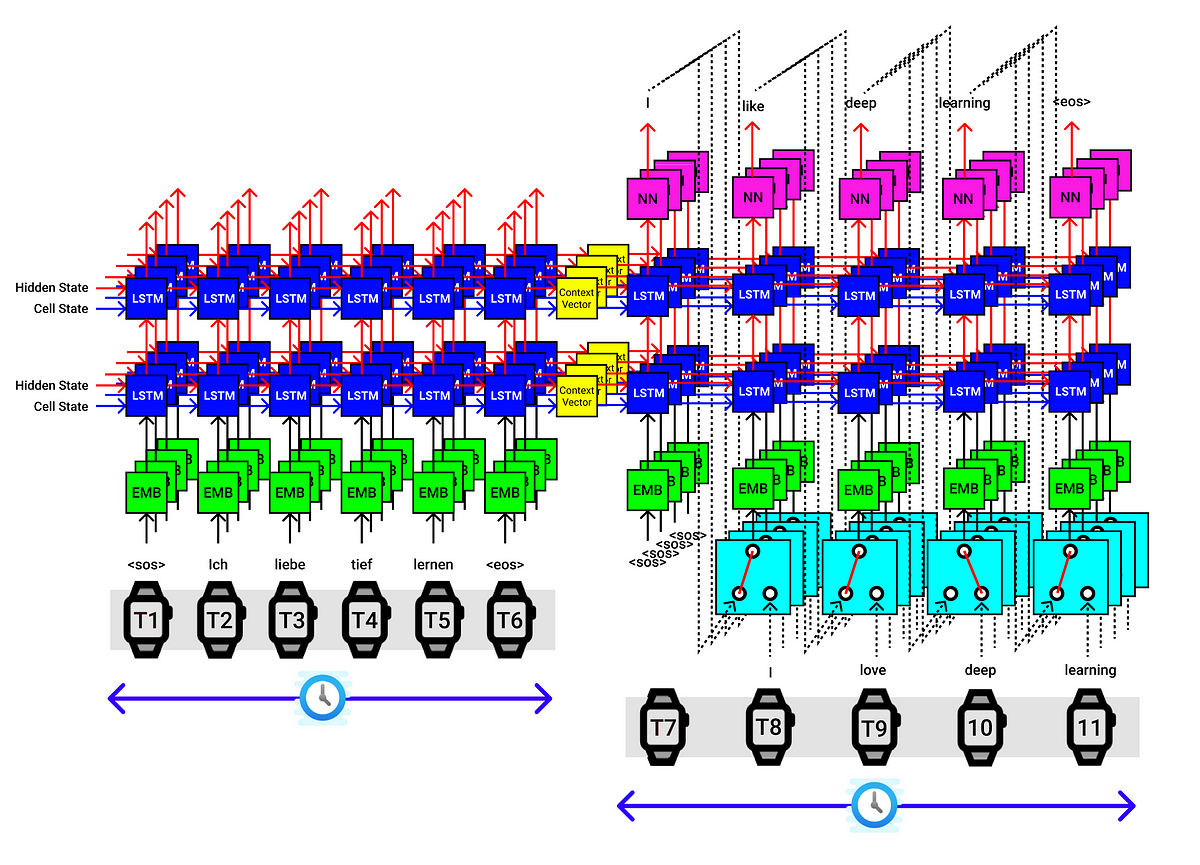

The Sequence to Sequence (seq2seq) model in this post uses an encoder-decoder architecture built on a type of RNN called LSTM (Long Short Term Memory). The encoder network encodes the input-language sequence into a single vector, also called a Context Vector.

This **Context Vector** is said to contain an abstract representation of the input-language sequence.
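The "single context vector" idea can be made concrete with a minimal pure-Python sketch of an LSTM encoder. This is not the implementation used later in this post: it assumes randomly initialised, untrained weights, a made-up toy German vocabulary with random embeddings, and omits bias terms. The names `LSTMCell`, `encode` and `vocab` are illustrative only (a real model would use a library layer such as PyTorch's `torch.nn.LSTM`). What it shows is that sentences of different lengths are compressed into a context of the same fixed size, the encoder's final hidden state.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    # Plain matrix-vector product on nested lists.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class LSTMCell:
    """Minimal LSTM cell (no bias terms) acting on the concatenation [x; h]."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        n = input_size + hidden_size

        def init():
            return [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(hidden_size)]

        # Input (i), forget (f), candidate (g) and output (o) gate weights.
        self.W_i, self.W_f, self.W_g, self.W_o = init(), init(), init(), init()
        self.hidden_size = hidden_size

    def step(self, x, h, c):
        z = x + h  # list concatenation: [x; h]
        i = [sigmoid(v) for v in matvec(self.W_i, z)]
        f = [sigmoid(v) for v in matvec(self.W_f, z)]
        g = [math.tanh(v) for v in matvec(self.W_g, z)]
        o = [sigmoid(v) for v in matvec(self.W_o, z)]
        # New cell state keeps part of the old memory (f) and adds new content (i * g).
        c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        return h, c


def encode(cell, embeddings, tokens):
    """Run the encoder over a sentence; the final (h, c) pair serves as the context."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    for tok in tokens:
        h, c = cell.step(embeddings[tok], h, c)
    return h, c


EMB_DIM, HIDDEN = 4, 8
rng = random.Random(42)
# Hypothetical toy German vocabulary with random (untrained) embeddings.
vocab = ["ein", "hund", "rennt", "zwei", "kinder", "spielen", "im", "park"]
embeddings = {w: [rng.uniform(-1, 1) for _ in range(EMB_DIM)] for w in vocab}
encoder = LSTMCell(EMB_DIM, HIDDEN)

short_ctx, _ = encode(encoder, embeddings, ["ein", "hund", "rennt"])
long_ctx, _ = encode(encoder, embeddings, ["zwei", "kinder", "spielen", "im", "park"])
print(len(short_ctx), len(long_ctx))  # 8 8
```

A three-word and a five-word sentence both end up as an 8-dimensional vector; that fixed size is what lets the same decoder consume any input sentence.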

This vector is then passed to the decoder network, which generates the translation in the output language one word at a time.
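The decoder's "one word at a time" behaviour can be sketched as a greedy loop: start from the context vector, feed a start token, pick the highest-scoring next word, feed that word back in, and stop at an end token. In this sketch `toy_step` is a hypothetical, hard-coded stand-in for a trained LSTM decoder step (it ignores the state and reads scores from a fixed table), and the `<sos>`/`<eos>` token names and the `max_len` cap are assumptions, not details from this post.

```python
from typing import Callable, Dict, List, Tuple

State = Tuple[float, ...]
StepFn = Callable[[str, State], Tuple[Dict[str, float], State]]


def greedy_decode(step: StepFn, context: State, sos: str = "<sos>",
                  eos: str = "<eos>", max_len: int = 20) -> List[str]:
    state = context  # the decoder starts from the encoder's context vector
    token = sos
    output: List[str] = []
    for _ in range(max_len):
        scores, state = step(token, state)
        token = max(scores, key=scores.get)  # greedy: take the most likely word
        if token == eos:
            break
        output.append(token)  # the chosen word becomes the next input
    return output


# Hypothetical next-word scores standing in for a trained decoder.
TOY_SCORES = {
    "<sos>": {"a": 0.9, "dog": 0.05, "<eos>": 0.05},
    "a": {"dog": 0.8, "runs": 0.1, "<eos>": 0.1},
    "dog": {"runs": 0.7, "a": 0.1, "<eos>": 0.2},
    "runs": {"<eos>": 0.9, "dog": 0.1},
}


def toy_step(prev_token: str, state: State) -> Tuple[Dict[str, float], State]:
    # Stand-in for one LSTM decoder step: returns scores and an (unchanged) state.
    return TOY_SCORES[prev_token], state


print(greedy_decode(toy_step, context=(0.1, -0.3)))  # ['a', 'dog', 'runs']
```

Greedy decoding is the simplest strategy; beam search, which keeps several candidate translations at each step, is a common alternative.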

Here I am building a German-to-English neural machine translation model, but the same concept extends to other sequence problems such as Named Entity Recognition (NER), text summarization, and other language-modeling tasks.
