“Laziness isn’t that bad….”

In the previous pose(link below), I highlighted how functional programming simplifies the abstract idea of exploring graphs. I demonstrated one of the salient features of Haskell (a FP language) that unburdens us from explicitly managing states while gradually discovering paths between nodes.

A holistic perspective into exploring graphs using functional programming

Here, I want to take this concept of “laziness” a bit further. Lazy evaluation or laziness as it is colloquially known is delayed evaluation of an expression until we really require its result. It’s like making a promise, fulfilling it only at the nick of time. It lets us define infinite data structures without explicit allocating memory to create and store them. Imagine you are given an infinite steam of large integers **I ( I :: Integer). **For some arcane reason, you want to sort them as they come in. How will you accomplish this? If you’re familiar with traditional sorting algorithms like QuickSort, Merge sort, you will jump and say use them. STOP! Both require data to be in memory. Your stream can look like this : 13,1,2,4,1,9,0,8,11,4,5,3,66,77,112,1091,99,11,2,3,4,5,6…

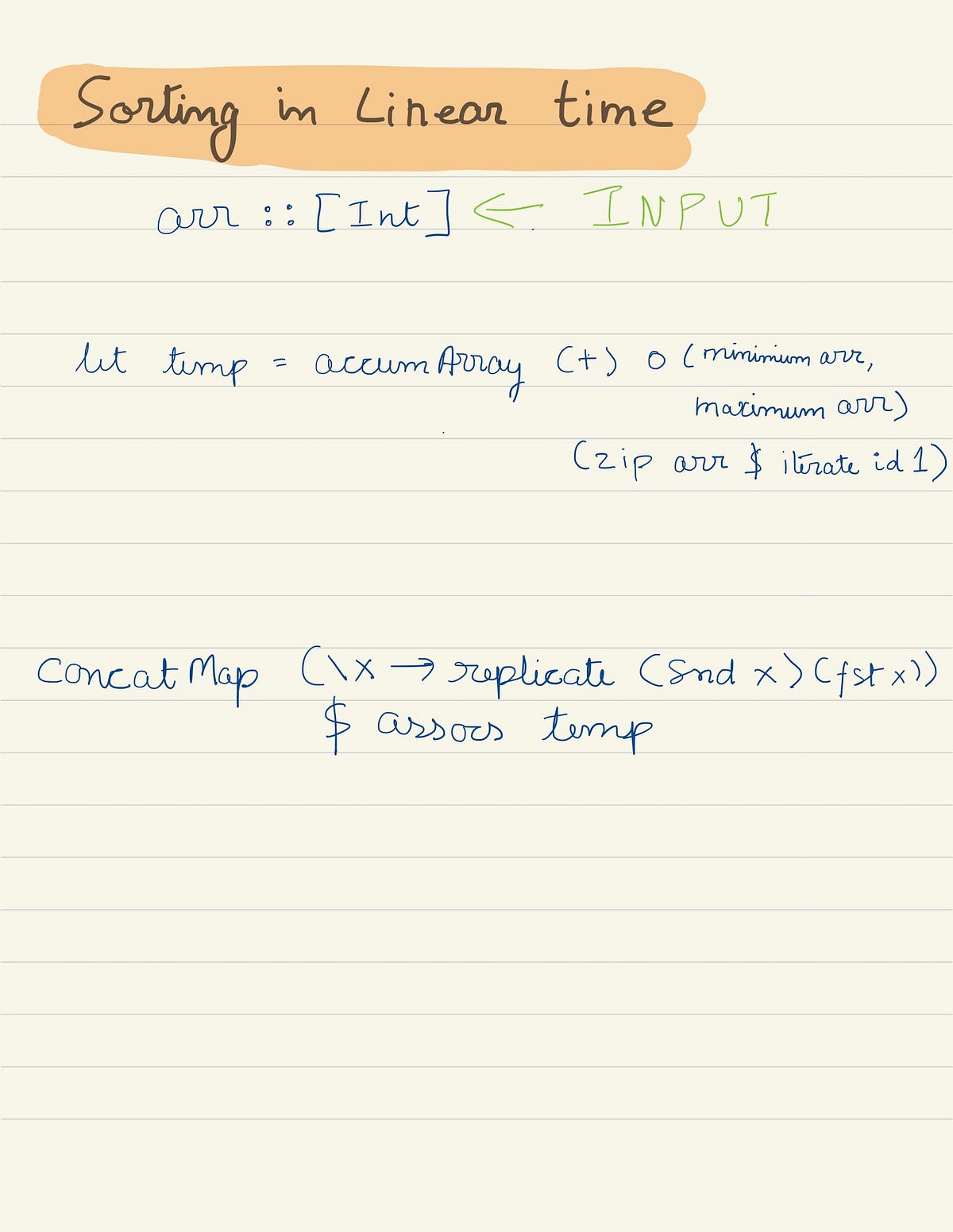

The only information you have is the bounds of this array, i.e., the lowest value and the highest one. A useful strategy in such a scenario is not to think about any comparison based sorting algorithms — they all have lower bound of Ω(n lg n) (note: unlike Big O, the Big Omega Ω denotes that our function is bounded below). Instead, if we could just ‘tag’ integers as they come by their respective keys which are just integers between lower and upper bound, we would be able to ‘almost’ solve this problem. It’s similar to counting the number of occurrences of each integer in incoming stream, tagging them as they come and accumulating the count of each element. Finally, in the last step we could just exapnd the respective count of each element in the stream to get the sorted array. Data.Array module in Haskell has accumArray function which takes a binary function, initial accumulator, and array to perform the above task.

accumArray :: Ix i = > (e → a → e) → e → (i, i) →[(i, a)] → Array i e

Here, Ix is the class of all **index **types(those that can be used as indices in arrays) — there must be a mapping of a value of type _a _to an offset in a linear array. (e → a →e) denotes the accumulating function, which in our case is simply (+) :: Num l=> l→ l→ l. It’s followed by initial entry at each index with type e (an Integer). (i,i) are bounds of the array. They must be indexable, i.e., have total order property. Finally, as the last argument, we have list of type [(i,a)], an association list of [(1, element1), (1, element2)…]. This is accomplished by zipping our list with 1’s — zip arr $ iterate id 1

accumArray f e (l,u) list creates an array with indices l…u, in time proportional to u-l, provided f can be computed in constant time. The result is an array whose size is determined by bounds, and whose values are denoted by separating all the values in the list according to their index, and then performing a left-fold operation, using f, on each collection, starting with the value e. You might be thinking that this can be readily used for creating a histogram or performing sorting using bins; well, you’re right.

#haskell #function