Last year, researchers at Google released BERT, which proved to be one of the most efficient and effective algorithm changes since RankBrain. Judging by the initial results, BigBird is showing similar signs!

In this article, I’ve covered:

- A brief overview of Transformers-based Models,

- Limitations of Transformers-based Models,

- What is BigBird, and

- Potential applications of BigBird.

Let’s begin!

A Brief Overview of Transformers-Based Models

Natural Language Processing (NLP) has improved drastically over the past few years, and Transformers-based Models have played a significant role in this. Still, there is a lot to uncover.

Transformers, a Natural Language Processing model architecture introduced in 2017, are primarily known for increasing the efficiency of handling and comprehending sequential data for tasks like text translation and summarization.

Unlike Recurrent Neural Networks (RNNs), which must process the beginning of the input before its ending, Transformers can process the whole input in parallel and thus significantly reduce training time.
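To make the contrast concrete, here is a minimal sketch in plain Python. It is a toy illustration, not how any real library implements these models: the function names (`rnn_states`, `attend_one`, `self_attention`), the scalar RNN weights, and the choice to use the inputs directly as queries, keys, and values (no learned projections) are all assumptions made for brevity.

```python
import math

def rnn_states(inputs, w_in=0.5, w_rec=0.9):
    """Toy scalar RNN: each state depends on the previous one,
    so the positions have to be processed one after another."""
    state, states = 0.0, []
    for x in inputs:
        state = math.tanh(w_in * x + w_rec * state)
        states.append(state)
    return states

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend_one(query, vectors):
    """Scaled dot-product attention for a single position.
    It reads only the inputs, never another position's output."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
    weights = softmax(scores)
    return [sum(w * v[d] for w, v in zip(weights, vectors))
            for d in range(len(query))]

def self_attention(vectors):
    """Every position is independent, so these calls could all run in parallel."""
    return [attend_one(query, vectors) for query in vectors]

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three toy 2-d "word vectors"
print(rnn_states([1.0, 2.0, 3.0]))
print(self_attention(tokens))
```

In the RNN, the last state cannot be computed until all earlier states are known. In the attention sketch, the output for any position can be computed on its own, which is what lets Transformers spread the work across parallel hardware. Note that every position is still compared with every other position.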

BERT, one of the biggest milestones in NLP, is an open-sourced Transformers-based Model. Like the BigBird paper, the paper introducing BERT was published by Google researchers; it came out on 11th October 2018.

Bidirectional Encoder Representations from Transformers (BERT) is one of the more advanced Transformers-based models. It is pre-trained on a huge amount of data (pre-training data sets), with BERT-Large trained on over 2,500 million words.

Because BERT is open-sourced, anyone can build their own question answering system on top of it. This too contributed to its wide popularity.

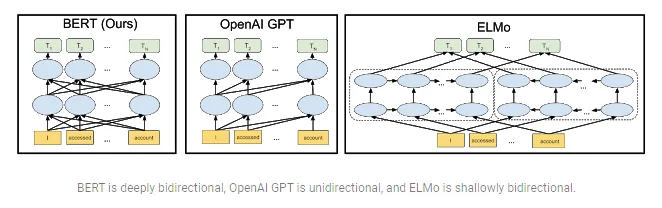

But BERT is not the only contextual pre-trained model. It is, however, deeply bidirectional: it uses the context on both the left and the right of each word, unlike many earlier models. This is also one of the reasons for its success and diverse applications.
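As a rough illustration of what "bidirectional" means here, the sketch below lists which positions a word is allowed to look at under a left-to-right model versus a bidirectional one. This is a simplification that only shows visibility, not BERT's actual training procedure; the helper name `visible_positions` and the example sentence are made up for this illustration.

```python
def visible_positions(seq_len, bidirectional):
    """For each position, return the list of positions it may attend to."""
    if bidirectional:
        # Every position sees the whole sequence, left and right.
        return [list(range(seq_len)) for _ in range(seq_len)]
    # Left-to-right: a position sees only itself and what came before it.
    return [list(range(i + 1)) for i in range(seq_len)]

tokens = ["the", "bank", "of", "the", "river"]
for name, flag in [("left-to-right", False), ("bidirectional", True)]:
    visible = visible_positions(len(tokens), flag)[1]   # position 1 is "bank"
    print(name, "context for 'bank':", [tokens[j] for j in visible])
```

With left-to-right visibility, "bank" is interpreted without ever seeing "river". With bidirectional visibility, the words on both sides are available, which helps tell a river bank from a financial one.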
