Welcome to this Medium article. The job of a self-driving car, like that of any robot, can essentially be broken down into a three-step cycle:

- The first step is to sense or perceive the world.

- The second step is to decide what to do based on that perception.

- The third step is to perform an action to carry out that decision.
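The three-step cycle above can be pictured as a loop that runs over and over. The sketch below is a toy illustration built on loud assumptions: the "world" is reduced to a single number (the car's lateral offset from the lane center), the names `World`, `sense`, `decide`, and `act` are hypothetical, and the proportional steering rule with `gain=0.5` is an arbitrary choice. It shows the shape of the cycle, not how a real self-driving stack makes decisions.

```python
from dataclasses import dataclass


@dataclass
class World:
    # Hypothetical state: lateral offset from lane center in meters
    # (positive means the car sits right of center).
    lateral_offset_m: float


def sense(world: World) -> float:
    """Perceive: estimate the offset. A real car would infer this from
    camera images; this toy simply reads it from the world state."""
    return world.lateral_offset_m


def decide(offset_m: float, gain: float = 0.5) -> float:
    """Decide: a simple proportional rule that steers back toward center."""
    return -gain * offset_m


def act(world: World, steering: float) -> None:
    """Act: apply the command. In this toy model the command shifts the
    car's offset directly, with no vehicle dynamics."""
    world.lateral_offset_m += steering


world = World(lateral_offset_m=1.0)
for step in range(5):
    offset = sense(world)      # 1. sense / perceive
    command = decide(offset)   # 2. decide
    act(world, command)        # 3. act
    print(f"step {step}: offset = {world.lateral_offset_m:.4f} m")
```

With these assumed values each pass halves the offset, so the car drifts back toward the lane center. In a real vehicle, the `sense` step is where computer vision does its work.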

Detecting Lane Lines (Image by author)

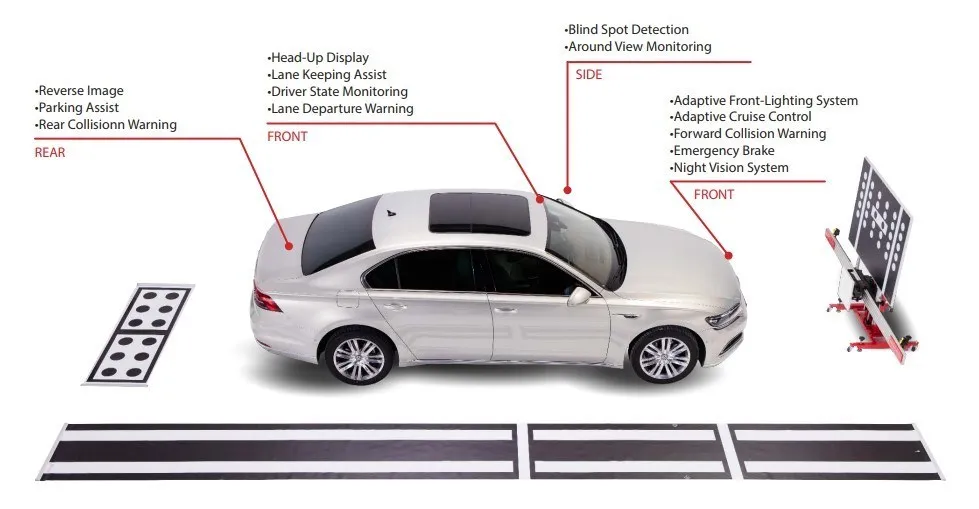

Computer vision is a major part of the perception step in that cycle, and perception is arguably the hardest step: by some estimates, 80% of the challenge of building a self-driving car is perception. Computer vision is the art and science of perceiving and understanding the world around us through images. In the case of self-driving cars, computer vision helps detect lane markings, vehicles, pedestrians, and other elements of the environment so that the car can navigate safely.

Self-driving cars employ a suite of sophisticated sensors, but humans do the job of driving with just two eyes and one good brain. In fact, we can even do it with one eye closed. So let's take a closer look at why using cameras instead of other sensors might be an advantage in developing self-driving cars. Radar and Lidar see the world in 3D, which can be a big advantage for knowing where we are relative to our environment. A camera sees in 2D, but at a much higher spatial resolution than Radar and Lidar, high enough that it's actually possible to infer depth information from camera images. The biggest difference, however, comes down to cost: cameras are significantly cheaper.

It is entirely possible that self-driving cars will eventually be outfitted with just a handful of cameras and a really smart algorithm to do the driving.

Eventually, we'll want to transform our camera images into a bird's-eye view, looking down on the road from above. But to get this perspective transformation right, we first have to correct for the effect of image distortion. Cameras don't create perfect images: some of the objects in an image, especially those near the edges, can get stretched or skewed in various ways, and we need to correct for that. Let's jump into step one: how to undistort our camera images.
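To see why objects near the edges suffer most, it helps to look at one common way of modeling lens distortion: the radial part of the Brown-Conrady model, which OpenCV also uses. A point at distance r from the image center is scaled by a factor of 1 + k1·r² + k2·r⁴, so the error grows quickly with r. The sketch below is a minimal, stdlib-only illustration under stated assumptions: the coefficients `K1` and `K2` are made-up values (real ones come from calibrating a specific camera), points are in normalized image coordinates, tangential distortion is ignored, and `distort`/`undistort` are hypothetical helper names.

```python
import math

# Hypothetical radial distortion coefficients; real values come from calibration.
K1, K2 = -0.25, 0.05


def distort(x: float, y: float, k1: float = K1, k2: float = K2) -> tuple[float, float]:
    """Apply radial distortion to a point in normalized image coordinates."""
    r2 = x * x + y * y
    scale = 1 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd: float, yd: float, k1: float = K1, k2: float = K2,
              iterations: int = 20) -> tuple[float, float]:
    """Invert distort() by fixed-point iteration: guess the ideal point,
    then divide the observed point by the distortion factor at that guess."""
    x, y = xd, yd  # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


# Compare how far a point near the center moves versus one near the edge.
shifts = {}
for name, (x, y) in {"near center": (0.1, 0.0), "near edge": (0.8, 0.0)}.items():
    xd, yd = distort(x, y)
    shifts[name] = math.hypot(xd - x, yd - y)
    print(f"{name}: moved by {shifts[name]:.4f}")
```

With these assumed coefficients the point near the edge moves hundreds of times farther than the one near the center. In practice you would not hand-pick coefficients: OpenCV's `cv2.calibrateCamera` estimates the camera matrix and distortion coefficients from photos of a chessboard, and `cv2.undistort` applies the correction to a whole image.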
