Encoder-decoder models have provided state-of-the-art results in sequence-to-sequence (seq2seq) NLP tasks such as language translation. Multistep time-series forecasting can also be treated as a seq2seq task, for which the encoder-decoder model can be used. This article presents an encoder-decoder model for a time series forecasting task from Kaggle, along with the steps involved in getting a top 10% result.
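To make the seq2seq framing concrete, here is a minimal sketch of how a single series could be sliced into encoder inputs (the history) and decoder targets (the steps to forecast). The function name `make_seq2seq_windows` and the parameters `enc_len` and `dec_len` are illustrative assumptions, not names taken from the solution code.

```python
import numpy as np

def make_seq2seq_windows(series, enc_len, dec_len):
    """Slice one series into (encoder input, decoder target) pairs.

    Hypothetical helper: each window uses `enc_len` past steps as the
    encoder input and the following `dec_len` steps as the decoder target.
    """
    series = np.asarray(series, dtype=np.float32)
    n_windows = len(series) - enc_len - dec_len + 1
    if n_windows <= 0:
        raise ValueError("series is shorter than enc_len + dec_len")
    enc = np.stack([series[i : i + enc_len] for i in range(n_windows)])
    dec = np.stack(
        [series[i + enc_len : i + enc_len + dec_len] for i in range(n_windows)]
    )
    return enc, dec

# Toy series 0..9: 4 steps of history, 2 steps to forecast.
enc, dec = make_seq2seq_windows(np.arange(10), enc_len=4, dec_len=2)
print(enc.shape, dec.shape)
```

Each row of `enc` plays the role of the source sentence in translation, and the matching row of `dec` plays the role of the target sentence.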

The solution code can be found in my GitHub repo. The model implementation is inspired by the PyTorch seq2seq translation tutorial, and the time-series forecasting ideas came mainly from the winning solution of a similar Kaggle competition.

gautham20/pytorch-ts: Tutorials on using encoder-decoder architecture for time series forecasting

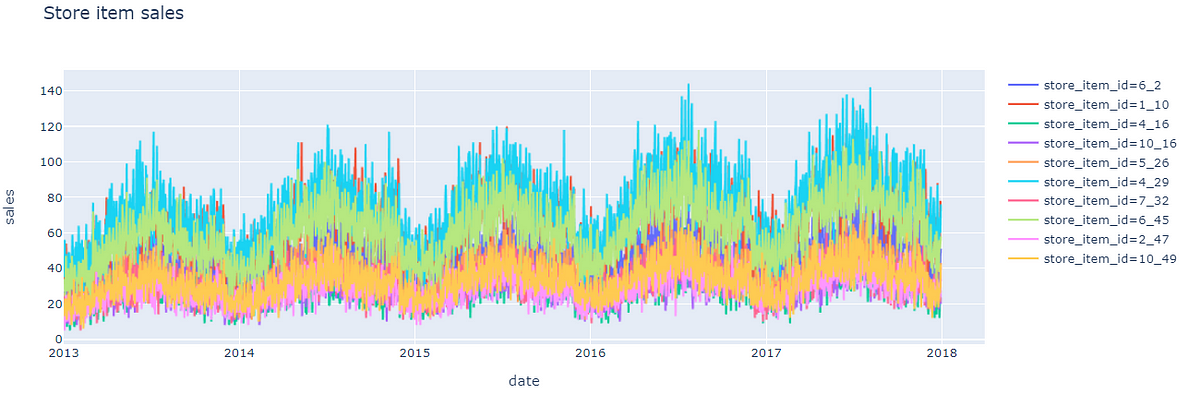

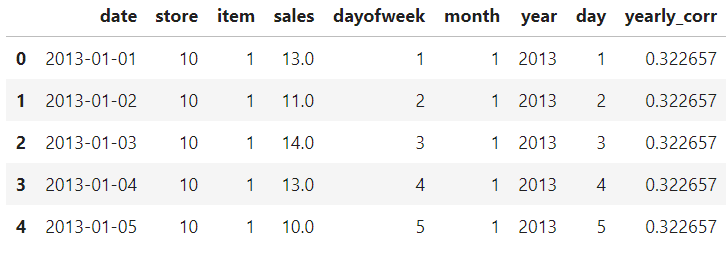

The dataset comes from a past Kaggle competition, the Store Item Demand Forecasting Challenge. Given the past 5 years of sales data (2013 to 2017) for 50 items from 10 different stores, the task is to predict the sales of each item over the next 3 months (01/01/2018 to 31/03/2018). This is a multi-step, multi-site time series forecasting problem.

The features provided are quite minimal:

There are 500 unique store-item combinations, meaning that we are forecasting 500 time-series.
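As a rough illustration of that count, the sketch below builds a synthetic stand-in for the training data and reshapes it to one row per series. The column names `date`, `store`, `item` and `sales` are assumptions, and the sales values are random placeholders rather than competition data.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the training file (column names are assumed).
dates = pd.date_range("2013-01-01", "2017-12-31", freq="D")
index = pd.MultiIndex.from_product(
    [dates, range(1, 11), range(1, 51)], names=["date", "store", "item"]
)
rng = np.random.default_rng(0)
df = pd.DataFrame(
    {"sales": rng.poisson(20, size=len(index))}, index=index
).reset_index()

# Number of distinct (store, item) pairs, i.e. the number of series.
n_series = df.groupby(["store", "item"]).ngroups

# Wide layout: one row per series, one column per day.
wide = df.set_index(["store", "item", "date"])["sales"].unstack("date")
print(n_series, wide.shape)
```

A wide layout like this makes it straightforward to slice the same date window across all series at once.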

Data Preprocessing

Feature Engineering

Deep learning models are good at uncovering features on their own, so feature engineering can be kept to a minimum.

The plot shows that the data has weekly and monthly seasonality and a yearly trend. To capture these, DateTime features are provided to the model. To better capture the yearly trend of each item's sales, yearly autocorrelation is also provided.
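The features described above could be derived along these lines. This is a sketch under assumptions: the helper names, the `date` and `sales` column names, and the use of a 365-day lag for the yearly autocorrelation are illustrative choices, not necessarily what the solution code does.

```python
import numpy as np
import pandas as pd

def add_datetime_features(df, date_col="date"):
    """Add calendar features (hypothetical helper, assumed column name)."""
    out = df.copy()
    d = out[date_col].dt
    out["day_of_week"] = d.dayofweek   # Monday=0 ... Sunday=6
    out["day_of_month"] = d.day
    out["month"] = d.month
    out["year"] = d.year
    return out

def yearly_autocorr(sales, lag=365):
    """Pearson correlation between a series and itself shifted by `lag` days."""
    return pd.Series(np.asarray(sales, dtype="float64")).autocorr(lag=lag)

# Toy series with a clean yearly cycle, used only to exercise the helpers.
dates = pd.date_range("2013-01-01", periods=3 * 365, freq="D")
toy = pd.DataFrame({
    "date": dates,
    "sales": 50 + 10 * np.sin(2 * np.pi * np.arange(len(dates)) / 365),
})
features = add_datetime_features(toy)
toy_autocorr = yearly_autocorr(toy["sales"])
print(features.head())
print(toy_autocorr)
```

In the 500-series setting, the autocorrelation would be computed once per store-item series and attached as a static feature of that series.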

Many of these features are cyclical in nature: as raw integers, December (12) and January (1) look far apart even though they are adjacent. To give the model this information, sine and cosine transformations are applied to the DateTime features. A detailed explanation of why this is beneficial can be found in the post "Encoding cyclical continuous features - 24-hour time".
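A minimal sketch of the sine and cosine transformation follows. The function name `cyclical_encode` and the choice to index months from 0 to 11 are assumptions made for illustration.

```python
import numpy as np

def cyclical_encode(values, period):
    """Map a cyclical feature onto the unit circle as (sin, cos)."""
    angle = 2 * np.pi * np.asarray(values, dtype=np.float64) / period
    return np.sin(angle), np.cos(angle)

# Months indexed 0..11 (January=0, December=11).
month_sin, month_cos = cyclical_encode(np.arange(12), period=12)

# Distance between adjacent months in the encoded space.
dec_to_jan = np.hypot(month_sin[11] - month_sin[0], month_cos[11] - month_cos[0])
jan_to_feb = np.hypot(month_sin[1] - month_sin[0], month_cos[1] - month_cos[0])
print(dec_to_jan, jan_to_feb)
```

After encoding, December is as close to January as January is to February, which the raw month numbers cannot express. Both components are needed because either one alone maps two different months to the same value.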
