Argo Workflows is an open source, container-native workflow engine for orchestrating parallel jobs on Kubernetes (K8s). It is implemented as a K8s CRD (Custom Resource Definition). As a result, Argo workflows can be managed using _kubectl_ and integrate natively with other K8s services such as volumes, secrets, and RBAC. Each step in an Argo workflow is defined as a container.

- Define workflows where each step in the workflow is a container.

- Model multi-step workflows as a sequence of tasks or capture the dependencies between tasks using a graph (DAG).

- Easily run compute-intensive jobs for ML or data processing in a fraction of the time using Argo Workflows on K8s.

- Run CI/CD pipelines natively on K8s without configuring complex software development products.

You can check that the workflow resource type is registered and list all workflows with:

kubectl api-resources | grep workflow

kubectl get workflow # or just `kubectl get wf`

Argo CLI

Get a list of all argo commands and flags with:

argo --help

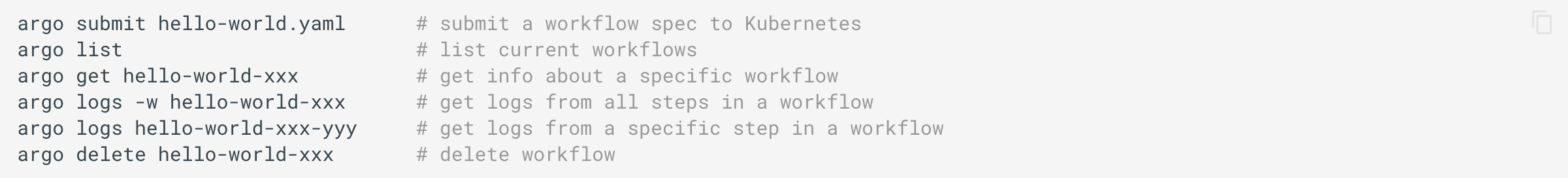

Here’s a quick overview of the most useful argo command line interface (CLI) commands.

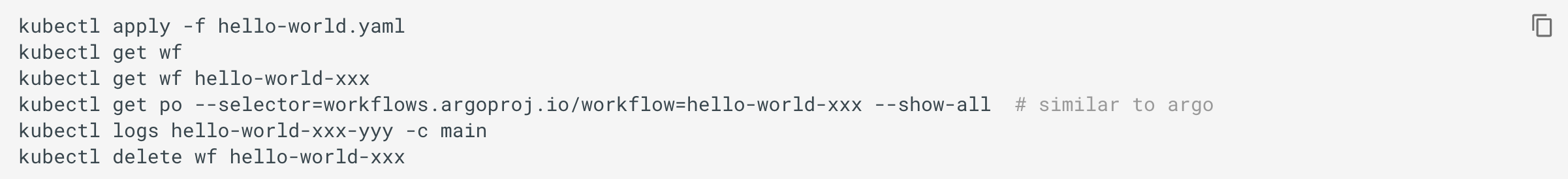

You can also run workflow specs directly using kubectl, but the Argo CLI offers syntax checking and nicer output, and requires less typing.

Deploying Applications

First, install the Argo controller:

kubectl create namespace argo

kubectl apply -n argo -f https://raw.githubusercontent.com/argoproj/argo/stable/manifests/install.yaml

The examples below assume you’ve installed Argo in the argo namespace. If you have not, adjust the commands accordingly.

NOTE: On GKE, you may need to grant your account the ability to create new clusterroles:

kubectl create clusterrolebinding YOURNAME-cluster-admin-binding --clusterrole=cluster-admin --user=YOUREMAIL@gmail.com

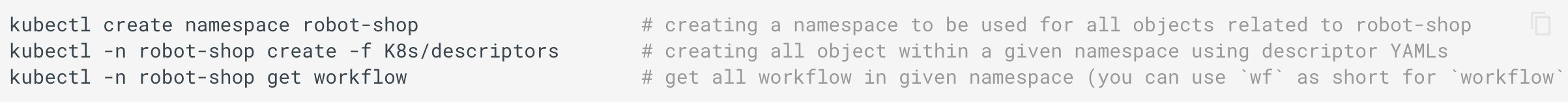

Consider the sample microservice application from Instana, whose source code repository is named robot-shop.

It can be deployed as easily as:

Get a list of the application Pods and wait for all of them to finish starting up:

# watch Pods as they are created within the given namespace

kubectl get pods -n robot-shop -w

Once all the pods are up, you can access the application in a browser using the public IP of one of your Kubernetes servers and port 30080:

echo http://${kube_server_public_ip}:30080

Argo Workflow Specs

For a complete description of the Argo workflow spec, refer to the spec definitions and check the Argo Workflow Examples.

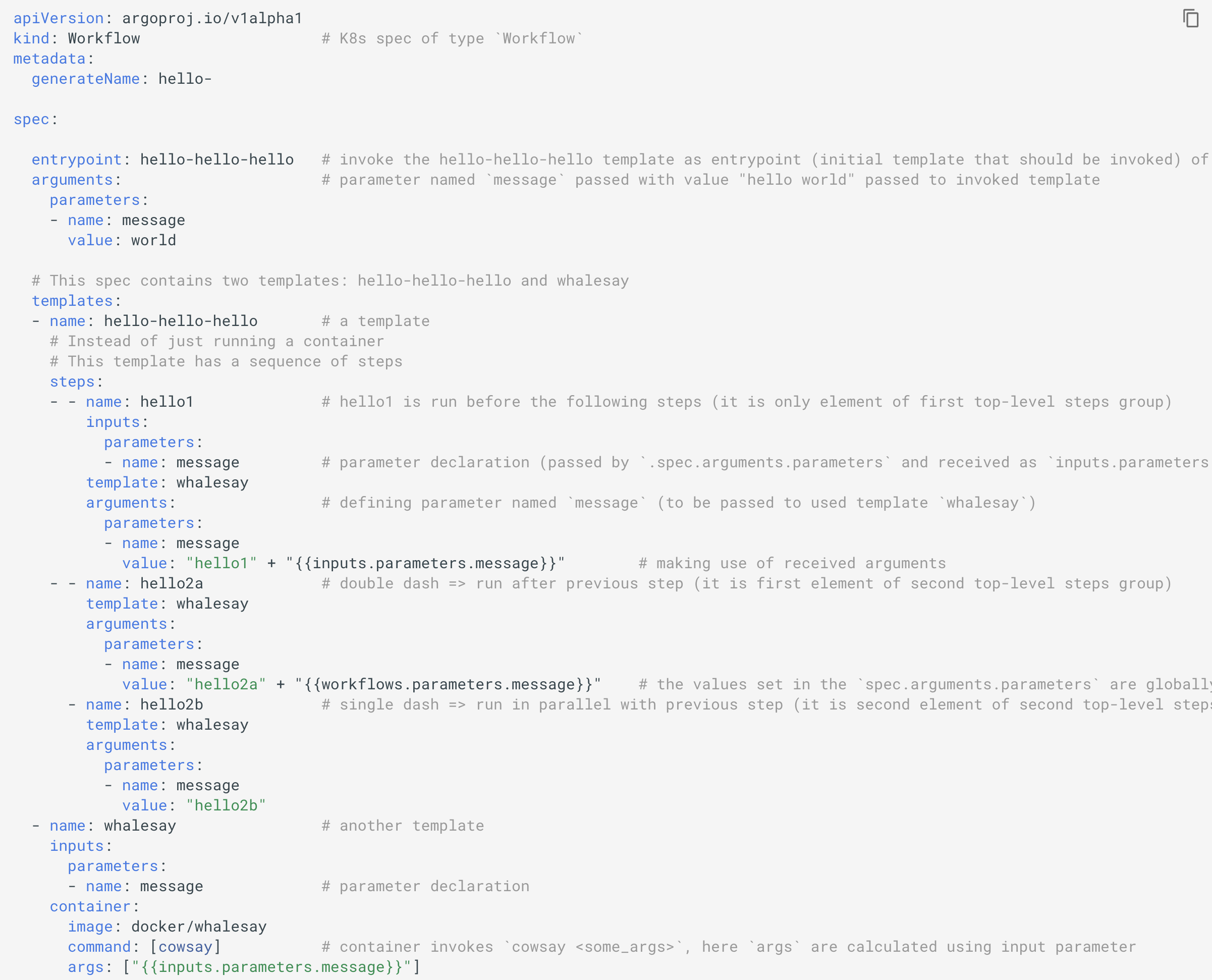

Argo adds a new _kind_ of K8s spec called a _Workflow_. The _entrypoint_ specifies the initial template that is invoked when the workflow spec is executed by K8s. The entrypoint template generally contains _steps_, which make use of other templates. Also, each template that is _container_ based or _steps_ based (as opposed to _script_ based) can have _initContainers_.
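As a minimal sketch of how these pieces fit together, the manifest below defines a _Workflow_ whose entrypoint is a _steps_ template that calls a _container_ template with an init container. The template names (`main`, `whalesay`, `prepare`), the `hello-world-` prefix, and the images are illustrative assumptions, not taken from the spec shown in the image above.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow                      # the new kind added by the Argo CRD
metadata:
  generateName: hello-world-        # hypothetical prefix; a random suffix is appended
spec:
  entrypoint: main                  # the template invoked first
  templates:
    # steps-based template: only references other templates
    - name: main
      steps:
        - - name: say-hello
            template: whalesay
    # container-based template: the actual work runs in this container
    - name: whalesay
      initContainers:
        - name: prepare             # runs to completion before the main container starts
          image: alpine:3.19
          command: [sh, -c]
          args: ["echo preparing"]
      container:
        image: docker/whalesay
        command: [cowsay]
        args: ["hello world"]
```

Assuming this is saved as a file such as `hello-world.yaml`, it could be submitted with `argo submit -n argo hello-world.yaml`.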

**The _kubectl apply_ issue with the _generateName_ field of a _Workflow_:**

The Argo CLI can submit the same workflow any number of times, and each time the workflow gets a unique identifier appended to its name (generated from `generateName`). But if you use `kubectl apply -f` to apply an Argo workflow, it raises a `resource name may not be empty` error, because `kubectl apply` needs a fixed name to identify the object it manages. As a workaround, you can use one of the following:

- `kubectl create` instead of `kubectl apply`, or

- the Argo CLI instead of `kubectl apply`, or

- a `name` field containing a unique identifier instead of the `generateName` field with a generic name
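To make the difference concrete, here is a sketch of the same workflow written with a fixed `name`, which is the form `kubectl apply -f` accepts. The name `hello-world-run-001` and the `whalesay` template are hypothetical placeholders; any value that is unique within the namespace would do.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  # Fixed, unique name: accepted by `kubectl apply -f`.
  name: hello-world-run-001
  # The generic alternative below works with `kubectl create -f` and the
  # Argo CLI, but `kubectl apply -f` rejects it because no name is set:
  # generateName: hello-world-
spec:
  entrypoint: whalesay
  templates:
    - name: whalesay
      container:
        image: docker/whalesay
        command: [cowsay]
        args: ["hello world"]
```

The trade-off is that a fixed name has to be changed by hand (or by a script) for every new run, whereas `generateName` lets the API server pick the suffix.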

Argo Steps

Let’s look at the workflow spec below:

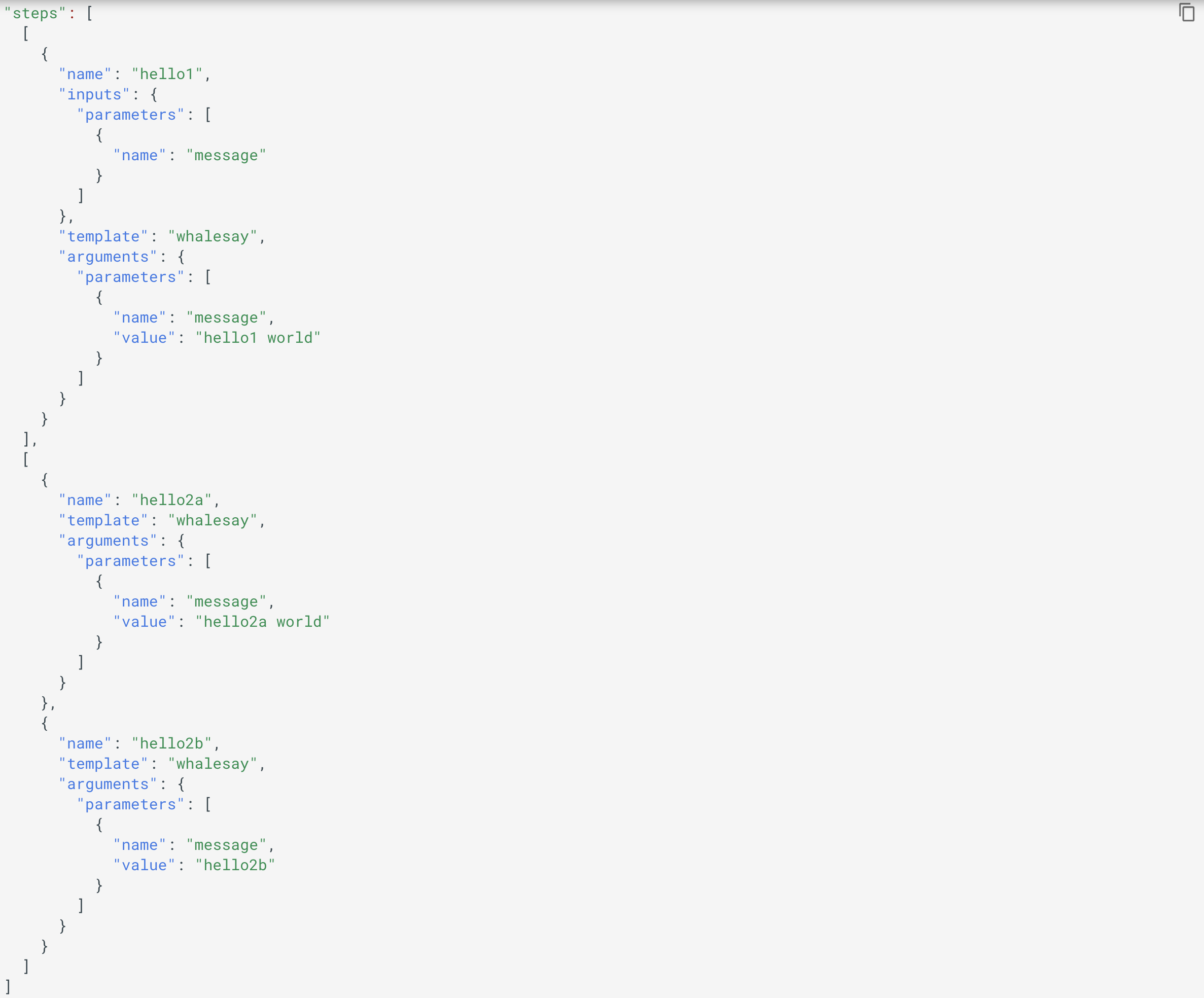

Here is what **_steps_** look like in JSON:

This shows that the top-level elements in _steps_ must be groups of steps, which run sequentially, whereas the individual steps within each group run in parallel.
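The nesting is easier to see in YAML, where each outer list item (`- -`) opens a new group and each inner item (`-`) adds a step to that group. The following is a sketch under assumed names (`step-1`, `step-2a`, `step-2b`, and an `echo` template); it is not the spec from the image above.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: steps-              # hypothetical prefix
spec:
  entrypoint: main
  templates:
    - name: main
      steps:
        # Group 1: a single step, runs first
        - - name: step-1
            template: echo
            arguments:
              parameters:
                - name: message
                  value: "step 1"
        # Group 2: starts only after group 1 finishes;
        # step-2a and step-2b run in parallel with each other
        - - name: step-2a
            template: echo
            arguments:
              parameters:
                - name: message
                  value: "step 2a"
          - name: step-2b
            template: echo
            arguments:
              parameters:
                - name: message
                  value: "step 2b"
    # Reusable container template that prints its input parameter
    - name: echo
      inputs:
        parameters:
          - name: message
      container:
        image: alpine:3.19
        command: [echo]
        args: ["{{inputs.parameters.message}}"]
```

Converted to JSON, `steps` becomes an array of arrays: the outer array holds the sequential groups and each inner array holds the steps that run together.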
