The ability to scale large data analyses is rapidly growing in importance in data science and machine learning. Fortunately, tools like Dask and Coiled make it easy and fast to do just that.

Dask is a popular solution for scaling up analyses in Python and the PyData ecosystem. Because Dask is designed to parallelize any PyData library, it works seamlessly with the host of PyData tools.

When you start working with a large dataset, the first step is to scale up your analysis to use all the cores of a single workstation.

Next, you might need to scale out your computation to leverage a cluster in the cloud (AWS, Azure, Google Cloud Platform, and so on).

Read on and we will:

- Use pandas to showcase a common pattern in data science workflows,

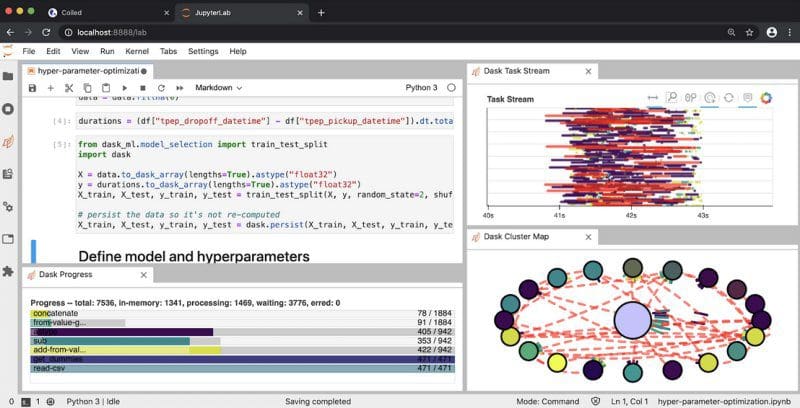

- Use Dask to scale up workflows, harnessing the cores of a single workstation, and

- Demonstrate scaling out our workflow to the cloud with Coiled Cloud.

Find all the code here on GitHub.

Note: Before you get started, it's important to consider whether scaling your computation is actually necessary. Try making your pandas code more efficient before you jump in. With machine learning, you can check whether more data is likely to improve your model by plotting learning curves before you begin.
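To make the learning-curve check concrete, here is a small sketch using scikit-learn's `learning_curve` helper. The synthetic dataset and the choice of logistic regression are assumptions made purely for illustration; substitute your own data and estimator. The sketch prints the scores instead of plotting them, but the same arrays can be passed to any plotting library.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import learning_curve

# Synthetic stand-in for a real dataset (an assumption for this sketch).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# Train on growing fractions of the data, with 5-fold cross-validation.
train_sizes, train_scores, val_scores = learning_curve(
    LogisticRegression(max_iter=1000),
    X,
    y,
    train_sizes=np.linspace(0.1, 1.0, 5),
    cv=5,
)

# Average the validation score across folds for each training size.
mean_val_scores = val_scores.mean(axis=1)

for size, score in zip(train_sizes, mean_val_scores):
    print(f"{size:4d} training samples -> mean validation accuracy {score:.3f}")
```

If the validation score has already flattened out at the largest training size, adding more data (and the infrastructure to process it) may not be worth the effort.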
