Explainability is certainly one of the hottest topics in AI. As investment in the field grows and the solutions become increasingly effective, some businesses have found that they are not able to leverage AI at all! And why? Simple: a lot of these models are considered to be “black boxes” (you’ve probably already come across this term), which means that there is no straightforward way to explain the outcome of a certain algorithm, at least in terms that we are able to understand.


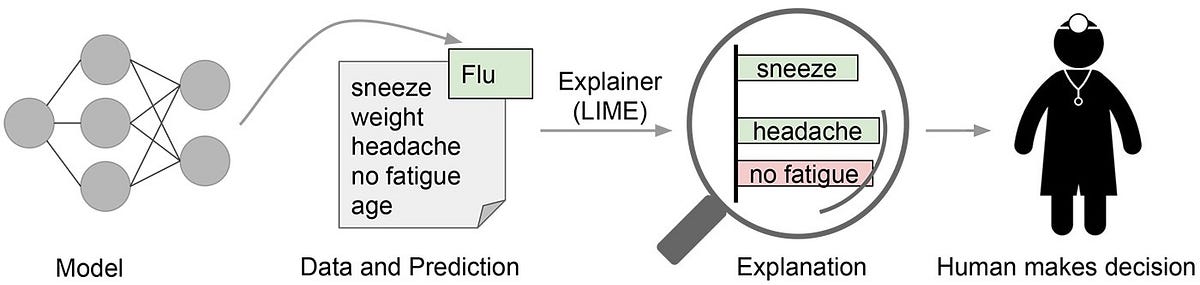

**The importance of explaining to a human the decisions made by black-box models.** Source

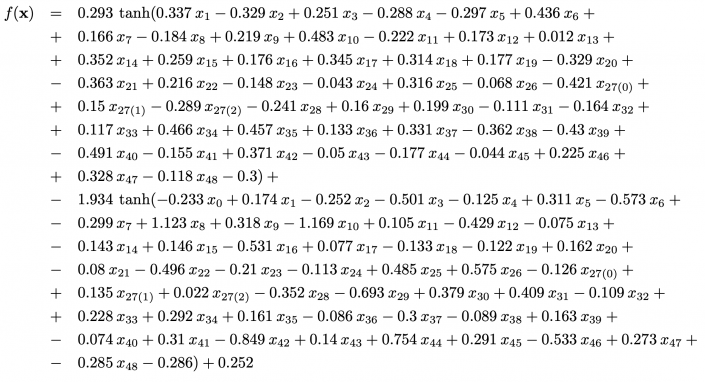

In the picture we can see a complex mathematical expression with a lot of operations chained together. It represents how the inner layers of a neural network work. Seems too complex to be understandable, right?

**Chained mathematical expressions from a neural network, via** Rulex
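To get a feel for what "operations chained together" means, here is a minimal sketch of a tiny two-layer network in plain Python. The weights, the layer sizes, and the function names `forward` and `forward_chained` are all made up for illustration; they are not taken from the network in the picture.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical weights and biases, chosen only for illustration.
W1 = [[0.5, -1.2], [0.8, 0.3]]  # hidden layer: 2 inputs -> 2 units
B1 = [0.1, -0.4]
W2 = [1.5, -0.7]                # output layer: 2 units -> 1 output
B2 = 0.2


def forward(x1, x2):
    """Layer-by-layer version: still somewhat readable."""
    h1 = sigmoid(W1[0][0] * x1 + W1[0][1] * x2 + B1[0])
    h2 = sigmoid(W1[1][0] * x1 + W1[1][1] * x2 + B1[1])
    return sigmoid(W2[0] * h1 + W2[1] * h2 + B2)


def forward_chained(x1, x2):
    """The same computation written as one chained expression."""
    return sigmoid(
        1.5 * sigmoid(0.5 * x1 - 1.2 * x2 + 0.1)
        - 0.7 * sigmoid(0.8 * x1 + 0.3 * x2 - 0.4)
        + 0.2
    )


print(forward(1.0, 2.0), forward_chained(1.0, 2.0))
```

Even with only two hidden units, the single-expression form says very little about why a given input leads to a given output. Real networks chain thousands or millions of these operations.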

What if I told you that the expressions below refer to the same neural network as the image above? Much easier to understand, right?
