Motivation

Docker and Docker Compose are great utilities that support the microservice paradigm by allowing efficient containerization. Within the Python ecosystem, the package manager Conda offers a lighter form of isolation: environments, each with its own interpreter and set of packages. Conda environments are especially handy for data scientists whose Jupyter notebooks have different (and sometimes mutually incompatible) package dependencies.

However, Conda environments are normally activated through shell initialization scripts, and the non-interactive shells Docker uses do not run those scripts by default. As a result, getting Conda environments to work out of the box in Docker is not straightforward. On top of that, registering each environment with Jupyter as a kernel (via a kernelspec) is a very useful but fiddly extra step. This article explains, clearly and concisely, how to set up Docker containers running Jupyter with multiple kernels.

To get the most out of this article, you should already know the basics of Docker, Conda and Jupyter. If you don't, all three have excellent tutorials on their official websites.

Introduction

I like Docker for data science and machine learning research because it makes it very easy to containerize my whole research setup and move it between the various machines and cloud services I use (my laptop, my desktop, GCP, AWS and a bare-bones cloud we maintain).

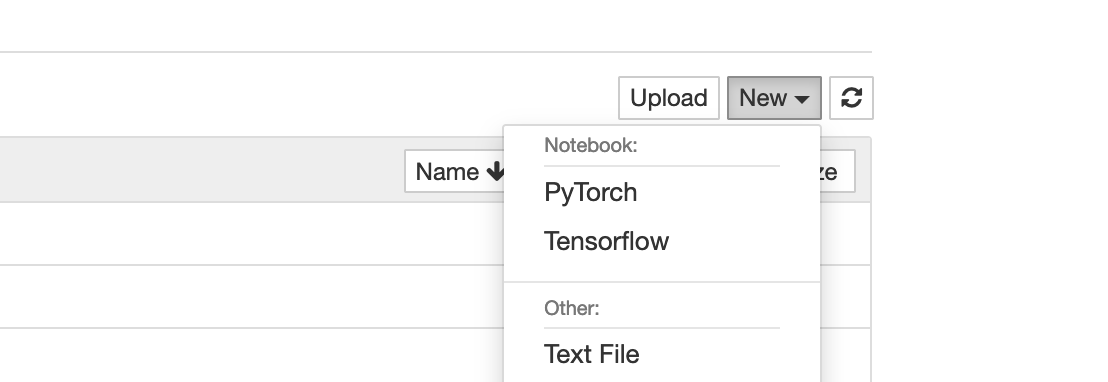

In data science and machine learning, you often need several Conda environments, each with its own Jupyter kernel: for instance, when working with both Python 2 and Python 3 code, or when transitioning from TensorFlow 1 to TensorFlow 2.
