Recently, we migrated a fairly large on-premises Oracle Database to Amazon Aurora PostgreSQL. To start off heterogeneous migrations between different database platforms are never easy. Coupling this with migrating the database to the cloud definitely adds on to the challenge.

The intent of this article is to share the migration methodology and highlight a few issues and pitfalls that came our way.

About the on-premises Oracle Database

Database Edition: Oracle Enterprise Edition 12

Size of Data: 1.6 TB

Largest Table Size: 9 billion rows with over 2000 partitions and sub-partitions

Migration Methodology

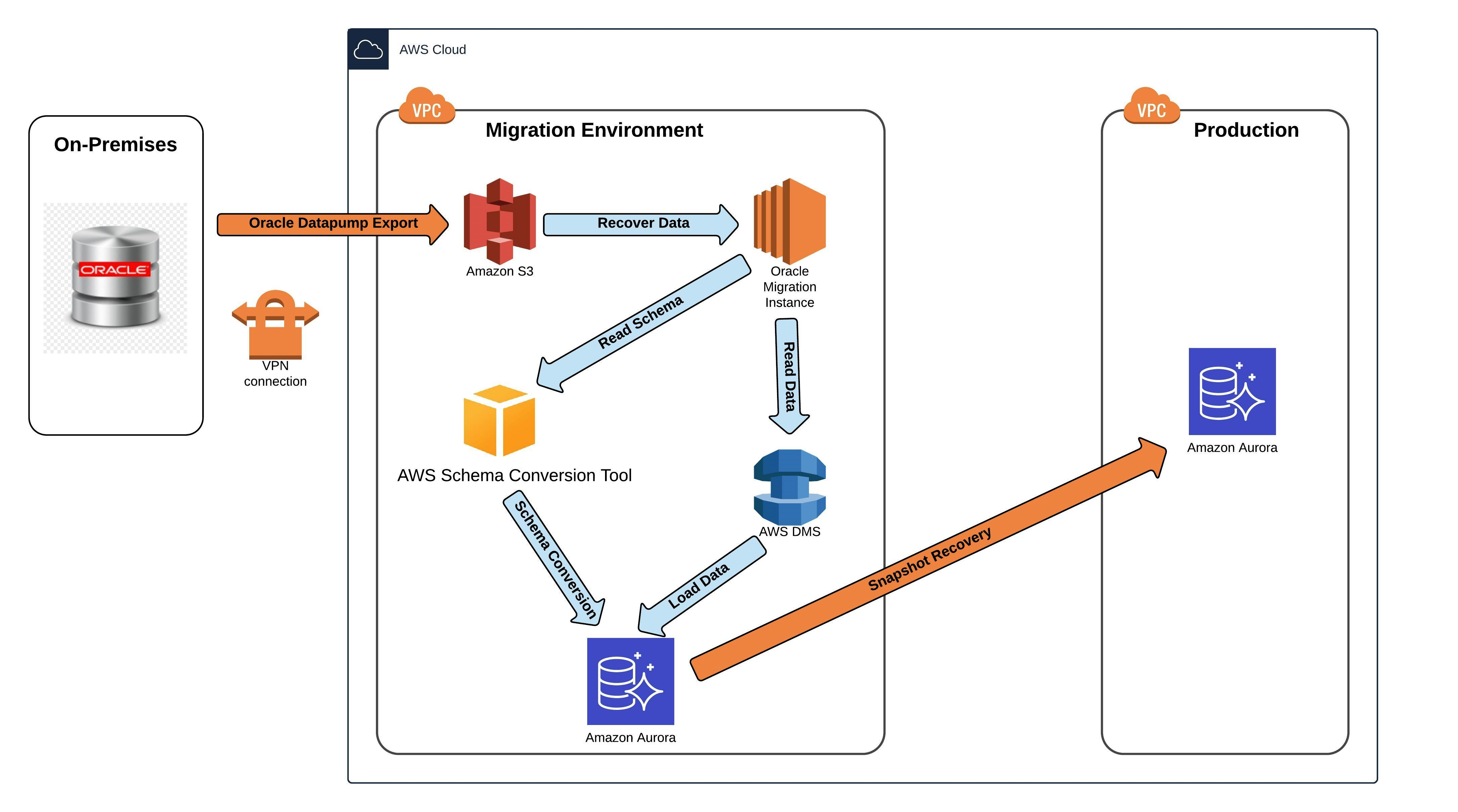

(Image by author)

Since the size of the data was fairly large we decided against the idea of having AWS DMS fetch source data from the on-premises location using a VPN connection. Instead, we opted for a temporary Oracle instance on Amazon EC2. Without a doubt that would make a lot of sense for performance reasons.

Data Recovery

- The client sent us an Oracle Data pump full database export (expdp) created on-premises and copied thedump files to Amazon S3. Dumps were created using** FILESIZE=64G**

- Once the dumps files got copied to Amazon S3 we recovered the files on a temporary Oracle migration instance. For this purpose we spun up a m5a.8xlarge RHEL 7.6 instance on AWS.

- After data recovery is complete we validated row counts between the on-premises Oracle database vs. Oracle migration database.

Schema Conversion

We used the AWS Schema Conversion Tool for the heterogeneous database migration. The tool worked pretty seamlessly. Any objects that could not be converted are clearly marked so that they can be manually converted to complete the migration. Assuming you do not have a lot of stored procedures, functions and packages you can expect that the AWS Schema Conversion Tool will convert the majority of your schema objects.

Some lessons learnt…

- Trigger code in Oracle got migrated a bit differently. The trigger code was converted to a PostgreSQL function which was in turn called from the trigger in PostgreSQL.

- _number _data types in Oracle got converted to _double_precis_ion in PostgreSQL. Under normal circumstances that should not be a issue. However we did encounter a data truncation issue during the data migration step. We will talk about this in more detail later in the article.

- If there is a reserved word in the column name the name gets enclosed in double quotes like “PRECISION”. Unfortunately, there is no easy way to flag these columns and fix them beforehand. Therefore it is a good idea to create a script and validate your column names against the PostgreSQL reserved words list. An easy way to get the reserved word list is using the SQL below:

- SELECT * FROM pg_get_keywords()

#amazon-web-services #data #aws #postgres #oracle #data analysisa