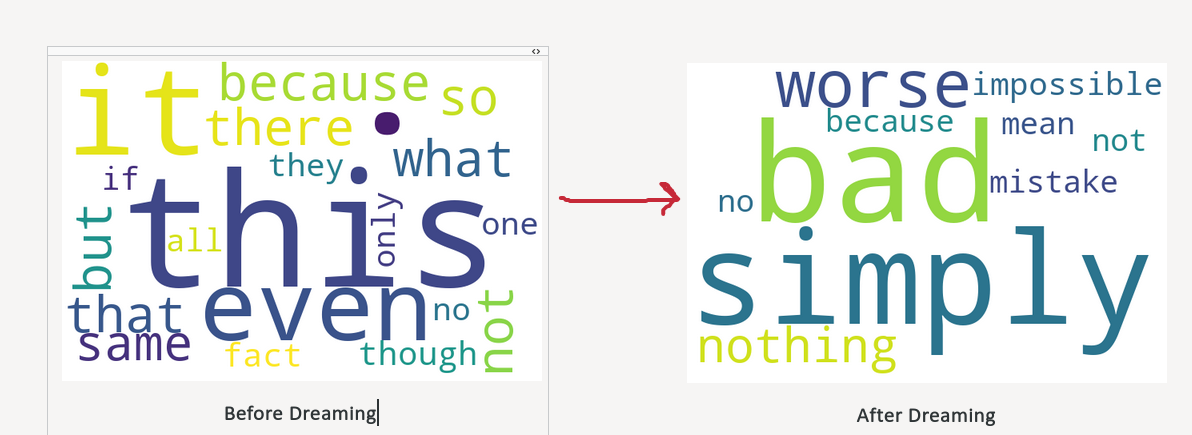

“DeepDream is an experiment that visualizes the patterns learned by a neural network. Similar to when a child watches clouds and tries to interpret random shapes, DeepDream over-interprets and enhances the patterns it sees in an image.

It does so by forwarding an image through the network, then calculating the gradient of the image with respect to the activations of a particular layer. The image is then modified to increase these activations, enhancing the patterns seen by the network, and resulting in a dream-like image. This process was dubbed “Inceptionism” (a reference to InceptionNet, and the movie Inception).”
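Stated as an update rule, the loop in the quote is gradient ascent on the input image. This is a hedged sketch, and the symbols are illustrative rather than taken from the tutorial. Let $I$ be the input image, $a(I)$ the activations of the chosen layer after the forward pass, $\eta$ a step size, and $\mathcal{L}(I)$ a scalar score of those activations. One common choice, assumed here, is their squared L2 norm; the mean activation is another. Each step is then:

$$
\mathcal{L}(I) = \lVert a(I) \rVert_2^2, \qquad I \leftarrow I + \eta\, \nabla_I \mathcal{L}(I)
$$

The gradient is taken of the score with respect to the image. The plus sign makes this ascent rather than descent, so whatever patterns raise $a(I)$ get amplified in $I$.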

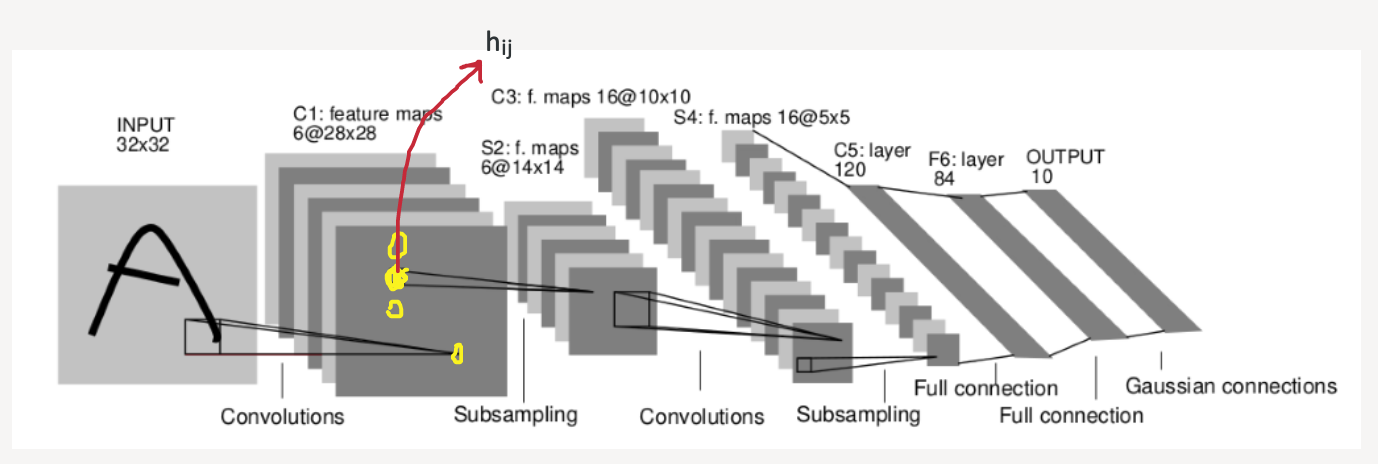

Let me break it down for you. Consider a Convolutional Neural Network.

Suppose we want to see what happens when we increase the activation $h_{i,j}$ of the highlighted neuron, and we want to carry that increase back onto the input image, i.e. change the image so that this activation goes up.

In other words, we optimize the image so that neuron $h_{i,j}$ fires more strongly.

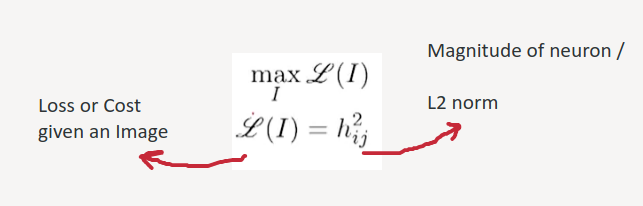

We can pose this optimization problem as:

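$$\max_{I}\; \lVert h_{i,j}(I) \rVert_2^2$$

where $I$ is the input image and $h_{i,j}(I)$ is the neuron's activation when $I$ is forwarded through the network.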

That is, we maximize the squared norm (loosely, the magnitude) of $h_{i,j}$ by changing the image.
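To make the objective concrete, here is a minimal pure-Python sketch. It avoids PyTorch so it has no dependencies. It assumes a single hypothetical 3×3 convolution filter `weights` whose output at position (i, j) is the neuron $h_{i,j}$. The helper names `neuron`, `grad_squared` and `dream`, the step size, and the iteration count are illustrative choices, not the author's code. Because $h_{i,j}$ is a scalar here, its squared norm is just $h_{i,j}^2$. Its gradient with respect to pixel $I_{r,c}$ is $2\,h_{i,j}\,w_{r-i,\,c-j}$ inside the neuron's receptive field and zero outside it.

```python
# Minimal sketch (assumption): a single 3x3 convolution filter whose output at
# position (i, j) is the neuron h_{i,j}. Images are nested lists of floats.
# All names here are hypothetical, chosen for illustration.


def neuron(image, weights, i, j):
    """Activation h_{i,j}: the filter applied to the patch whose top-left corner is (i, j)."""
    k = len(weights)
    return sum(weights[p][q] * image[i + p][j + q]
               for p in range(k) for q in range(k))


def grad_squared(image, weights, i, j):
    """Gradient of h_{i,j}**2 with respect to every pixel of the image.

    d(h^2)/dI[r][c] = 2 * h * w[r - i][c - j] inside the receptive field, 0 outside.
    """
    h = neuron(image, weights, i, j)
    k = len(weights)
    grad = [[0.0] * len(row) for row in image]
    for p in range(k):
        for q in range(k):
            grad[i + p][j + q] = 2.0 * h * weights[p][q]
    return grad


def dream(image, weights, i, j, step=0.01, iterations=20):
    """Gradient ascent on the image so that h_{i,j} fires more strongly."""
    img = [row[:] for row in image]  # work on a copy; the input is left untouched
    for _ in range(iterations):
        g = grad_squared(img, weights, i, j)
        # Move every pixel a small step along the gradient (ascent, hence "+").
        img = [[px + step * gx for px, gx in zip(row, g_row)]
               for row, g_row in zip(img, g)]
    return img


# Usage: a vertical-edge filter on a 5x5 ramp image, neuron at (1, 1).
weights = [[-1.0, 0.0, 1.0],
           [-1.0, 0.0, 1.0],
           [-1.0, 0.0, 1.0]]
image = [[0.1 * (r + c) for c in range(5)] for r in range(5)]
dreamed = dream(image, weights, 1, 1)
print("before:", neuron(image, weights, 1, 1))
print("after: ", neuron(dreamed, weights, 1, 1))
```

Only pixels inside the 3×3 receptive field change, and pixels aligned with zero weights stay put. This is the sense in which increasing $h_{i,j}$ is reflected onto the input image. The toy has two caveats. First, the neuron is linear, so its activation grows geometrically without bound. Real networks add nonlinearities, and DeepDream implementations commonly normalize the gradient before each step. Second, if $h_{i,j}$ starts at exactly zero, the gradient of $h_{i,j}^2$ is zero and nothing moves.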

Here is what happens when we do this:
