For a long time we’ve (and by “we” I mean the industry) harped on test coverage. We’ve sold each other the idea that tests are the safety net developers need to code without fear. Because if the tests pass, your changes must be good and ready for production, right? Advocates of Test-Driven Development even say you should write the tests before the code. But what happens when the tests are wrong? When they’re buggy? When they don’t test what you think they do, or, worse, don’t test anything? In short, how do you test the tests?

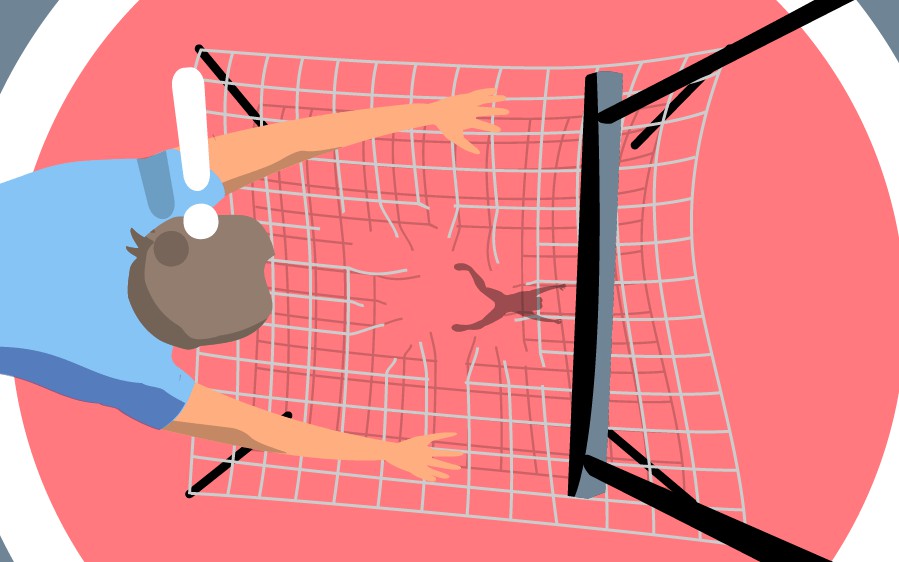

So far, the industry has focused on test quantity, i.e., coverage, without really talking about test quality. But the time to shift focus is coming. Because if tests really are a developer’s safety net, then shouldn’t they be treated like safety gear? And once you think of them as safety gear, it becomes obvious that they should be inspected regularly. After all, firefighters inspect their gear after every fire, and climbers and acrobats inspect theirs before every use.

So when’s the last time you inspected a test? And by “inspected”, I don’t mean “ran”. Running the tests is an inspection of your code, not the tests themselves. If you’re like me, it was just before you committed the last change to it. If your commit got peer review, then you were lucky enough to get a second pair of eyes on that test. But after you committed the changes, I’m guessing it was entirely “out of sight, out of mind.”

That’s a real pity when you consider some of the things that can go wrong with a test. Developers are prone to copy-paste errors when writing code, and we may be even more prone to them when writing tests.
