Are word embeddings always the best choice?

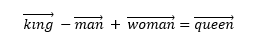

If you can challenge a well-accepted view in data science with data, that’s pretty cool, right? After all, “in data we trust”, or so we profess! Word embeddings have revolutionized natural language processing, bringing us much closer to capturing the meaning and context of text and transcribed speech. They are a world apart from the good old bag-of-words (BoW) models, which rely on word frequencies under the unrealistic assumption that each word occurs independently of all others. Word embeddings, which represent every word as a vector, have produced results that are nothing short of spectacular. One of the most often cited success stories involves subtracting the man vector from the king vector and adding the woman vector, which lands closest to the queen vector: king - man + woman ≈ queen.
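The king/queen arithmetic can be sketched with a handful of toy vectors. Everything below is an assumption made for illustration: the three-dimensional vectors are invented by hand (real embeddings are learned from text and usually have hundreds of dimensions), and `toy_vectors`, `cosine` and `analogy` are hypothetical names, not part of any library.

```python
import math

# Made-up 3-dimensional vectors, purely for illustration.
# The dimensions loosely stand for (royalty, maleness, femaleness).
toy_vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.1, 0.1],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c, vectors):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b and c."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

result = analogy("king", "man", "woman", toy_vectors)
print(result)
```

Note that the input words themselves are excluded from the candidates, and the answer is the nearest neighbor of the computed vector rather than an exact match.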

Very smart indeed! However, I question whether word embeddings should always be preferred to bag-of-words. While building a review-based recommender system, it dawned on me that although word embeddings are incredible, they may not be the most suitable technique for my purpose. As crazy as it may sound, I got better results with the BoW approach. In this article, I show that the very feature that makes word embeddings so smart, their ability to recognize related words, actually turns out to be a shortcoming when it comes to making better product recommendations.
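To make the BoW side of this comparison concrete, here is a minimal sketch of cosine similarity on raw word counts. It is not the recommender described in this article: the review snippets are invented, the tokenizer is a bare whitespace split, and `bag_of_words` and `cosine_similarity` are hypothetical helper names.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Lowercase, split on whitespace, and count each word."""
    return Counter(text.lower().split())

def cosine_similarity(a, b):
    """Cosine similarity between two word-count mappings."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical review snippets, invented for illustration.
review_a = bag_of_words("great battery life and great screen")
review_b = bag_of_words("great battery life but poor screen")
review_c = bag_of_words("excellent power duration")

sim_ab = cosine_similarity(review_a, review_b)  # several shared words
sim_ac = cosine_similarity(review_a, review_c)  # no shared words
print(round(sim_ab, 3), round(sim_ac, 3))
```

Because `review_a` and `review_c` share no words, their BoW similarity is exactly zero, even though a human would read them as related. An embedding-based measure would typically score that pair as similar. Whether that helps or hurts depends on the task, which is the question this article explores.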

Word embeddings in a jiffy

Simply stated, word embeddings consider each word in its context. For example, in the word2vec approach, a popular technique developed by Tomas Mikolov and colleagues at Google, we generate for each word a vector with a large number of dimensions. A neural network learns these vectors by predicting, for each word, what its neighboring words may be. Several Python libraries, such as spaCy and gensim, provide access to pre-trained word vectors, so although word embeddings have been criticized in the past on grounds of complexity, we don’t have to write the code from scratch. Unless you want to dig into the math of one-hot encoding, neural nets and other complex stuff, using word vectors today is as simple as using BoW. After all, you don’t need to know the theory of internal combustion engines to drive a car!
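For the curious, the idea of "predicting the neighboring words" can be illustrated without any neural network. The sketch below only builds the (word, neighbor) training pairs that a skip-gram style model would learn from; it does not train anything. The function name `skipgram_pairs`, the window size and the example sentence are assumptions made for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every word and its neighbors
    within `window` positions on either side."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

# Hypothetical example sentence.
sentence = "the food was really good".split()
pairs = skipgram_pairs(sentence, window=1)
for center, context in pairs:
    print(center, "->", context)
```

A model trained on such pairs is pushed to give similar vectors to words that appear in similar contexts, which is where the notion of "related words" comes from.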
