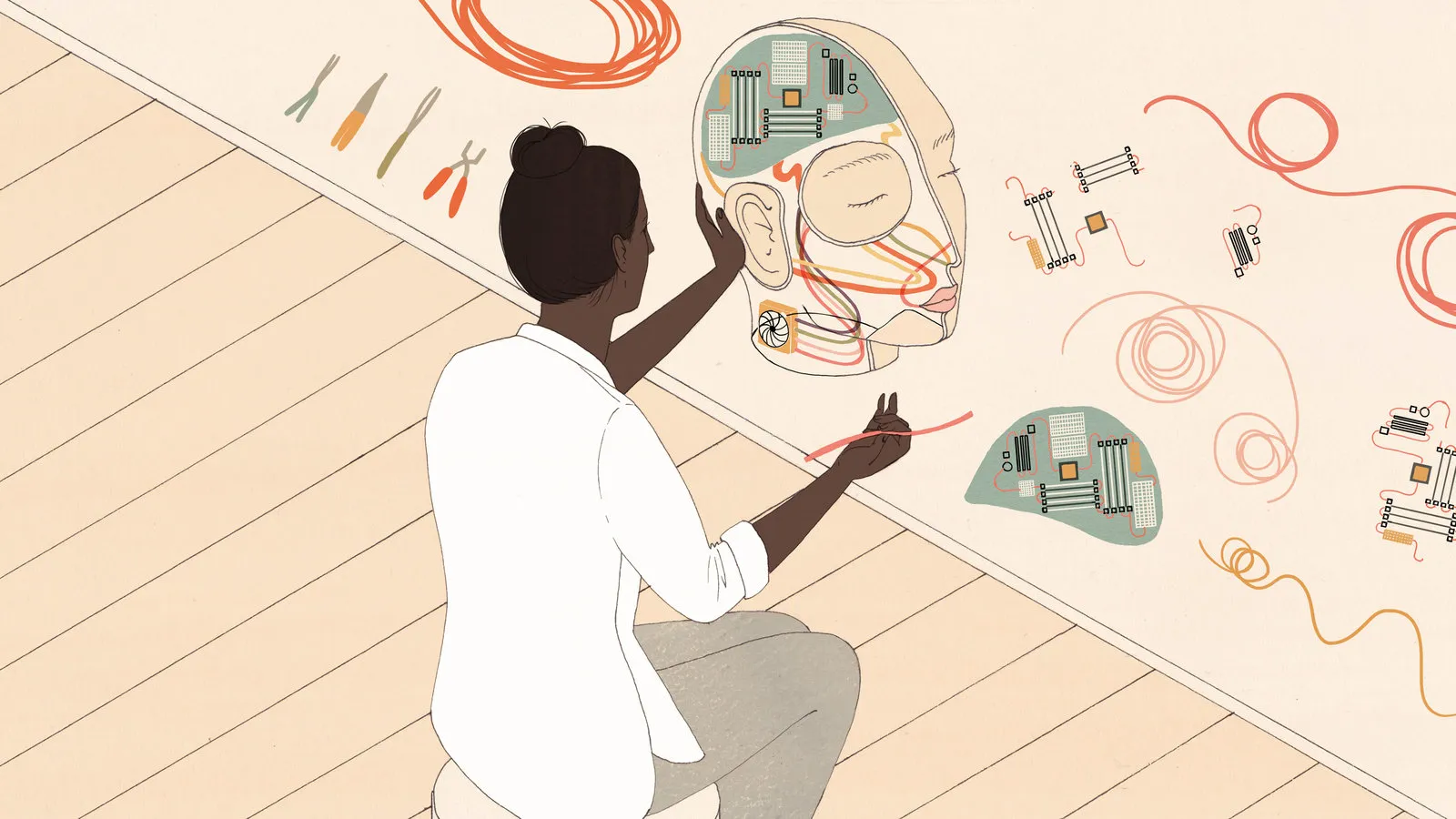

Artificial intelligence technologies like facial recognition, machine learning, and computer vision are driving some of the biggest innovations in the tech world today. But these AI tools have a major diversity problem.

Facial recognition programs consistently misidentify People of Color (POC). Earlier this year, Robert Julian-Borchak Williams, who is Black, was arrested for a crime he didn’t commit based on a false match from a facial recognition database. Self-driving cars reportedly have trouble identifying darker-skinned pedestrians, putting those pedestrians at risk of fatal injury. And bias is consistently baked into the artificial intelligence systems that increasingly make staffing, medical and lending decisions.

Fixing AI’s diversity problem is hard. In many cases, the problem comes down to data. Modern AI systems are often trained using copious amounts of data. If their underlying training data isn’t diverse, then the systems can’t be, either. This is especially problematic for fields like facial recognition. Most facial recognition datasets skew toward white males. According to the New York Times, one popular database is 75% male and 80% white.

This makeup likely reflects the biases of the databases’ creators. But it also reflects the fact that these databases are often built from public images from the Internet, where, as Wired puts it, “content skews white, male and Western”.
