TL;DR: Entropy is a measure of chaos, or unpredictability, in a system. Because it is much more dynamic than rigid metrics like accuracy or even mean squared error, optimizing algorithms from decision trees to deep neural networks with flavors of entropy has been shown to improve speed and performance.

Entropy appears everywhere in machine learning: from the construction of decision trees to the training of deep neural networks, it is an essential measurement.

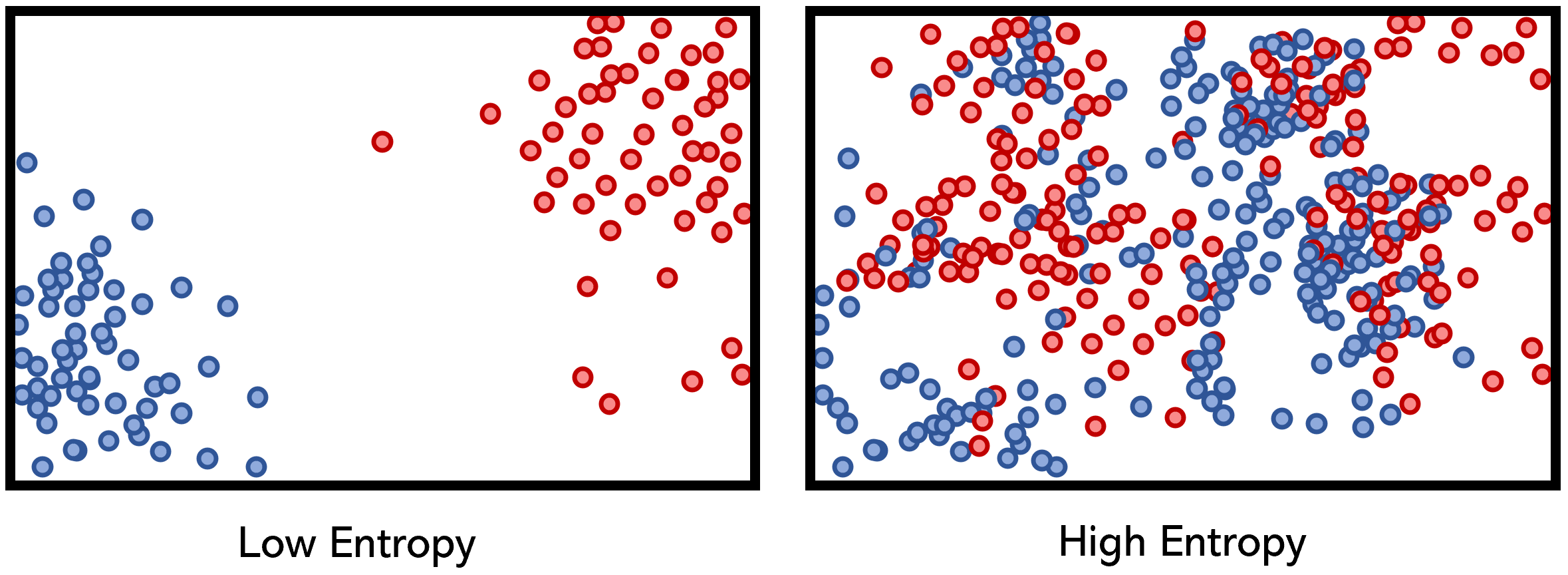

Entropy has roots in physics, where it is a measure of disorder, or unpredictability, in a system. For instance, consider two gases in a box: initially, the system has low entropy, in that the two gases are cleanly separable; after some time, however, the gases intermingle and the system’s entropy increases. In an isolated system, the entropy never decreases: the chaos never dims down without external force.

Consider, for example, a coin toss: suppose you toss a coin four times and the events come up [tails, heads, heads, tails]. If you (or a machine learning algorithm) were to predict the next coin flip, you would not be able to predict the outcome with any certainty; the system contains high entropy. On the other hand, a weighted coin with events [tails, tails, tails, tails] has very low entropy, and given the current information, we can almost definitively say that the next outcome will be tails.
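To put numbers on the two coin examples, here is a minimal sketch that computes Shannon entropy in bits, H = Σ p · log2(1/p), over the observed outcomes. The function name `shannon_entropy` and the variables `fair` and `weighted` are made up for this illustration, and treating four tosses as the full probability distribution is a simplifying assumption.

```python
import math
from collections import Counter

def shannon_entropy(outcomes):
    """Shannon entropy, in bits, of the empirical distribution of `outcomes`."""
    counts = Counter(outcomes)
    total = len(outcomes)
    # Each observed outcome has probability p = c / total and contributes p * log2(1 / p).
    return sum((c / total) * math.log2(total / c) for c in counts.values())

# The two hypothetical sequences from the text.
fair = ["tails", "heads", "heads", "tails"]
weighted = ["tails", "tails", "tails", "tails"]

print(shannon_entropy(fair))      # 1.0 bit: heads and tails are equally likely
print(shannon_entropy(weighted))  # 0.0 bits: the outcome is fully predictable
```

For a two-outcome system, 1 bit is the maximum possible entropy, so the first sequence is as unpredictable as a coin can be, while the second carries no uncertainty at all.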

Most scenarios applicable to data science fall somewhere between extremely high and perfectly low entropy. Information gain is the reduction in entropy that new knowledge provides, so a system left with high entropy has yielded low information gain, and one left with low entropy has yielded high information gain. Information gain can be thought of as the purity in a system: the amount of clean knowledge available in it.

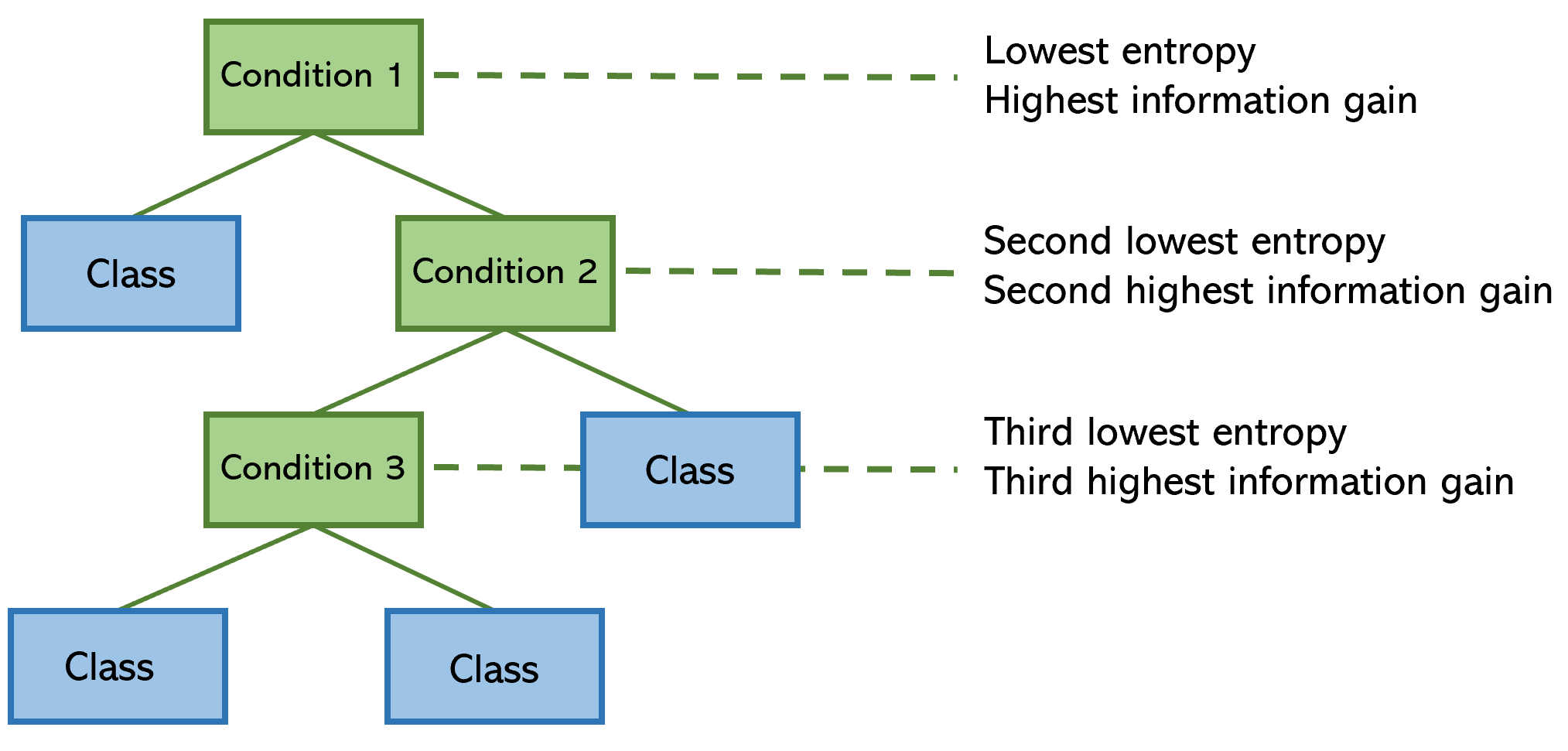

Decision trees use entropy in their construction: to direct inputs down a series of conditions to a correct outcome as effectively as possible, feature splits (conditions) with lower entropy (higher information gain) are placed higher on the tree.
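As a rough sketch of how a split can be scored, the snippet below computes information gain as the parent's entropy minus the size-weighted entropy of the two groups a split produces. The helper names `entropy` and `information_gain` and the red/blue toy labels are assumptions made for illustration, not the internals of any particular decision tree library.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy, in bits, of the class labels in one group."""
    total = len(labels)
    return sum((c / total) * math.log2(total / c) for c in Counter(labels).values())

def information_gain(parent, left, right):
    """Parent entropy minus the size-weighted entropy of the two child groups."""
    n = len(parent)
    weighted_children = (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)
    return entropy(parent) - weighted_children

# Hypothetical data: eight points, four red and four blue.
parent = ["red"] * 4 + ["blue"] * 4

# A split that separates the colors perfectly.
clean_split = information_gain(parent, ["red"] * 4, ["blue"] * 4)

# A split that leaves both sides as mixed as the parent.
mixed_split = information_gain(
    parent, ["red", "red", "blue", "blue"], ["red", "red", "blue", "blue"]
)

print(clean_split)  # 1.0: all of the parent's uncertainty is removed
print(mixed_split)  # 0.0: the split tells us nothing
```

Under this scoring, a tree builder would prefer the first split and place it nearer the root, since it leaves the purest groups behind.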

To illustrate the idea of low- and high-entropy conditions, consider hypothetical features with the class marked by color (red or blue) and the split marked by a vertical dashed line.
