An explanation of how deep neural networks learn and adapt

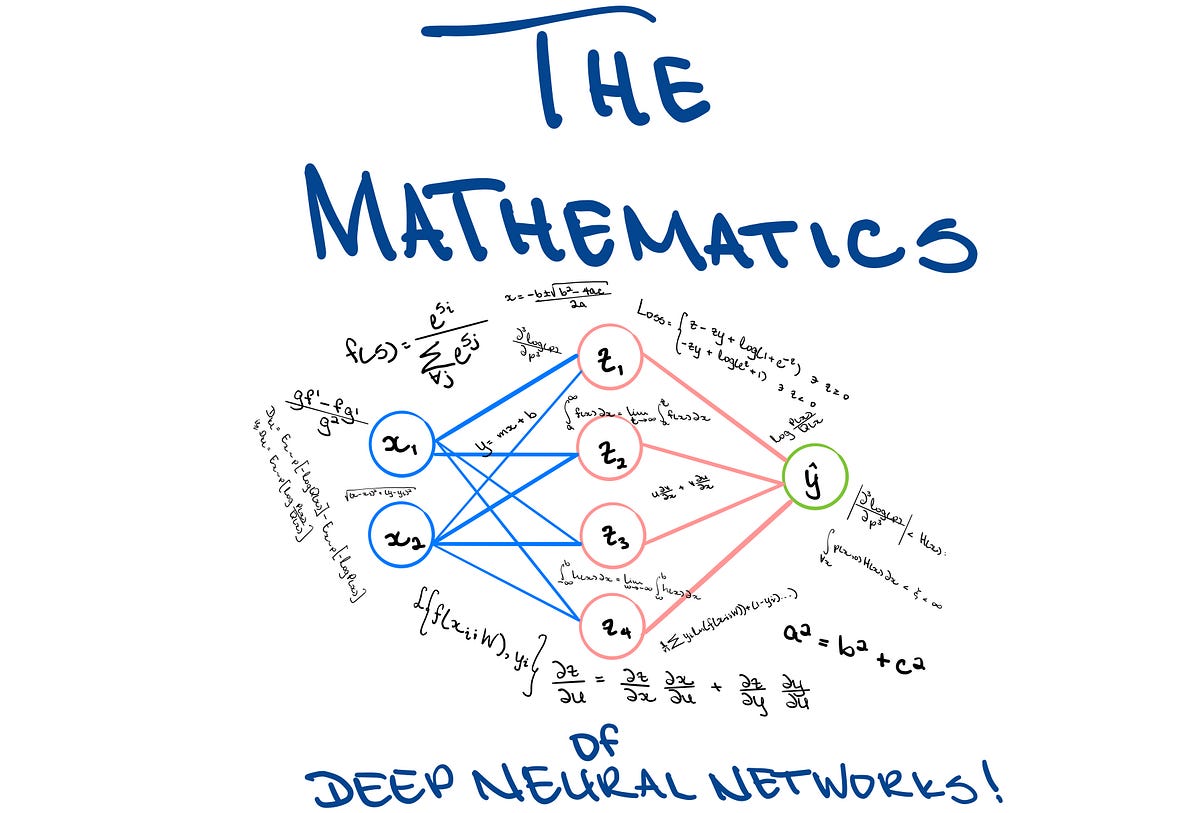

Deep neural networks (DNNs) are essentially built from many connected perceptrons, where a perceptron is a single artificial neuron. Think of an artificial neural network (ANN) as a system in which a set of inputs is fed along weighted paths. These inputs are processed, and an output is produced to perform some task. Over time, the ANN ‘learns’ by adjusting the weights on those paths: paths found to be more important (or to produce more desirable results) are assigned higher weights within the model than those that produce less desirable results.
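To make the idea of weighted paths concrete, here is a minimal sketch of a single perceptron in plain Python. The article does not name a training method, so the update used here (the classic perceptron learning rule) is an assumption, as are the function names, the learning rate, the epoch count, and the toy AND-gate data.

```python
def predict(inputs, weights, bias):
    """Fire (return 1) if the weighted sum of the inputs plus the bias is positive."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if weighted_sum > 0 else 0


def train(samples, learning_rate=0.1, epochs=20):
    """Assumed training loop: nudge each weight in proportion to the prediction error."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(inputs, weights, bias)
            # Paths that contributed to a wrong answer have their weights adjusted.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Toy data (hypothetical): the logical AND of two binary inputs.
and_gate = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train(and_gate)

for inputs, target in and_gate:
    print(inputs, "->", predict(inputs, weights, bias), "(expected", target, ")")
```

After training, the learned weights are what encode which input paths matter. Deep networks are usually trained with gradient-based methods rather than this rule, but the principle of adjusting weights in response to errors is the same.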

Within a DNN, a layer in which every input is connected to every output is referred to as a dense (or fully connected) layer. DNNs can also contain multiple hidden layers. A hidden layer sits between the input and output of the neural network, and is where an activation function transforms the information being fed in. It is referred to as a hidden layer because it is not directly observable from the system’s inputs and outputs. In general, the deeper the neural network, the more complex the patterns it can recognize in data.
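As a rough illustration of dense and hidden layers, the sketch below passes two inputs through one hidden layer of three neurons and then through a single output neuron. Everything specific here is an assumption made for the example: the layer sizes, the weight and bias values, the choice of sigmoid as the activation function, and the helper name `dense_layer`.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Fully connected layer: every input feeds every output neuron.

    weights[j][i] is the weight on the path from input i to neuron j.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical 2-3-1 network with hand-picked (untrained) parameters.
hidden_weights = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[0.7, -0.4, 0.2]]
output_biases = [0.05]

x = [1.0, 2.0]
hidden = dense_layer(x, hidden_weights, hidden_biases)          # not visible from outside
output = dense_layer(hidden, output_weights, output_biases)     # what the caller sees

print("hidden activations:", hidden)
print("network output:", output)
```

A caller only ever supplies `x` and reads `output`; the `hidden` values exist purely inside the network, which is the sense in which the layer is hidden. Making the network deeper amounts to chaining more `dense_layer` calls between the input and the output.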
