Object Detection at a Glance

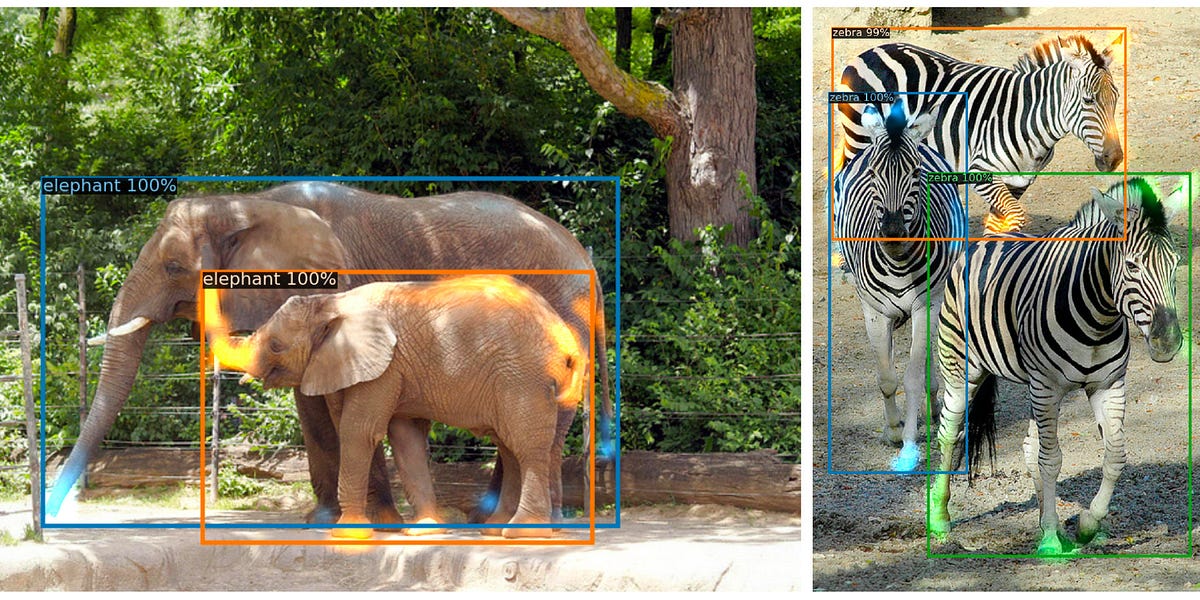

In Computer Vision, object detection is a task where we want our model to distinguish the foreground objects from the background and predict the locations and the categories for the objects present in the image.

There are many frameworks out there for object detection but the researchers at Facebook AI has come up with DETR, an innovative and efficient approach to solve the object detection problem.

Introduction

DETR treats an object detection problem as a direct set prediction problem with the help of an encoder-decoder architecture based on transformers. By set, I mean the set of bounding boxes. Transformers are the new breed of deep learning models that have performed outstandingly in the NLP domain. This is the first time when someone used transformers for object detection.

The authors of this paper have evaluated DETR on one of the most popular object detection datasets, COCO, against a very competitive Faster R-CNN baseline.

In the results, the DETR achieved comparable performances. More precisely, DETR demonstrates significantly better performance on large objects. However, it didn’t perform that well on small objects.

The DETR Model

Most modern object detection methods make predictions relative to some initial guesses. Two-stage detectors(R-CNN family) predict boxes w.r.t. proposals, whereas single-stage methods(YOLO) make predictions w.r.t. anchors or a grid of possible object centers. Recent work demonstrate that the final performance of these systems heavily depends on the exact way these initial guesses are set. In our model(DETR) we are able to remove this hand-crafted process and streamline the detection process by directly predicting the set of detections with absolute box prediction w.r.t. the input image rather than an anchor.

Two things are essential for direct set predictions in detection:

- A set prediction loss that forces unique matching between predicted and ground truth boxes

- An architecture that predicts (in a single pass) a set of objects and models their relation

The researcher at Facebook AI used bipartite matching between predicted and ground truth objects which ensures one-to-one mapping between predicted and ground truth objects/bounding boxes.

DETR infers a fixed-size set of N predictions, in a single pass through the decoder, where N is set to be significantly larger than the typical number of objects in an image. This N user has to decide according to their need. Suppose in an image maximum 5 object are there so we can define (N=7,8,…). let’s say N=7, so DETR infers a set of 7 prediction. Out of this 7 prediction 5 prediction will for object and 2 prediction are for ∅(no object) means they will assign to background. Each prediction is a kind of tuple containing class and bounding box (c,b).

#artificial-intelligence #machine-learning #object-detection #computer-vision #deep learning