Building a multi-label classifier in Keras doesn’t seem like a difficult task, but it can become quite tricky when you are dealing with a highly imbalanced dataset, more than 30 different labels, and multiple losses.

In this post, we’ll go through the definition of a multi-label classifier, multiple losses, text preprocessing and a step-by-step explanation of how to build a multi-output RNN-LSTM in Keras.

The dataset that we’ll be working with consists of natural disaster messages that are classified into 36 different classes. The dataset was provided by Figure Eight. Examples of input messages:

['Weather update - a cold front from Cuba that could pass over Haiti',

'Is the Hurricane over or is it not over',

'Looking for someone but no name',

'UN reports Leogane 80-90 destroyed. Only Hospital St. Croix functioning. Needs supplies desperately.',

'says: west side of Haiti, rest of the country today and tonight']

What’s a Multi-Label Classification problem?

Before explaining what it is, let’s first go through the definition of a more common classification type: multiclass. In a multiclass problem, the classes are mutually exclusive, i.e., each sample belongs to exactly one class. For example, if you have the classes {Car, Person, Motorcycle}, your model will have to output Car OR Person OR Motorcycle. For this kind of problem, a Softmax function is used for classification:

Softmax Classification function in a Neural Network
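To make the multiclass case concrete, here is a minimal sketch of Softmax in plain Python. The raw scores in `logits` are made-up values for illustration, not outputs of the model built in this post.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    # Subtracting the max keeps exp() from overflowing; the result is unchanged.
    highest = max(logits)
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

classes = ["Car", "Person", "Motorcycle"]
logits = [2.0, 1.0, 0.1]  # hypothetical raw scores from the last layer

probs = softmax(logits)
# Multiclass: pick the single class with the highest probability.
predicted = classes[probs.index(max(probs))]
print(predicted, probs)
```

Because the probabilities compete with each other and sum to 1, raising the score of one class necessarily lowers the others, which is why Softmax fits mutually exclusive classes.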

In multi-label classification, a data sample can belong to multiple classes at once. Continuing the example above, your model can assign both Car AND Person to the same sample (imagining that each sample is an image that may contain any of these 3 classes).

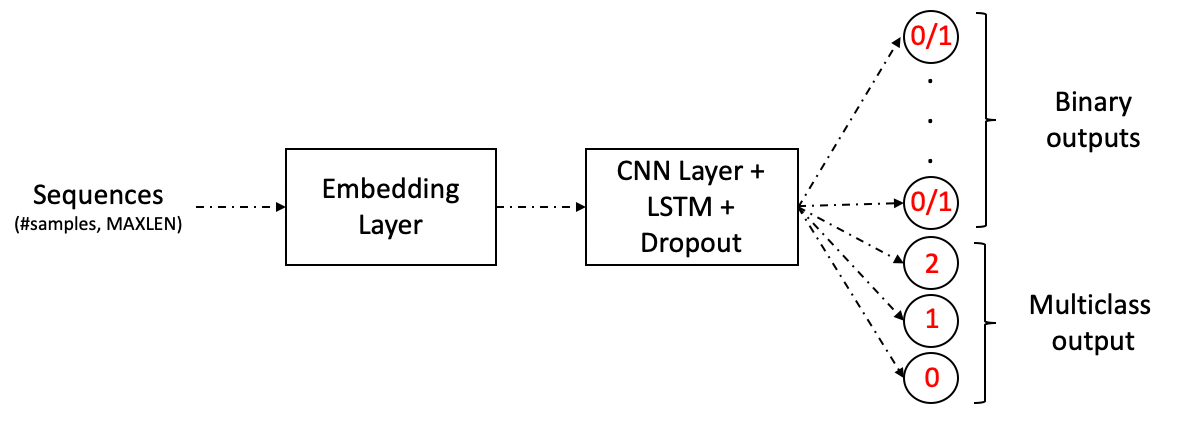
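A common way to get this behaviour is to score each class independently with a sigmoid and keep every class above a threshold. The sketch below assumes that setup; the scores and the 0.5 threshold are illustrative choices, not values from the dataset.

```python
import math

def sigmoid(x):
    """Squash a raw score into an independent probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

classes = ["Car", "Person", "Motorcycle"]
logits = [1.5, 0.8, -2.0]  # hypothetical raw scores, one per class
threshold = 0.5            # assumed decision threshold

probs = [sigmoid(x) for x in logits]
# Multi-label: keep every class whose probability clears the threshold.
labels = [c for c, p in zip(classes, probs) if p >= threshold]
print(labels, probs)
```

Unlike Softmax, these probabilities are not forced to sum to 1, so several classes (or none) can be predicted for the same sample.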

In the studied dataset, there are 36 different classes: 35 of them have a binary output (0 or 1), and 1 of them has 3 possible values (a multiclass case): 0, 1 or 2.

Multiple Losses

Using multiple loss functions in the same model means that the model is doing different tasks and sharing part of its layers between these tasks. It can be tempting to build a separate model for each type of output, but in some situations sharing some layers of your Neural Network helps the model generalize better.
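As a rough illustration of how two losses can be combined into a single training signal, the sketch below computes a binary cross-entropy for the binary labels and a categorical cross-entropy for the 3-class label, then adds them with weights. The predictions, the four-label subset and the equal weights are all assumptions for the example. In Keras, the same idea is expressed by passing one loss per output (and optionally `loss_weights`) to `model.compile`.

```python
import math

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over independent 0/1 labels."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

def categorical_crossentropy(true_class, probs, eps=1e-7):
    """Cross-entropy for one sample with mutually exclusive classes."""
    return -math.log(max(probs[true_class], eps))

# Hypothetical predictions for a single message.
binary_true = [1, 0, 0, 1]          # a small subset of the binary labels
binary_pred = [0.9, 0.2, 0.1, 0.6]  # sigmoid outputs
multi_true = 2                      # true value of the 3-class label
multi_pred = [0.1, 0.2, 0.7]        # softmax output

loss_binary = binary_crossentropy(binary_true, binary_pred)
loss_multi = categorical_crossentropy(multi_true, multi_pred)

# Assumed equal weighting; the weights are a tunable design choice.
weights = {"binary": 1.0, "multiclass": 1.0}
total_loss = weights["binary"] * loss_binary + weights["multiclass"] * loss_multi
print(loss_binary, loss_multi, total_loss)
```

Since both losses are minimized through the same shared layers, the weights control how much each task influences what those layers learn.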
