Do machine learning models see language differently from the way we do, and how can we find out? This question came out of an earlier article, which looked inside an NLP model to see which words were driving its output and asked: why those words?

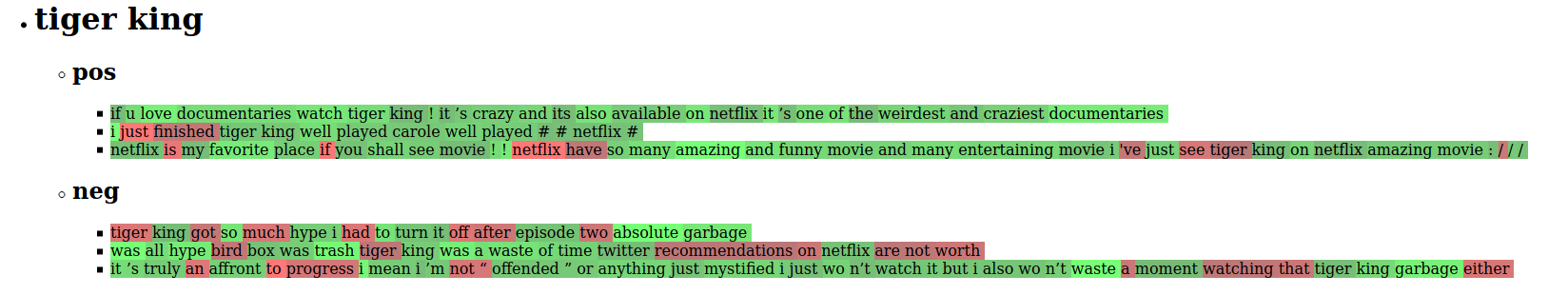

When talking through these results with other data scientists, we would start to interpret why each word was important to the model, and why one word was considered positive and another not. For instance, in a tweet about the Netflix series ‘Tiger King’, the model highlighted obvious words as carrying positive sentiment (‘loved’, ‘amazing’), but it also flagged words like ‘documentary’ and ‘I’ as important for a positive sentiment.

Tweets with words highlighted by an NLP model. The brighter the green, the greater the word's contribution to the sentiment.

I would hand-wavingly suggest “well, documentaries are popular” and “maybe by using the personal pronoun, people are associating with it on a deeper level and will therefore be positive about it…”

Pop psychology at best. But it raised the question.
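Per-word “contributions” like the highlighted tweets can be produced in several ways, and this section does not say which method the model used. As a rough illustration only, here is a minimal sketch of one common approach, leave-one-out (occlusion) attribution: remove each word in turn and measure how much the sentiment score drops. The scorer `toy_sentiment` and its `TOY_WEIGHTS` lexicon are hypothetical stand-ins for a trained model, and the weights are invented for the example.

```python
from typing import Callable, List, Tuple

# Hypothetical toy sentiment scorer: a hand-made lexicon of word weights.
# It stands in for a trained model; the numbers are invented for illustration.
TOY_WEIGHTS = {"loved": 2.0, "amazing": 1.5, "documentary": 0.5, "i": 0.3, "boring": -1.5}


def toy_sentiment(tokens: List[str]) -> float:
    """Return a positive-sentiment score as the sum of per-word weights."""
    return sum(TOY_WEIGHTS.get(t.lower(), 0.0) for t in tokens)


def occlusion_attributions(
    tokens: List[str], score_fn: Callable[[List[str]], float]
) -> List[Tuple[str, float]]:
    """Leave-one-out attribution: the score drop when each word is removed."""
    base = score_fn(tokens)
    results = []
    for i, token in enumerate(tokens):
        # Score the sentence with this single token left out.
        without = tokens[:i] + tokens[i + 1:]
        results.append((token, base - score_fn(without)))
    return results


tweet = "I loved this amazing documentary".split()
for word, contribution in occlusion_attributions(tweet, toy_sentiment):
    print(f"{word:>12} {contribution:+.2f}")
```

Because this toy scorer is purely additive, each word's attribution simply equals its lexicon weight. With a real neural model, removing a word also changes how the remaining words are interpreted, which is exactly why a word like ‘I’ can pick up a contribution that is hard to explain.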

#nlp #data-science #machine-learning #deep-learning