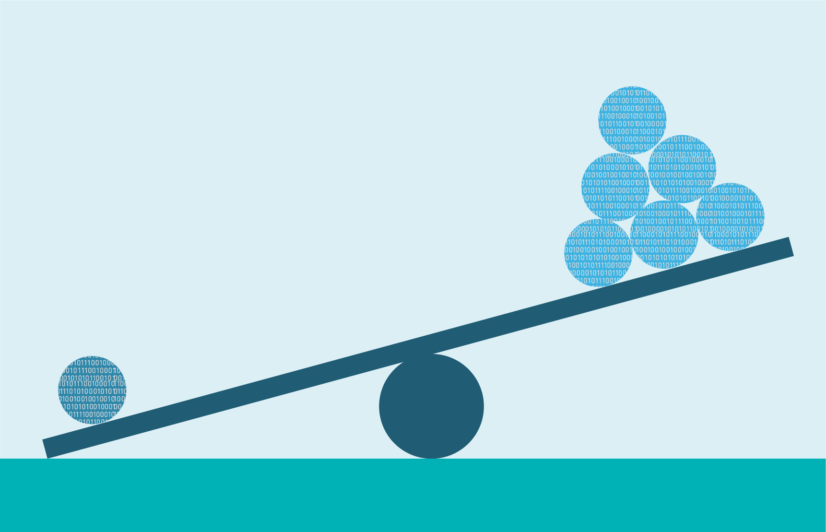

Let me paint a picture for you. You are new to data science, you have just built your first ML model for predictions, and model.score() reports an accuracy of 95%. You are jumping around thinking that you nailed it and that maybe it was your destiny to become a data scientist. Well, I don’t want to burst the bubble, but you could be horribly wrong. Do you know why? Because accuracy is a very poor metric for measuring classifier performance, especially on an imbalanced dataset.

Imbalanced datasets are prevalent in a multitude of fields and sectors. From detecting fraudulent transactions, identifying rare diseases, and catching electricity theft to classifying search-relevant items on an e-commerce site, data scientists come across them in many contexts. The challenge appears when we have to build a machine learning model that can recognize the very rare cases in the training dataset. Because the classes are so disproportionate, a conventional ML algorithm that doesn’t account for the class imbalance tends to assign everything to the class with more instances, the majority class, while at the same time giving us the false impression of an extremely accurate model. Both the inability to predict rare events and the misleading accuracy can defeat the whole purpose of building the predictive model.

Designed by Author

Let me give you an example. Suppose you develop a classifier for predicting fraudulent transactions, and after you’ve finished development, you measure its accuracy on the test set to be 97%. At first glance, that looks like an excellent result, right?

Now let’s compare it to a dummy classifier that always predicts the most frequent class, which here is the non-fraudulent transactions. That is, regardless of what the actual instance is, the dummy classifier will always predict that a transaction is non-fraudulent. Let’s assume we have test data containing 1,000 transactions and that, on average, about 999 of them are non-fraudulent. Our dummy classifier will correctly predict the non-fraudulent label for all 999 of those transactions and get the single fraudulent one wrong, so its accuracy will be 99.9%. Our own classifier’s 97% isn’t nearly as great as we thought when we celebrated. It is no better, and in fact worse, than always guessing the majority class without even looking at the data.
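To make the arithmetic concrete, here is a minimal sketch in plain Python. The labels are made up to mirror the 999-to-1 split described above (they are not real transaction data), and the names `y_true`, `y_pred`, and `majority_class` are purely illustrative.

```python
# Hypothetical test set mirroring the example: 999 legitimate transactions, 1 fraud.
y_true = [0] * 999 + [1]  # 0 = non-fraudulent, 1 = fraudulent

# A "dummy" classifier that ignores its input and always predicts the majority class.
majority_class = max(set(y_true), key=y_true.count)
y_pred = [majority_class] * len(y_true)

# Accuracy: the fraction of predictions that match the true label.
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Number of fraudulent transactions the dummy classifier actually flags.
frauds_caught = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))

print(f"Dummy accuracy: {accuracy:.1%}")  # 99.9%
print(f"Frauds caught: {frauds_caught}")  # 0
```

The dummy classifier scores 99.9% while catching none of the fraud, which is exactly why accuracy alone tells us so little here.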

Still not convinced? Then let’s make it concrete by building a classifier on a real dataset. We will use the digits dataset, which contains images of handwritten digits labeled 0–9 (i.e., ten classes).

First, we import the necessary libraries and load the dataset with load_digits. Then, to check whether the dataset is balanced, we use NumPy’s bincount function to count the number of instances in each class.
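The step just described could look roughly like the sketch below. It assumes scikit-learn and NumPy are installed; the variable names (`digits`, `X`, `y`, `class_counts`) are illustrative choices rather than anything fixed by the libraries.

```python
import numpy as np
from sklearn.datasets import load_digits

# Load the handwritten digits dataset bundled with scikit-learn.
digits = load_digits()
X, y = digits.data, digits.target  # X: flattened 8x8 images, y: labels 0-9

# np.bincount counts how often each non-negative integer label occurs,
# so index i of the result is the number of samples of digit i.
class_counts = np.bincount(y)

for digit, count in enumerate(class_counts):
    print(f"digit {digit}: {count} samples")
```

Each digit should show up with a similar count, which tells us the original ten-class dataset is roughly balanced.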
