Podcasts are a fun way to learn new things about the topics you like. Podcast hosts have to explain complex ideas in simple terms, because no one would understand them otherwise 🙂 In this article, I present three episodes to get you going.

In case you’ve missed my previous article about podcasts:

Top 5 apps for Data Scientists

The Kernel Trick and Support Vector Machines by Linear Digressions

Linear Digressions logo from SoundCloud

Katie and Ben explore machine learning and data science through interesting (and often very unusual) applications.

In this episode, Katie and Ben explain what the kernel trick in Support Vector Machines (SVMs) is. I really like their simple explanation of the heavy machinery behind SVMs. Don’t know what maximum margin classifiers are? Then first listen to the supporting episode, Maximal Margin Classifiers.

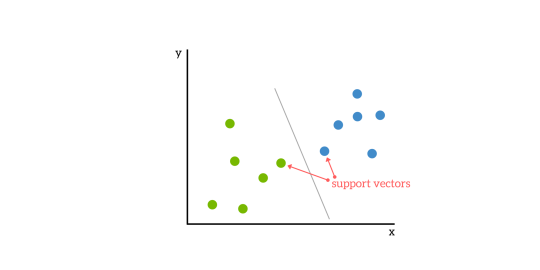

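A maximum margin classifier tries to find a line (a decision boundary) between the two classes, the left and right side in the image, that maximizes the margin: the distance from the boundary to the closest points of each class. The boundary is called a hyperplane because usually more than 2 dimensions are involved. Those closest points are the support vectors, and the decision boundary lies halfway between them.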

Binary classification problem with support vectors
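To make "maximize the margin" concrete, here is a minimal sketch for the 2-dimensional case, assuming the two classes are perfectly separable. It is not how real SVM libraries work (they solve a quadratic optimization problem); it simply tries many line directions, keeps the one with the widest gap between the classes, and then reports the support vectors. The function names and the toy data are hypothetical.

```python
import math


def max_margin_line(points, labels, steps=3600):
    """Approximate a 2-D hard-margin classifier by trying `steps` line directions."""
    best = None  # (margin, unit normal w, offset b) of the best line found so far
    for k in range(steps):
        theta = 2 * math.pi * k / steps
        w = (math.cos(theta), math.sin(theta))  # unit normal of the candidate line
        # Project every point onto w; the candidate boundary is {p : w . p = b}.
        proj = [w[0] * x + w[1] * y for x, y in points]
        pos = [p for p, lab in zip(proj, labels) if lab == 1]
        neg = [p for p, lab in zip(proj, labels) if lab == -1]
        gap = min(pos) - max(neg)
        if gap <= 0:
            continue  # this direction cannot separate the two classes
        margin = gap / 2  # the boundary sits halfway between the closest points
        if best is None or margin > best[0]:
            best = (margin, w, (min(pos) + max(neg)) / 2)
    return best


def support_vectors(points, w, b, margin, tol=1e-6):
    """Return the points lying exactly one margin away from the boundary."""
    return [p for p in points
            if abs(abs(w[0] * p[0] + w[1] * p[1] - b) - margin) < tol]


points = [(0, 0), (0, 1), (1, 0), (3, 3), (3, 4), (4, 3)]
labels = [-1, -1, -1, 1, 1, 1]
margin, w, b = max_margin_line(points, labels)
print(round(margin, 4), support_vectors(points, w, b, margin))
# 1.7678 [(0, 1), (1, 0), (3, 3)]
```

Only three of the six points end up defining the boundary; moving any of the others (without crossing the margin) would not change the line at all.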

What is the kernel trick?

When you have 3 points in a 2-dimensional space, you can arrange them (for example, on one line, with the middle point belonging to the other class) so that no line separates the classes. Adding a third dimension, computed non-linearly from the first two, can make them separable. One way to introduce a new dimension is to use the squared distance from the origin:

z = x^2 + y^2

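This lifts points that are farther from the origin higher than points closer to the origin, so a flat plane in the new 3-dimensional space can separate them. Take a look at the animation below. This also explains how a linear classifier becomes non-linear: the separating plane in 3 dimensions maps back to a curve (here, a circle) in the original 2-dimensional space.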

SVM with polynomial kernel visualization from giphy
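A minimal sketch of the mapping z = x^2 + y^2 on the three collinear points described above, assuming the middle point is the negative class. The threshold 0.5 is a hypothetical choice that sits between the lifted values 0 and 1.

```python
def lift(x, y):
    """Map a 2-D point to 3-D by adding its squared distance from the origin."""
    return (x, y, x * x + y * y)


def classify(x, y, threshold=0.5):
    """Linear rule in the lifted space: which side of the plane z = threshold."""
    # In the original 2-D space this plane becomes the circle x^2 + y^2 = threshold.
    return 1 if lift(x, y)[2] > threshold else -1


# Three points on one line: the middle point belongs to the other class,
# so no straight line in 2-D separates them.
points = [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]
labels = [1, -1, 1]

print([lift(x, y) for x, y in points])      # [(-1.0, 0.0, 1.0), (0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
print([classify(x, y) for x, y in points])  # [1, -1, 1]
```

The rule is linear in (x, y, z) but, seen from the original plane, it draws a circle of radius √0.5 around the origin.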

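When there are more dimensions than samples (and the points are in general position), we can always separate them with a hyperplane; this is the main idea behind kernel SVMs. The trick is that an SVM only needs dot products between points, and a kernel computes the dot product in the higher-dimensional space without ever constructing the new coordinates. The polynomial kernel is one of the kernels commonly used with SVMs (the most common is the radial basis function, or RBF, kernel). The 2nd-degree polynomial kernel implicitly includes all pairwise products (cross-terms) of the features, which is useful when we want to model interactions between features.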
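The kernel trick can be checked numerically. This sketch, assuming 2-dimensional inputs and the 2nd-degree kernel K(a, b) = (a · b + c)^2 with a hypothetical default c = 1, computes the same number twice: once with the cheap kernel formula and once by explicitly building the 6-dimensional feature vectors, whose last entry is the x1·x2 cross-term.

```python
import math


def poly_kernel(a, b, c=1.0):
    """2nd-degree polynomial kernel K(a, b) = (a . b + c)^2 for 2-D inputs."""
    return (a[0] * b[0] + a[1] * b[1] + c) ** 2


def explicit_features(a, c=1.0):
    """6-D feature map whose dot product equals poly_kernel (never needed in practice)."""
    x1, x2 = a
    r = math.sqrt(2)
    return (c,
            r * math.sqrt(c) * x1, r * math.sqrt(c) * x2,  # linear terms
            x1 * x1, x2 * x2,                              # squares
            r * x1 * x2)                                   # cross-term (interaction)


x, y = (1.0, 2.0), (3.0, -1.0)
via_kernel = poly_kernel(x, y)
via_features = sum(p * q for p, q in zip(explicit_features(x), explicit_features(y)))
print(via_kernel, round(via_features, 10))  # 4.0 4.0
```

The kernel needs one 2-D dot product, while the explicit route builds 6 coordinates per point; for higher degrees and more features, that gap grows quickly, which is why SVMs work with the kernel directly.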

What is the kernel trick? Popcorn that joined the army and was made a kernel.

Should You Get a Ph.D. by Partially Derivative

Partially Derivative Logo from Twitter

Partially Derivative is hosted by data science super geeks. They talk about the everyday data of the world around us.

This episode may interest students who are thinking about pursuing a Ph.D. Chris talks about getting a Ph.D. from his personal perspective: he wasn’t interested in math when he was young and was more into history, and after college he had had enough of school and didn’t intend to pursue a Ph.D. He sent an application to UC Davis anyway and was presented with a challenge. Enjoy listening to his adventure.

Tim, a two-time Ph.D. dropout and a data scientist living in North Carolina, has a website dedicated to this topic: SHOULD I GET A PH.D.?

Let the adventure begin…

AI Decision-Making by Data Skeptic

Data Skeptic Logo from https://dataskeptic.com/

The Data Skeptic Podcast features interviews and discussion of topics related to data science, statistics and machine learning.

In this episode, Dongho Kim discusses how he and his team at Prowler have been building a platform for autonomous decision-making based on probabilistic modeling, reinforcement learning, and game theory. The aim is for an AI system to make decisions as well as humans can.
