Support vector machines were first introduced by Vladmir Vapnik and his colleagues at Bell Labs in 1992. However, many are not aware that basics of support vector machines were already developed in 1960s with his PhD thesis at Moscow University. Over decades, SVM has been highly preferred by many since it uses less computational resources while allowing data scientists to achieve notable accuracy. Not to mention that it solves both classification and regression problems.

1. Basic Concept

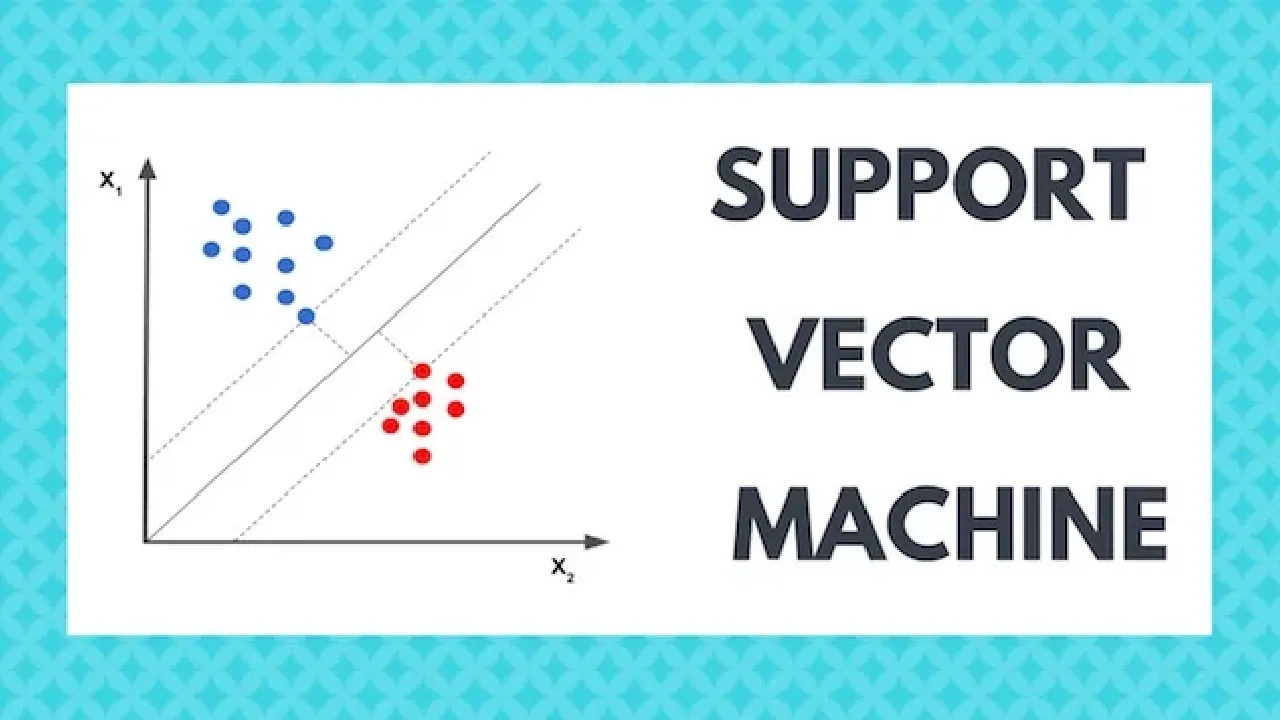

SVM can solve linear and non-linear problems and work well for many practical business problems. The principle idea of SVM is straight forward. The learning model draws a line which separates data points into multiple classes. In a binary problem, this decision boundary takes the widest street approach maximising the distance to the closest data points from each class.

In vector calculus, the dot product measures ‘how much’ one vector lies along another, and tells you the amount of force going in the direction of the displacement, or in the direction of another vector.

For instance, we have the unknown vector_ u_ and normal vector w which is perpendicular to the decision boundary. The dot product of w·u denotes the amount of force by u going in the direction of vector w. In this regard, if unknown vector u locates on the positive side of boundary, it can be described as below with the constant b.

The samples locating above the boundary that classifies positive samples (+1) or below the boundary that classifies negative samples (-1) can be accordingly expressed.

#supervised-learning #machine-learning #svm #data-science