Gradient Boost Implementation = pytorch optimization + sklearn decision tree regressor

To understand the gradient boosting algorithm, this post implements it from first principles. **pytorch** performs the necessary optimization (minimizing the loss function) and calculates the residuals (the negative partial derivatives of the loss function with respect to the predictions), while the decision tree regressor from sklearn builds the regression trees.

A line of trees

Algorithm Outline

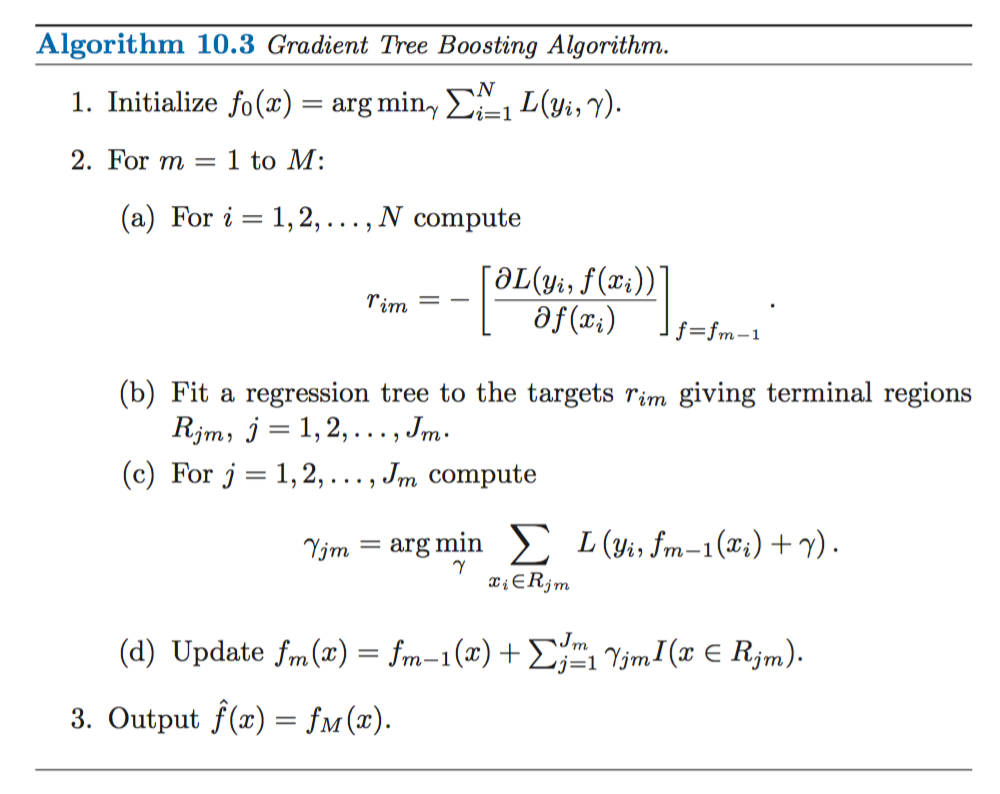

The outline of the algorithm can be seen in the following figure:

Steps of the algorithm

We will try to implement this step by step and also try to understand why the steps the algorithm takes make sense along the way.

Explanations + Code snippets

Loss function minimization

Crucially, the algorithm tries to minimize a loss function, be it the squared error in the case of regression or the binary cross-entropy in the case of classification.
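To make the two losses concrete, here is a minimal sketch of how they could be evaluated in pytorch. The toy targets and predictions are made up for illustration, and computing the binary cross-entropy from raw scores (log-odds) is an assumption, not necessarily the setup used in the rest of this post.

```python
import torch
import torch.nn.functional as F

# Hypothetical toy targets and predictions, for illustration only.
y_reg = torch.tensor([1.0, 2.0, 3.0])
pred_reg = torch.tensor([1.5, 2.0, 2.0])

# Regression: mean squared error between predictions and targets.
mse = F.mse_loss(pred_reg, y_reg)

y_clf = torch.tensor([1.0, 0.0, 1.0])    # binary labels
logits = torch.tensor([2.0, -1.0, 0.0])  # raw scores (log-odds), assumed

# Classification: binary cross-entropy computed directly from raw scores.
bce = F.binary_cross_entropy_with_logits(logits, y_clf)

print(mse.item(), bce.item())
```

In both cases the loss is a single number that gets smaller as the predictions move closer to the targets.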

The loss function's arguments are the predictions we make for the training examples: L(prediction_for_train_point_1, prediction_for_train_point_2, …, prediction_for_train_point_m).
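Treating the predictions themselves as the variables of the loss is what lets pytorch differentiate with respect to them. The following is only a sketch under stated assumptions: the toy data, the squared-error loss with a factor of 1/2, the tree depth and the `learning_rate` value are all hypothetical choices for illustration.

```python
import numpy as np
import torch
from sklearn.tree import DecisionTreeRegressor

# Hypothetical toy data: m = 6 training points with a single feature.
X = np.array([[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]])
y = torch.tensor([0.0, 0.5, 2.0, 2.5, 4.5, 5.0])

# One prediction per training point; these are the arguments of the loss.
# Start from a constant prediction (the mean of the targets).
preds = torch.full_like(y, y.mean().item()).requires_grad_(True)

# L(pred_1, ..., pred_m): half the sum of squared errors.
loss = 0.5 * torch.sum((preds - y) ** 2)
loss.backward()

# Negative partial derivatives of L with respect to each prediction.
# For this particular loss they are simply y - preds.
residuals = -preds.grad

# Fit a shallow regression tree to the residuals.
tree = DecisionTreeRegressor(max_depth=1).fit(X, residuals.numpy())

# Move the predictions a small step in the direction the tree suggests.
learning_rate = 0.5  # assumed value
step = torch.from_numpy(tree.predict(X)).float()
new_preds = preds.detach() + learning_rate * step
```

With squared error the negative gradient is just the ordinary residual, which is why the tree is said to be fitted to the residuals. For other losses the same code applies, only the expression for `loss` changes.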
