In 2017, the paper “Attention Is All You Need” shocked the land of NLP (Natural Language Processing). It was shocking not only because of its catchy title, but also because it introduced a new model architecture called the “Transformer”, which performed much better than traditional RNN-type networks and paved the way for the state-of-the-art NLP model BERT.

This stone cast into the pond of NLP has sent ripples into the pond of GANs (Generative Adversarial Networks). Many researchers have been inspired by it and have tried to harness the magic power of attention. But when I first started reading papers about using attention in GANs, it seemed to me that the same word “attention” carried many different meanings. In case you are as confused as I was, let me be at your service and shed some light on what people really mean when they say they use “attention” in GANs.

Meaning 1: Self-attention

Self-attention in GANs is very similar to the mechanism in the NLP Transformer model. In both cases, it addresses a model's difficulty in capturing long-range dependencies.

In NLP, the problem arises with long sentences. Take this quote from Oscar Wilde: “To **live** is the rarest thing in the world. Most people **exist**, that is all.” The two words in bold, “live” and “exist”, are related, but they sit far apart from each other, which makes the relationship hard for an RNN-type model to capture: the information has to pass step by step through every word in between.
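To make the contrast with RNNs concrete, here is a minimal plain-Python sketch of scaled dot-product self-attention, the core operation of the Transformer. Every token scores every other token directly, so “live” can reach “exist” in a single step no matter how far apart they are. The embeddings and identity projection matrices are hand-picked assumptions for illustration (a real model learns them); giving “live” and “exist” similar vectors stands in for a learned notion of relatedness.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product self-attention over a sequence x (n_tokens x d_model).

    Returns (output, weights); weights[i][j] is how much token i attends to token j.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of k
    scores = matmul(q, k_t)  # every token scores every other token, near or far
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Toy example (assumption): "live" and "exist" get similar hand-picked embeddings,
# every other word shares a neutral one, and the projections are identity matrices.
words = "To live is the rarest thing in the world Most people exist".split()
x = [[2.0, 0.0, 0.0] if w in ("live", "exist") else [0.0, 1.0, 0.0] for w in words]
identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
out, weights = self_attention(x, identity, identity, identity)

row = weights[words.index("live")]
best = max((j for j, w in enumerate(words) if w != "live"), key=lambda j: row[j])
print(words[best])  # exist
```

Ten tokens separate the two words, yet the attention weight between them is computed in one step, whereas an RNN would have to carry “live” through ten hidden-state updates.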

The situation is much the same in GANs. Most GANs use a CNN structure, which is good at capturing local features but may overlook long-range dependencies that fall outside its receptive field. As a result, it is easy for a GAN to generate realistic-looking fur on a dog, yet it may still make the mistake of generating a dog with five legs.

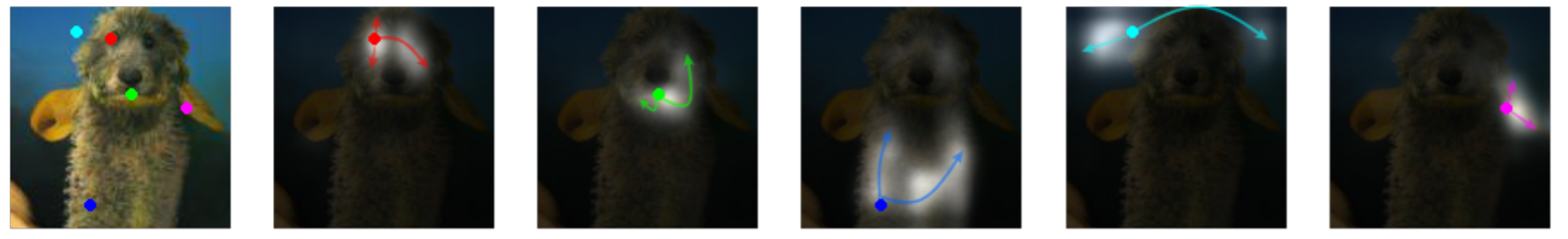
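One way to see the receptive-field limit is to compute it. The sketch below uses the standard recurrence for the theoretical receptive field of stacked convolutions: each layer adds (kernel size - 1) times the cumulative stride of the layers below it. The layer configurations in the example are hypothetical, and the function ignores dilation and padding.

```python
from typing import Sequence, Tuple


def receptive_field(layers: Sequence[Tuple[int, int]]) -> int:
    """Theoretical receptive field (in input pixels, per side) of stacked conv layers.

    layers: (kernel_size, stride) for each layer, ordered from the input upward.
    Ignores dilation and padding.
    """
    rf = 1    # a single input pixel sees only itself
    jump = 1  # input-pixel distance between neighboring units at the current depth
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


# Hypothetical configurations, for illustration only.
print(receptive_field([(3, 1)] * 5))  # 11: five 3x3, stride-1 convs see an 11x11 patch
print(receptive_field([(3, 2)] * 5))  # 63: striding widens the view, but gradually
```

An 11 × 11 patch is tiny next to, say, a 128 × 128 image, so two distant body parts can easily fall outside any single unit's view; downsampling widens that view only gradually and at the cost of spatial detail.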

Self-Attention Generative Adversarial Networks (SAGAN) add a self-attention module that guides the model to look at features in distant parts of the image. In the pictures below, the picture on the left is a generated image, with some sample locations labeled with colored dots. The other images show the corresponding attention map for each location. The most interesting one, I find, is the fifth image, with the cyan dot: it shows that when the model generates the dog's left ear, it looks not only at the local region around the left ear but also at the right ear.

Visualization of the attention map for each color-labeled location (source).
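Below is a minimal plain-Python sketch of a SAGAN-style attention block, assuming the formulation described in the SAGAN paper: 1×1 convolutions f, g and h (plus an output projection v) transform each location's features, the attention weights are a softmax of f(x_i)ᵀg(x_j) over all locations i, and the result is added back as y = γ·o + x, with a learnable scale γ initialized to 0. The 4 × 4 feature map, the identity weight matrices, and the two “ears” sharing a feature vector are made-up assumptions for illustration; a real model learns the weights and reduces the channel count inside the block.

```python
import math
from typing import List, Tuple

Vec = List[float]
Mat = List[List[float]]


def linear(w: Mat, x: Vec) -> Vec:
    """A 1x1 convolution at a single location is a matrix-vector product."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def softmax(v: Vec) -> Vec:
    m = max(v)
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]


def sagan_attention(pixels: List[Vec], wf: Mat, wg: Mat, wh: Mat, wv: Mat,
                    gamma: float) -> Tuple[List[Vec], Mat]:
    """SAGAN-style self-attention over N locations (a flattened H x W feature map).

    pixels[i] is the C-channel feature vector at location i.
    Returns (y, beta): y[j] = gamma * o[j] + pixels[j], and beta[j][i] is how
    much location j attends to location i when it is being synthesized.
    """
    n = len(pixels)
    f = [linear(wf, x) for x in pixels]
    g = [linear(wg, x) for x in pixels]
    h = [linear(wh, x) for x in pixels]
    # Softmax over ALL locations i, not just a local window.
    beta = [softmax([sum(a * b for a, b in zip(f[i], g[j])) for i in range(n)])
            for j in range(n)]
    y = []
    for j, x in enumerate(pixels):
        mixed = [sum(beta[j][i] * h[i][c] for i in range(n)) for c in range(len(h[0]))]
        o = linear(wv, mixed)
        y.append([gamma * oc + xc for oc, xc in zip(o, x)])  # scaled residual
    return y, beta


# Toy 4x4 feature map (assumption): the two far corners, standing in for the
# dog's two ears, share a feature vector; all other locations share another.
pixels = [[3.0, 0.0] if i in (0, 15) else [0.0, 1.0] for i in range(16)]
eye = [[1.0, 0.0], [0.0, 1.0]]  # identity weights instead of learned 1x1 convs
y, beta = sagan_attention(pixels, eye, eye, eye, eye, gamma=0.0)

print(max(range(1, 16), key=lambda i: beta[0][i]))  # 15: the opposite corner
print(y == pixels)  # True: with gamma = 0 the block starts as an identity map
```

Location 0's strongest attention outside itself goes to the opposite corner, the analogue of the left ear looking at the right ear. Starting γ at 0 lets the network rely first on local convolutional cues and learn to weigh distant evidence as γ grows.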

If you are interested in the technical details of SAGAN, I recommend this post in addition to the paper itself.

Meaning 2: Attention in the discriminator

GANs consist of a generator and a discriminator. In the world of GANs, they are like two gods eternally at war: the generator god tirelessly creates, while the discriminator god stands at the side and criticizes how bad these creations are. It may sound as if the discriminator is the bad god, but that is not true. It is through these criticisms that the generator god learns how to improve.
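The “criticism” has a precise form. Below is a minimal sketch of the standard GAN losses from the original GAN formulation, written on the discriminator's raw output logits and using the common non-saturating generator loss. The last function is the derivative of the generator's loss with respect to the discriminator's logit on a fake sample, which is the signal backpropagation carries from the discriminator into the generator. Other GAN variants use different loss functions, and the logit values below are arbitrary.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def discriminator_loss(real_logit: float, fake_logit: float) -> float:
    # Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0.
    # -log(sigmoid(r)) = softplus(-r);  -log(1 - sigmoid(f)) = softplus(f)
    return softplus(-real_logit) + softplus(fake_logit)


def generator_loss(fake_logit: float) -> float:
    # Non-saturating loss -log(sigmoid(f)): G wants D to call its sample real.
    return softplus(-fake_logit)


def generator_signal(fake_logit: float) -> float:
    # d(generator_loss)/d(fake_logit) = -(1 - sigmoid(f)) = -1 / (1 + exp(f)).
    # Backpropagation carries this through D and on into G's parameters.
    return -1.0 / (1.0 + math.exp(fake_logit))


for logit in (-3.0, 3.0):
    print(f"D logit on fake {logit:+.0f}: G loss {generator_loss(logit):.3f}, "
          f"signal {generator_signal(logit):.3f}")
```

When the discriminator is confident a sample is fake (logit -3), the generator receives a strong corrective signal of about -0.95; once its samples fool the discriminator (logit 3), the signal shrinks to about -0.05.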

If these “criticisms” from the discriminator are so helpful, why don't we pay more attention to them? Let's see how the paper “U-GAT-IT: Unsupervised Generative Attentional Networks with Adaptive Layer-Instance Normalization for Image-to-Image Translation” does this.

The U-GAT-IT project tackles a difficult task: converting a photo of a person into a Japanese anime image. It is difficult because an anime character's face is vastly different from a real person's face. Take the pair of images below as an example. The anime character on the right is considered a good conversion of the person on the left. But if we put ourselves in the computer's shoes for a moment, we see that the eyes, nose, and mouth in the two images are very different, and the structure and proportions of the face also change a lot. It is very hard for a computer to know which features to preserve and which to modify.
