Why even talk about xAI?

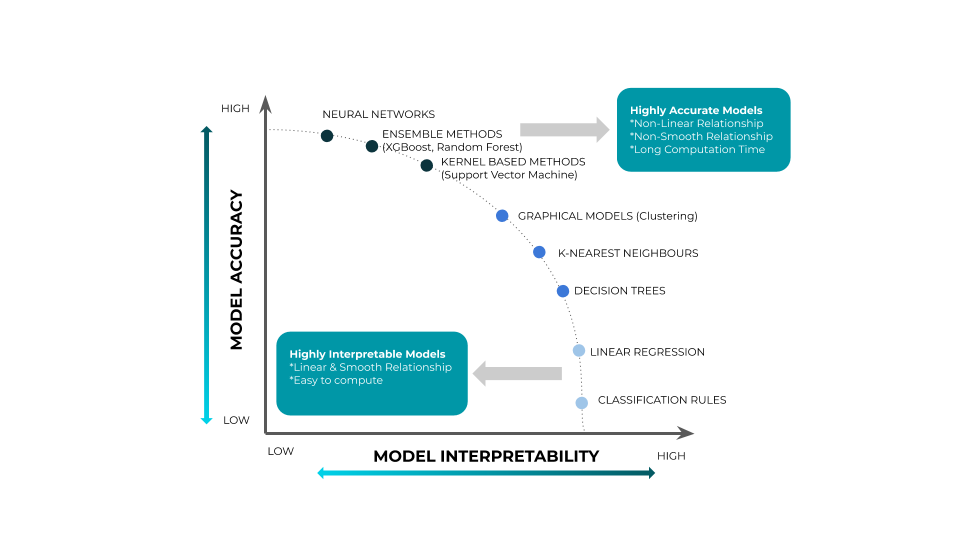

As AI models grow more complex and are deployed in more real-world applications, their impact on our lives becomes hard to overstate. It would not be a stretch to say that these models know us better than we know ourselves! This is amazing and, at the same time, quite frightening. As algorithmic complexity increases, we pay for it with decreasing interpretability and trust: the more complex a model is, the less likely we are to understand how it works. This is the main reason these AI models are called black boxes: we cannot see why they produce the outputs they do.

Accuracy vs. Interpretability. Source: ExplainX.ai Internal

The reality is that these algorithms are becoming part of our daily lives. From the Facebook News Feed and TikTok to online credit card applications, many of our everyday interactions are driven by powerful AI algorithms, most of which are black boxes. We increasingly use these algorithms in mission-critical applications, such as predicting cancer or accidents, or evaluating job candidates based on text describing them. We therefore need frameworks and techniques that help us navigate and open up these black boxes.

In short, model developers need to answer these five major questions:

- Why did my model give this prediction?

- Does my model's prediction align with business/domain logic? If not, identify the irrelevant features that carry too much weight and address them.

- Is my model's behaviour consistent across different subsets of data? If not, compare and contrast the subsets to identify where and how the behaviour varies.

- Is my model biased towards a specific feature in my dataset? If so, find the source of that bias and remove it.

- What can I do to influence the prediction, i.e., what actions can I take to achieve a desirable outcome?

- Optional: Does my model support auditing to comply with regulatory requirements? (Relevant mainly for financial institutions.)

The role of the data scientist or model developer is to answer all these questions confidently. Building these algorithms responsibly, removing biases, understanding model behaviour and ensuring trust are at the core of what a data scientist needs to accomplish.
