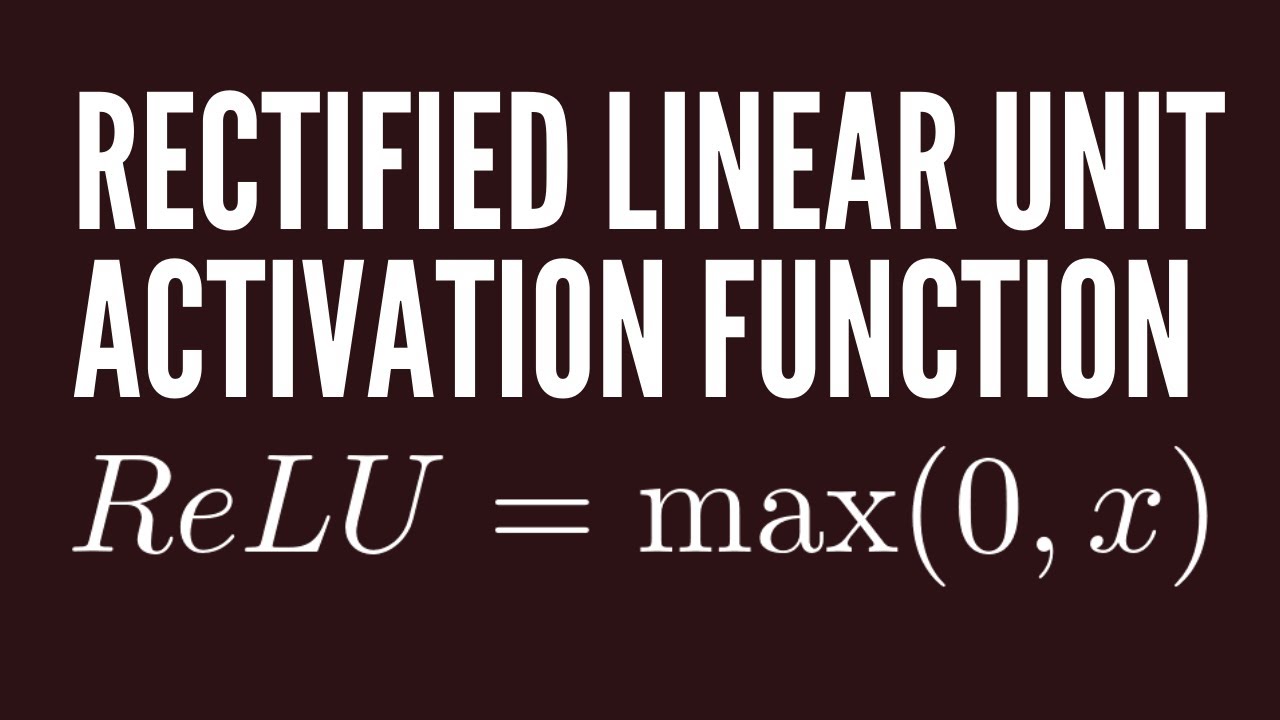

In this video, I’ll discuss the ReLU (Rectified Linear Unit) activation function. ReLU is one of the most widely used activation functions in neural networks and deep learning.
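As a rough illustration (not taken from the video), ReLU is defined as f(x) = max(0, x): positive inputs pass through unchanged and negative inputs become 0. Its derivative is 1 for positive inputs and 0 for negative inputs. At exactly x = 0 the derivative is undefined, and this sketch assumes 0 there, which is a common convention. The helper names `relu`, `relu_grad`, and `relu_vec` are hypothetical and chosen only for this example.

```python
from typing import List


def relu(x: float) -> float:
    """ReLU activation: returns x if x is positive, otherwise 0."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU.

    Assumption: the derivative at x == 0 is undefined, so we pick 0 by convention.
    """
    return 1.0 if x > 0 else 0.0


def relu_vec(xs: List[float]) -> List[float]:
    """Apply ReLU element-wise to a list of inputs (hypothetical helper)."""
    return [relu(x) for x in xs]


if __name__ == "__main__":
    inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
    print(relu_vec(inputs))                 # [0.0, 0.0, 0.0, 0.5, 2.0]
    print([relu_grad(x) for x in inputs])   # [0.0, 0.0, 0.0, 1.0, 1.0]
```

Because the function and its gradient are just a comparison, ReLU is cheap to compute, and its gradient does not shrink for positive inputs the way sigmoid or tanh gradients do.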

#deep-learning #artificial-intelligence #machine-learning #data-science #developer
