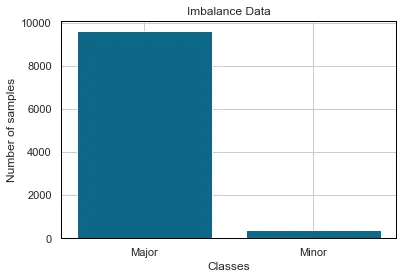

Imbalanced data refers to where the number of observations per class is not equally distributed and often there is a major class that has a much larger percentage of the dataset and minor classes which doesn’t have enough examples.

Small Training Sets also suffer from not having enough examples. Both of the problems are very common in real-world applications but luckily there are several ways to overcome this problem. This article will walk through many different techniques and perspectives to combat Imbalance data. In particular, you will learn about:

- Sampling Techniques

- Weighted Loss

- Data Augmentation Techniques

- Transfer Learning

How imbalanced data affects your model?

Imbalanced data is a common problem in data science. From image classification to fraud detection or medical diagnosis, data scientists face imbalanced datasets. Having an imbalanced dataset decreases the sensitivity of the model towards minority classes. Lets put this on with simple maths:

Imagine you have 10000 lung X-Ray images and only 100 of them are diagnosed with Pneumonia which is an infectious disease that inflames the air sacs in one or both lungs and fills them with liquid. If you train a model that predicts every example as healthy you will get 99% accuracy. Wow, how awesome is that? Wrong, you just killed many people with your model.

#resampling #tutorial #imbalanced-data #machine-learning #data-science