Introduction

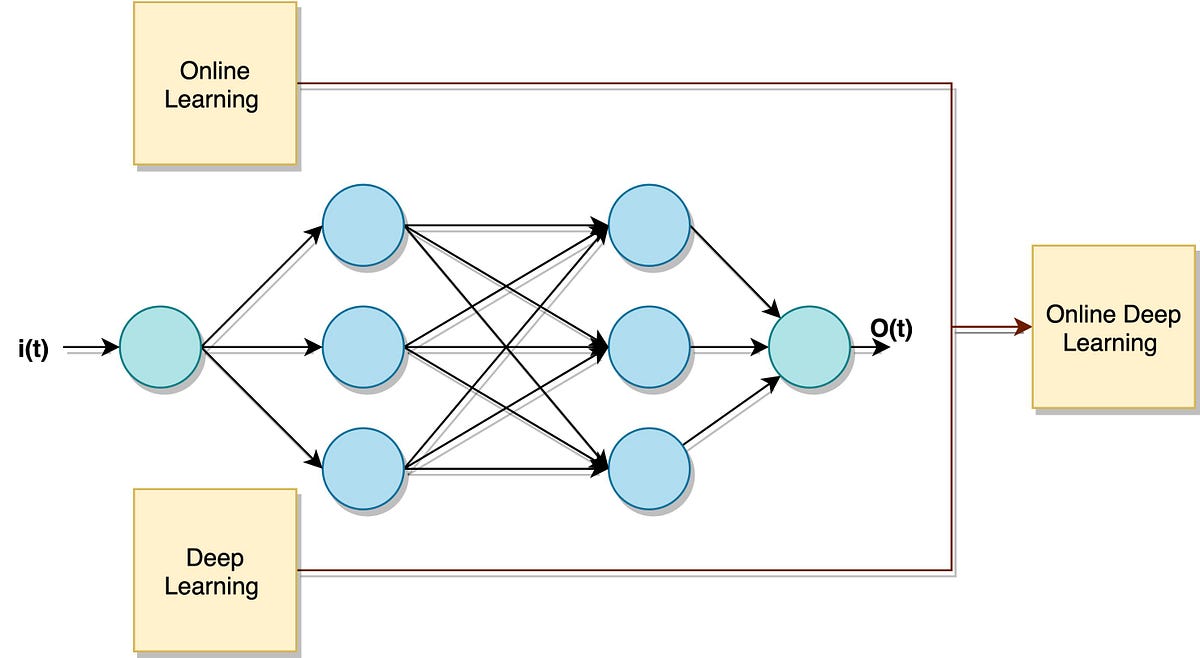

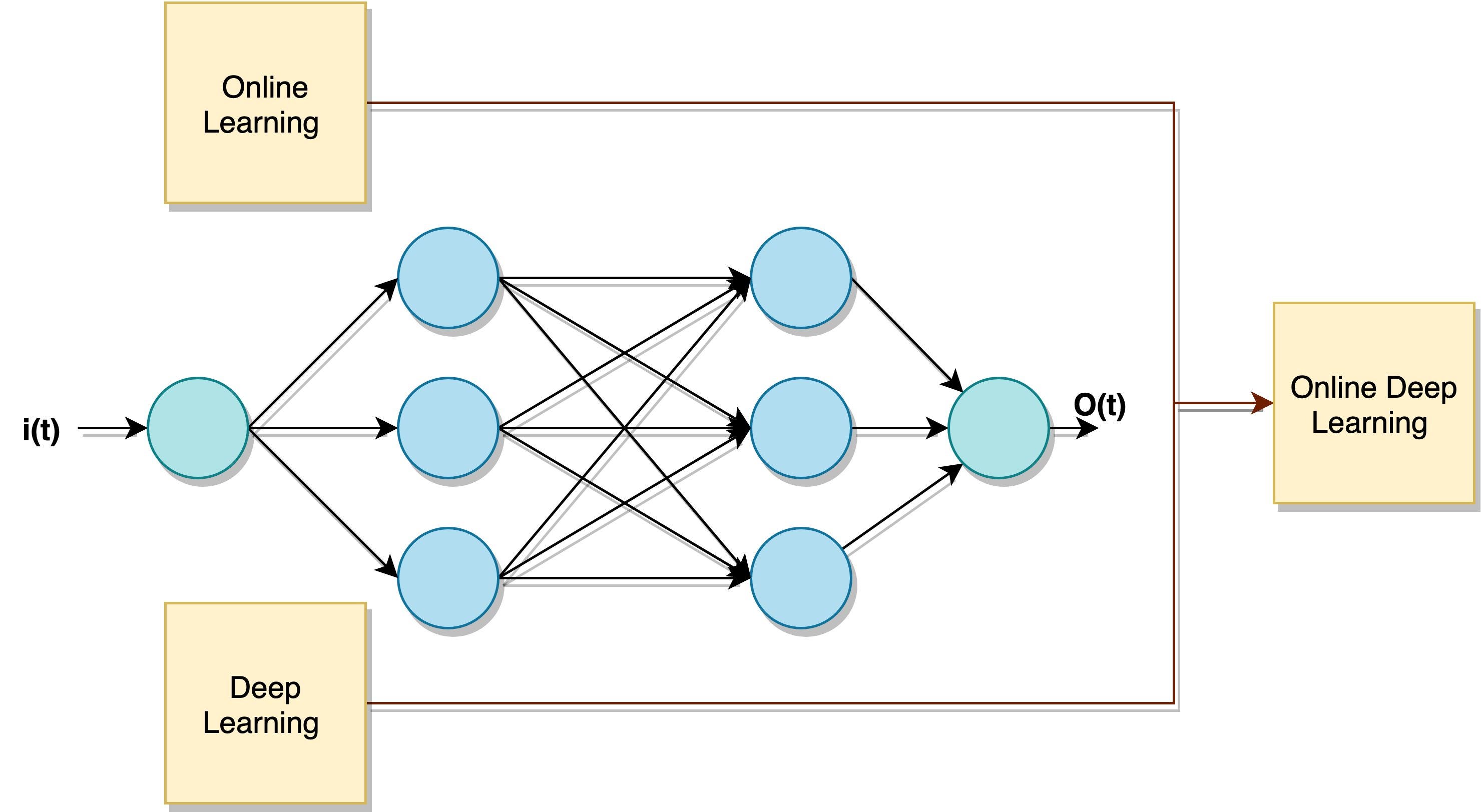

Deep neural networks are trained mainly through back-propagation in a batch setting, so the whole dataset must be available offline. As a consequence, this scheme is impractical in many real-world situations where the data arrives in sequence and cannot be stored, for example stock prices or vehicle positions. Online deep learning (ODL) is very challenging because the standard batch back-propagation scheme, with repeated passes over a stored dataset, is not available. Sahoo et al. (2018) addressed the gap between online learning and deep learning, arguing that "without the power of depth, it would be difficult to learn complex patterns". They presented a novel framework for ODL (to be reviewed later).

General Scheme (Or, 2020)

Overview of Online Learning (OL)

OL is an ML approach in which data arrives in sequential order and is used to predict future data at each time step. Moreover, in OL the predictor is updated in real time. According to Shai Shalev-Shwartz: "OL is the process of answering a sequence of questions given (maybe partial) knowledge of the correct answers to previous questions and possibly additional available information". The OL family includes online convex optimization (which leads to efficient algorithms), the limited-feedback model, in which the learner observes the loss value but not the true value, and more (I recommend reading ref [2] for more information).
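To make the predict, observe, update loop concrete, here is a minimal sketch of online gradient descent, one of the simplest algorithms in the online convex optimization family, applied to a linear predictor with squared loss. The linear model, the learning rate, and the `synthetic_stream` generator are illustrative assumptions, not part of the ODL framework reviewed later. Each example is used once and then discarded, so nothing beyond the current weights is stored.

```python
import random


def online_gradient_descent(stream, dim, lr=0.05):
    """Online gradient descent for a linear predictor with squared loss.

    Each (x_t, y_t) pair is seen exactly once: predict, observe y_t,
    suffer the loss, update. Only the current weights are kept.
    """
    w = [0.0] * dim
    losses = []
    for x, y in stream:
        # 1. Predict before the correct answer is revealed.
        y_hat = sum(wi * xi for wi, xi in zip(w, x))
        # 2. The correct answer is revealed; record the squared loss.
        err = y_hat - y
        losses.append(err ** 2)
        # 3. Gradient step on this single example: d/dw (err^2) = 2 * err * x.
        w = [wi - lr * 2 * err * xi for wi, xi in zip(w, x)]
    return w, losses


def synthetic_stream(n, true_w, seed=0):
    """Hypothetical data stream (assumption): y = true_w . x plus small noise."""
    rng = random.Random(seed)
    for _ in range(n):
        x = [rng.uniform(-1, 1) for _ in true_w]
        y = sum(a * b for a, b in zip(true_w, x)) + rng.gauss(0, 0.01)
        yield x, y


if __name__ == "__main__":
    true_w = [2.0, -1.0, 0.5]
    w, losses = online_gradient_descent(synthetic_stream(2000, true_w), dim=3)
    print("learned weights:", [round(v, 2) for v in w])
    print("mean loss, first 100 steps:", sum(losses[:100]) / 100)
    print("mean loss, last 100 steps:", sum(losses[-100:]) / 100)
```

Because the stream is a generator, the samples are never held in memory as a dataset, which mirrors the setting described above where data "cannot be stored". The per-step loss should shrink as the weights approach the generating ones.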
