As artificial intelligence (AI) models, especially those using deep learning, have gained prominence over the last eight or so years [8], they have come to significantly affect society, in applications ranging from loan decisions to self-driving cars. Most of these models are inherently opaque, however, so following their recommendations blindly in human-critical applications raises concerns about fairness, safety, and reliability, among many others. This has led to the emergence of a subfield of AI called explainable AI (XAI) [7]. XAI is primarily concerned with understanding or interpreting the decisions made by these opaque, or black-box, models so that one can trust them appropriately and, in some cases, achieve even better performance through human-machine collaboration [5].

While there are multiple views on what XAI is [12] and how explainability can be formalized [4, 6], it remains unclear what XAI truly is and why it is hard to formalize mathematically. The reason for this lack of clarity is that an explanation must account not only for the model and/or data but also for the final consumer of the explanation. Most XAI methods [11, 9, 3], given this intermingled view, try to meet all of these requirements at the same time. For example, many methods try to identify a sparse set of features that replicates the decision of the model; the sparsity is a proxy for the consumer’s mental model. An important question is whether we can disentangle the steps that XAI methods are trying to accomplish. Doing so may help us better understand which parts of XAI are truly challenging and which are simpler, and it may motivate different types of methods.

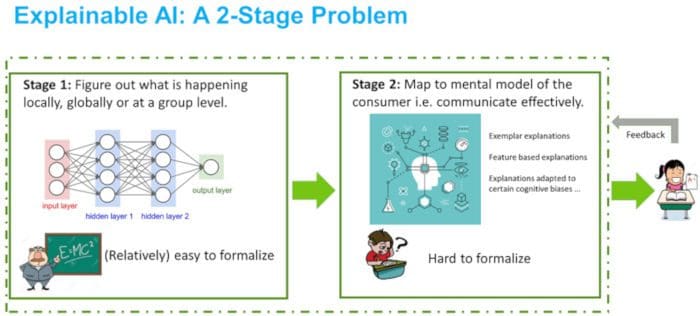

Two Stages of XAI

We conjecture that the XAI process can be broadly disentangled into two parts, as depicted in Figure 1. The first part is uncovering what is truly happening in the model that we want to understand, while the second part is conveying that information to the user in a consumable way. The first part is relatively easy to formalize, as it mainly involves analyzing how well a simple proxy model generalizes, either locally or globally, with respect to (w.r.t.) data generated using the black-box model. Rather than seeking generalization guarantees w.r.t. the underlying data distribution, we now want them w.r.t. the (conditional) output distribution of the model. Once we have some way of determining what is truly important, the second step is to communicate this information. This second part is much less clear, as we do not have an objective way of characterizing an individual’s mind. This part, we believe, is what makes explainability as a whole so challenging to formalize. A mainstay of much XAI research over the last year or so has been conducting user studies to evaluate new XAI methods.
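To make the first part concrete, the following is a minimal sketch, not the method of any cited work, of how a simple proxy's generalization w.r.t. a black box's outputs might be measured. Everything here is an illustrative assumption: the `black_box` function is a hypothetical stand-in for an opaque model, the proxy is a plain least-squares linear classifier, and "fidelity" is taken to mean the fraction of held-out points on which proxy and black box agree. The proxy is trained on labels produced by the black box rather than on ground-truth labels, once globally and once in a small neighborhood of a hypothetical `anchor` point.

```python
import numpy as np

def black_box(X):
    """Hypothetical opaque model: a nonlinear rule we pretend not to know."""
    return (X[:, 0] + 0.5 * X[:, 1] ** 2 > 0.5).astype(int)

def add_bias(X):
    """Append a constant column so the linear proxy can learn an intercept."""
    return np.hstack([X, np.ones((len(X), 1))])

def fit_linear_proxy(X, y):
    """Least-squares linear proxy fitted to the black box's outputs y."""
    w, *_ = np.linalg.lstsq(add_bias(X), y.astype(float), rcond=None)
    return w

def proxy_predict(w, X):
    """Threshold the linear score at 0.5 to obtain a class label."""
    return (add_bias(X) @ w > 0.5).astype(int)

def fidelity(w, X):
    """Fraction of points in X where the proxy agrees with the black box."""
    return float(np.mean(proxy_predict(w, X) == black_box(X)))

rng = np.random.default_rng(0)

# Global proxy: train and evaluate on samples from the whole input space.
X_train = rng.normal(size=(2000, 2))
X_test = rng.normal(size=(2000, 2))
w_global = fit_linear_proxy(X_train, black_box(X_train))
global_fidelity = fidelity(w_global, X_test)

# Local proxy: train and evaluate on perturbations around a single point.
anchor = np.array([0.5, 0.0])
X_loc_train = anchor + 0.1 * rng.normal(size=(2000, 2))
X_loc_test = anchor + 0.1 * rng.normal(size=(2000, 2))
w_local = fit_linear_proxy(X_loc_train, black_box(X_loc_train))
local_fidelity = fidelity(w_local, X_loc_test)

print(f"global fidelity: {global_fidelity:.3f}")
print(f"local fidelity:  {local_fidelity:.3f}")
```

Because fidelity is computed on held-out samples labeled by the black box, it estimates generalization w.r.t. the model's output distribution rather than the underlying data distribution. The second part, conveying the result to a person, has no comparable metric in this sketch.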
