So, you’re training a highly complex model that takes hours to fit. You don’t know how many epochs are enough and, even worse, it’s impossible to know in advance which variant will deliver the most reliable results!

You want to take a break, but the longer it takes to find the right set of parameters, the less time you have to deliver the project. So what can you do? Let’s dive in together.

Before We Start

To keep things simple, let’s go straight to the callback section, where we’ll train a Multilayer Perceptron. If you don’t know how to create a Deep Learning model, I suggest taking a look at my article below. It will help you build your first Neural Network.

The Keras Callbacks API

From checkpointing to early stopping, the Callbacks API offers useful hooks that run while our model trains. Today, let’s focus on just a few of them:

- EarlyStopping: Stops training when a monitored metric no longer improves.

- LearningRateScheduler: Updates the learning rate at the start of every epoch, based on a function you provide.

- ReduceLROnPlateau: Decreases the learning rate when a monitored metric stops improving.

- ModelCheckpoint: Saves a copy of the model or its weights for later use.

- TensorBoard: Writes logs while the model trains so the run can be inspected in TensorBoard.
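To make the LearningRateScheduler item more concrete: the callback expects a function that receives the epoch index (starting at 0) and the current learning rate, and returns the rate to use for that epoch. Here is a minimal sketch of such a function in plain Python. The name `step_decay`, the 10-epoch threshold, and the decay factor are illustrative assumptions, not values from this article.

```python
import math


def step_decay(epoch, lr):
    """Hypothetical schedule: hold the rate for 10 epochs, then decay it."""
    if epoch < 10:
        return lr  # epochs 0..9: keep the learning rate unchanged
    # From epoch 10 on: shrink the rate by a factor of exp(-0.1), roughly 10%.
    return lr * math.exp(-0.1)


# Simulate what the callback would do over 12 epochs, starting from 0.01.
lr = 0.01
for epoch in range(12):
    lr = step_decay(epoch, lr)
    print(f"epoch {epoch}: lr = {lr:.6f}")
```

With Keras, a function like this would be wrapped as `LearningRateScheduler(step_decay)` and passed in the `callbacks` list of `model.fit`.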

Every callback will be presented in the order: definition > code > parameters > output.

EarlyStopping

As the short explanation above suggests, our model will stop training when a specified condition is met. In our case, this happens once the loss has gone three consecutive epochs without improving. Let’s check our code.

from tensorflow.keras.callbacks import EarlyStopping

# Let's create our callback.

# EarlyStopping(monitor, patience, mode)

early_stopping = EarlyStopping(monitor='loss', patience=3, mode='min')

# Let's fit our model with the callback

# model.fit(X, y, batch_size, epochs, verbose, callbacks)

model.fit(X_train, y_train, batch_size=32, epochs=100, verbose=2, callbacks=[early_stopping])

EarlyStopping works mainly with three parameters: monitor, patience, and mode. The first defines which quantity we watch to decide when to interrupt training. The second is the number of epochs without improvement that we tolerate before stopping. Finally, mode sets which direction counts as an improvement: 'min' when the monitored value should decrease (such as a loss), 'max' when it should increase (such as accuracy).
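To see how patience and mode interact, here is a small plain-Python sketch of the stopping rule for mode='min'. It is a simplified illustration, not Keras’s actual implementation: it ignores options such as min_delta and restore_best_weights, and the function name `epochs_until_stop` and the loss values are made up for the example.

```python
def epochs_until_stop(losses, patience=3):
    """Return how many epochs run before a patience-based stop (mode='min')."""
    best = float("inf")  # lowest loss seen so far
    wait = 0             # epochs elapsed since the last improvement
    for epoch, loss in enumerate(losses, start=1):
        if loss < best:  # strictly lower counts as an improvement
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch  # training stops at the end of this epoch
    return len(losses)  # the rule never triggered: every epoch ran


# Hypothetical per-epoch losses: improvement stalls after epoch 4.
losses = [0.90, 0.70, 0.60, 0.55, 0.56, 0.55, 0.57, 0.50, 0.40]
print(epochs_until_stop(losses, patience=3))  # prints 7
print(epochs_until_stop(losses, patience=5))  # prints 9
```

Note the trade-off: with a patience of 3 the run ends at epoch 7 and never reaches the lower losses at epochs 8 and 9, while a patience of 5 lets training continue through the plateau.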

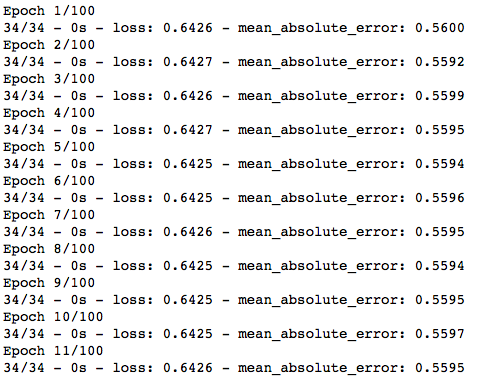

As you can see, our model stops training after a few epochs, even though we allowed up to 100. This is useful for highly complex projects, where a full run can take hours to complete.
