A beginner’s guide to the basic concepts of Apache Airflow

This memo shares what I have learnt about Apache Airflow, capturing the course's learning objectives along with my personal notes. The course is taught by Mike Metzger at DataCamp and includes four chapters:

Chapter 1. Intro to Airflow

Chapter 2. Implementing Airflow DAGs

Chapter 3. Maintaining and monitoring Airflow workflows

Chapter 4. Building production pipelines in Airflow

Photo by Jacek Dylag on Unsplash

A data engineer’s job includes writing scripts, adding complex cron tasks, and trying various ways to meet an ever-changing set of requirements so that data is delivered on schedule. Airflow can handle all of this while adding scheduling, error handling, and reporting. This course guides you through the basic concepts of Airflow and helps you implement data engineering workflows in production, so that you can run many different data engineering tasks in a predictable and repeatable fashion.

Chapter 1. Intro to Airflow

Data engineering is taking any action involving data and turning it into a reliable, repeatable, and maintainable process.

A **workflow** is a set of steps that accomplish a given data engineering task, such as downloading files, copying data, filtering information, or writing to a database.
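To make the definition concrete, here is a minimal plain-Python sketch (no Airflow involved) of a three-step workflow: download, filter, write to a database. The function names, the sample records, and the in-memory SQLite table are hypothetical; the point is only that a workflow is an ordered set of steps, each consuming the previous step's output.

```python
import sqlite3


# Hypothetical steps; each stands in for a real data engineering task.
def download_file() -> list[dict]:
    # Stand-in for a download: return a few in-memory records
    return [
        {"city": "Oslo", "temp_c": 4},
        {"city": "Cairo", "temp_c": 31},
        {"city": "Lima", "temp_c": 19},
    ]


def filter_records(records: list[dict], min_temp: int) -> list[dict]:
    # Keep only the records at or above the temperature threshold
    return [r for r in records if r["temp_c"] >= min_temp]


def write_to_db(records: list[dict], conn: sqlite3.Connection) -> int:
    # Write the filtered records into a table and return the table's row count
    conn.execute("CREATE TABLE IF NOT EXISTS weather (city TEXT, temp_c INTEGER)")
    conn.executemany(
        "INSERT INTO weather (city, temp_c) VALUES (:city, :temp_c)", records
    )
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM weather").fetchone()[0]


def run_workflow(conn: sqlite3.Connection) -> int:
    # The workflow itself: run the steps in order, chaining their outputs
    raw = download_file()
    warm = filter_records(raw, min_temp=15)
    return write_to_db(warm, conn)


conn = sqlite3.connect(":memory:")
print(run_workflow(conn))  # 2
```

Run by hand like this, nothing retries a failed step or runs the workflow on a schedule; that is the gap Airflow fills.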

**Airflow** is a platform that lets you:

· program workflows, including their creation, scheduling, and monitoring

· implement workflows as DAGs (Directed Acyclic Graphs)

· work with those workflows via code, the command line (CLI), or a web user interface (UI)

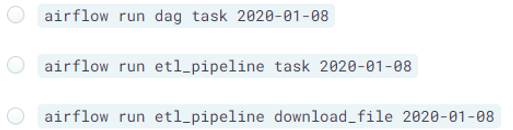

Running a task in Airflow

You’ve just started looking at using Airflow within your company and would like to try running a task on the Airflow platform. You remember that you can use the airflow run command to execute a specific task within a workflow.

Note that if airflow run fails, the last line of its output will start with airflow.exceptions.AirflowException:.

An Airflow DAG is set up for you with a dag_id of etl_pipeline. The task_id is download_file and the start_date is 2020-01-08. Which command would you enter in the console to run the desired task?

Answer: airflow run etl_pipeline download_file 2020-01-08

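Syntax: airflow run <dag_id> <task_id> <execution_date> (this is the Airflow 1.x form used in the course; in Airflow 2.x the equivalent command is airflow tasks run)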
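A common way to break this command is pasting a date whose hyphens were turned into look-alike en dashes by a text editor or blog platform. The sketch below is a hypothetical helper (not part of Airflow) that assembles the Airflow 1.x argument list in the order shown above and validates the date with Python's `date.fromisoformat`, which rejects such characters.

```python
from datetime import date


def build_airflow_run_args(dag_id: str, task_id: str, execution_date: str) -> list[str]:
    """Hypothetical helper: argv for the Airflow 1.x `airflow run` command."""
    # fromisoformat raises ValueError for malformed dates, including
    # look-alike dash characters copied from formatted text.
    parsed = date.fromisoformat(execution_date)
    # Positional order: dag_id, task_id, execution date
    return ["airflow", "run", dag_id, task_id, parsed.isoformat()]


args = build_airflow_run_args("etl_pipeline", "download_file", "2020-01-08")
print(" ".join(args))  # airflow run etl_pipeline download_file 2020-01-08
```

The resulting list could be passed to `subprocess.run` on a machine where Airflow 1.x is installed; on Airflow 2.x the second element would need to become `"tasks", "run"`.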

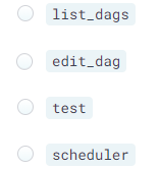

Examining Airflow commands

While researching how to use Airflow, you start to wonder about the airflow command in general. You realize that by simply running airflow you can get further information about various sub-commands that are available.

Which of the following is NOT an Airflow sub-command?

Answer: edit_dag

Run airflow -h to list the available sub-commands, or airflow <sub-command> -h (for example, airflow run -h) to get help on a specific one.

Airflow DAGs

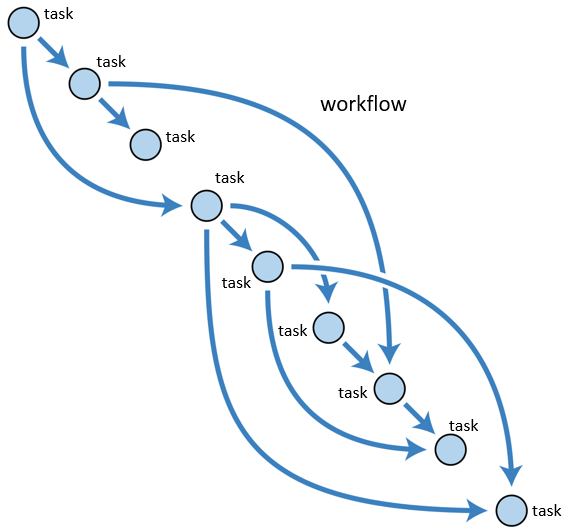

A DAG (Directed Acyclic Graph) is:

· Directed: there is an inherent flow, representing the dependencies between components

· Acyclic: it does not loop, cycle, or repeat

· Graph: it is the actual set of components

Directed Acyclic Graph (DAG), image by the author
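The "directed" and "acyclic" properties are what make a run order possible: because every edge points one way and no path leads back to its start, the tasks can always be lined up so that each runs after its upstream tasks. The sketch below illustrates this with Kahn's topological sort over a plain dictionary. It is not Airflow's internal code, and apart from download_file the task names are hypothetical.

```python
from collections import deque


def topological_order(deps: dict[str, list[str]]) -> list[str]:
    """Return the tasks in an order that respects every dependency.

    deps maps each task to its downstream tasks (those that must run after it).
    Raises ValueError if the graph contains a cycle, i.e. it is not a DAG.
    """
    # Collect every node, including ones that only appear as downstream tasks
    nodes = set(deps) | {t for targets in deps.values() for t in targets}
    # Count incoming edges (upstream dependencies) for each task
    indegree = {n: 0 for n in nodes}
    for targets in deps.values():
        for t in targets:
            indegree[t] += 1
    # Start with the tasks that have no upstream dependencies (sorted for a stable result)
    ready = deque(sorted(n for n in nodes if indegree[n] == 0))
    order = []
    while ready:
        task = ready.popleft()
        order.append(task)
        # "Complete" this task: its downstream tasks lose one pending dependency
        for t in sorted(deps.get(task, [])):
            indegree[t] -= 1
            if indegree[t] == 0:
                ready.append(t)
    # Tasks that never became ready sit on a cycle
    if len(order) != len(nodes):
        raise ValueError("cycle detected: this graph is not a DAG")
    return order


pipeline = {
    "download_file": ["clean_data"],
    "clean_data": ["write_to_db", "send_report"],
}
print(topological_order(pipeline))
# ['download_file', 'clean_data', 'send_report', 'write_to_db']
```

Adding an edge such as `"write_to_db": ["download_file"]` would create a loop, and the function would raise instead of returning an order, which is exactly why a workflow graph has to be acyclic.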

Defining a simple DAG

You’ve spent some time reviewing the Airflow components and are interested in testing out your own workflows. To start, you decide to define the default arguments and create a DAG object for your workflow.

```python
# Import datetime (needed for start_date) and the DAG object
from datetime import datetime

from airflow.models import DAG

# Define the default_args dictionary
default_args = {
    'owner': 'dsmith',
    'start_date': datetime(2020, 1, 14),
    'retries': 2,
}

# Instantiate the DAG object; 'example_etl' is its dag_id
etl_dag = DAG('example_etl', default_args=default_args)
```

Syntax: dag_variable = DAG('dag_name', default_args=default_args)
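In Airflow, the default_args dictionary supplies default constructor arguments to every task (operator) attached to the DAG, and any argument set explicitly on a task takes precedence. The dictionary merge below is only a simplified model of that precedence rule, not Airflow's implementation; the helper name, the task IDs, and the override value are hypothetical.

```python
from datetime import datetime

# Same default_args as in the example_etl DAG
default_args = {
    "owner": "dsmith",
    "start_date": datetime(2020, 1, 14),
    "retries": 2,
}


def effective_task_args(default_args: dict, **task_args) -> dict:
    """Simplified model (not Airflow code): task-level arguments override DAG defaults."""
    # Later keys win in a dict merge, so explicit task arguments replace defaults
    return {**default_args, **task_args}


# A hypothetical task that keeps the defaults
print(effective_task_args(default_args, task_id="download_file")["retries"])  # 2
# A hypothetical task that overrides retries
print(effective_task_args(default_args, task_id="write_to_db", retries=5)["retries"])  # 5
```

Keeping shared settings such as the owner and retry count in default_args avoids repeating them on every task, while still allowing a single task to opt out.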
