Introduction

Linear algebra is a branch of mathematics that is extremely useful in data science and machine learning. Most machine learning models can be expressed in matrix form. Because data science deals with real-world measurements, the data matrices are typically real-valued, and many of the key matrices derived from them are both real and symmetric. There are some exceptions to this. In more advanced applications such as image processing, Fourier analysis is heavily used, so one can easily encounter matrices defined over the complex numbers. Other than that, for most basic data science and machine learning problems, the key matrices encountered are real and symmetric.

In this article, we will consider three examples of real and symmetric matrices that we often encounter in data science and machine learning, namely, the regression matrix (R), the covariance matrix, and the linear discriminant analysis matrix (L).

Example 1: Linear Regression Matrix

Suppose we have a dataset that has 4 predictor features and n observations as shown below.

Table 1. Features matrix with 4 variables and n observations. Column 5 is the target variable (y).

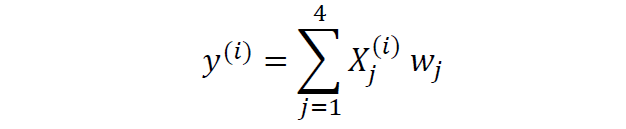

We would like to build a multiple regression model for predicting the y values (column 5). Our model can thus be expressed in the form

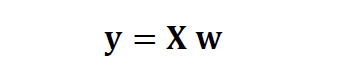

In matrix form, this equation can be written as

$$X w = y$$

where X is the (n x 4) features matrix, w is the (4 x 1) matrix representing the regression coefficients to be determined, and y is the (n x 1) matrix containing the n observations of the target variable y.
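Written out in full, the matrix form might look like the following. This is a sketch that assumes the matrix equation is Xw = y with no intercept term; the symbol x_{ij}, the value of feature j in observation i, is notation introduced here for illustration only.

$$
\begin{pmatrix}
x_{11} & x_{12} & x_{13} & x_{14} \\
x_{21} & x_{22} & x_{23} & x_{24} \\
\vdots & \vdots & \vdots & \vdots \\
x_{n1} & x_{n2} & x_{n3} & x_{n4}
\end{pmatrix}
\begin{pmatrix}
w_1 \\ w_2 \\ w_3 \\ w_4
\end{pmatrix}
=
\begin{pmatrix}
y_1 \\ y_2 \\ \vdots \\ y_n
\end{pmatrix}
$$

Each row is one observation, so the system has n equations but only 4 unknowns.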

Note that X is a rectangular matrix (n x 4, with n generally not equal to 4), so it has no inverse and we can’t solve the equation above by inverting X.
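To make this concrete, here is a minimal NumPy sketch of one common way around the problem: multiply both sides by the transpose of X and solve the resulting square system. It assumes the matrix equation is Xw = y and that the regression matrix R stands for X^T X; that reading, the synthetic data, and the names `w_true`, `w_hat`, and `w_lstsq` are assumptions made for illustration, not taken from the text above.

```python
import numpy as np

# Synthetic data, purely illustrative: n observations of 4 features.
rng = np.random.default_rng(0)
n = 50
X = rng.normal(size=(n, 4))                 # (n x 4) features matrix
w_true = np.array([1.5, -2.0, 0.5, 3.0])    # hypothetical coefficients
y = X @ w_true                              # noise-free target values

# X is rectangular, so np.linalg.inv(X) would raise a LinAlgError.
# Assumed construction: R = X^T X is square (4 x 4), real, and symmetric.
R = X.T @ X
print(R.shape)                # (4, 4)
print(np.allclose(R, R.T))    # True

# Solve the square system R w = X^T y for the coefficients.
w_hat = np.linalg.solve(R, X.T @ y)

# Cross-check against NumPy's built-in least-squares solver.
w_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.allclose(w_hat, w_lstsq))
```

In practice `np.linalg.lstsq` is usually the safer choice numerically, since explicitly forming X^T X can amplify rounding errors when the features are nearly collinear.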
