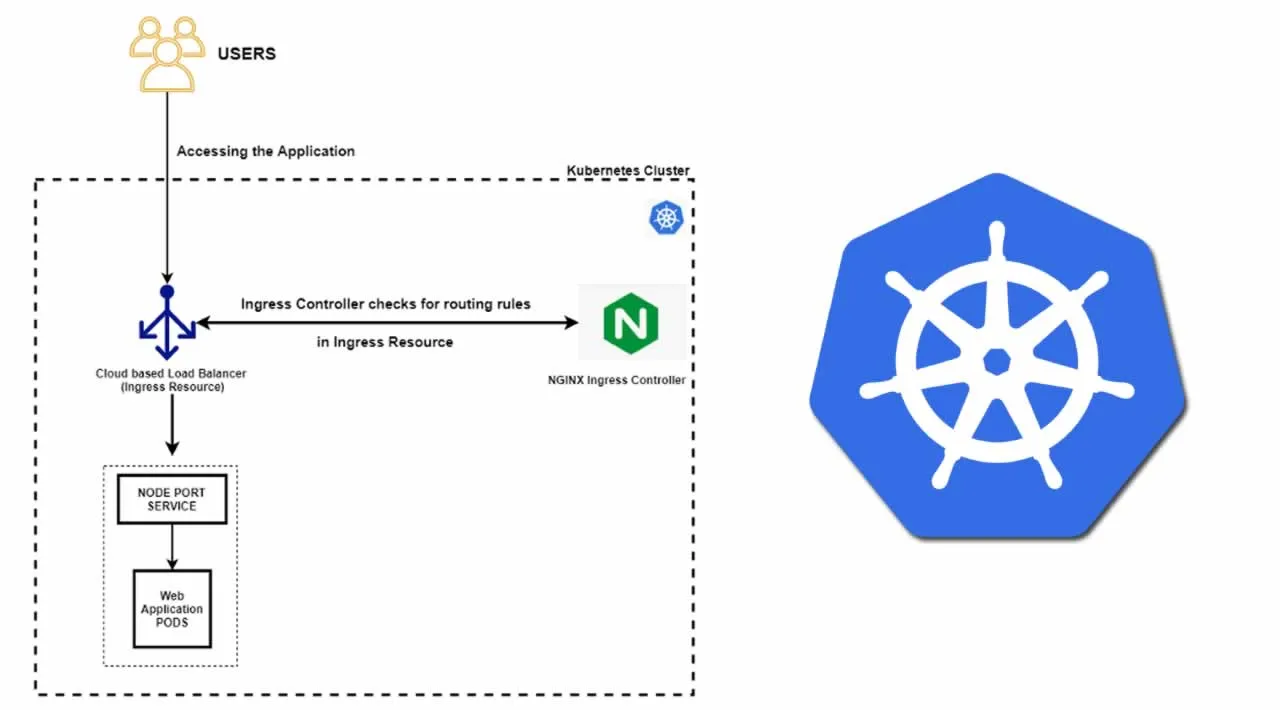

The demo aims at running an application in Kubernetes behind a cloud-managed public load balancer, also known as an HTTP(S) load balancer, which in Kubernetes terminology is an **Ingress resource**. For this demo, I will be using Google Kubernetes Engine (GKE). Instead of using the default ingress controller that GCP provides, I will create an NGINX ingress controller for the Ingress resource to use. With this NGINX ingress controller, we will allow specific IP addresses and block all others from accessing our application running in GKE. Before we start with the implementation, let us revise some of the prerequisites.

What is Ingress in Kubernetes?

In Kubernetes, an Ingress is an object (a resource) that allows access to your Kubernetes services from outside the cluster. You configure access by creating a collection of rules that define which inbound connections can reach which services. In GKE, when we specify kind: Ingress in the resource manifest, GKE creates an Ingress resource and makes the appropriate Google Cloud API calls to create an external HTTP(S) load balancer. The load balancer's URL map contains host rules and path matchers that refer to one or more backend services, where each backend service corresponds to a GKE Service of type NodePort, as referenced in the Ingress.
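To make the idea of rules concrete, here is a minimal sketch of an Ingress manifest with one host rule and one path, plus the NodePort Service it points at. The names `demo-ingress`, `demo-service`, the `app: demo` label, the host `demo.example.com`, and the ports are placeholders I made up for illustration; they are not taken from the demo itself.

```yaml
# Hypothetical Service of type NodePort that the Ingress routes to.
apiVersion: v1
kind: Service
metadata:
  name: demo-service          # placeholder name
spec:
  type: NodePort
  selector:
    app: demo                 # placeholder label on the application Pods
  ports:
    - port: 80                # port the Service exposes
      targetPort: 8080        # assumed container port
---
# Ingress with a single host rule and a single path match.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress          # placeholder name
spec:
  rules:
    - host: demo.example.com  # host rule (placeholder domain)
      http:
        paths:
          - path: /           # path match
            pathType: Prefix
            backend:
              service:
                name: demo-service
                port:
                  number: 80
```

Each entry under `rules` pairs a host with one or more paths, and every path names the Service that should receive the matching traffic.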

But then……

What is an Ingress Controller in Kubernetes?

For the Ingress resource to work, the cluster must have an ingress controller running. Multiple ingress controllers are available and can be used with the Ingress resource, e.g. NGINX Ingress Controller, HAProxy Ingress Controller, Traefik, Contour, etc.

We will be using the NGINX Ingress Controller for the demo.
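As a rough preview of where this is heading, the sketch below shows an Ingress that is handed to the NGINX controller and restricted to a list of allowed source IPs. It assumes the community `ingress-nginx` controller, registered under the ingress class name `nginx`, and uses its `nginx.ingress.kubernetes.io/whitelist-source-range` annotation. The resource names, the host, and the CIDR ranges (taken from the reserved documentation ranges) are placeholders, not values from the demo.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress                 # placeholder name
  annotations:
    # Comma-separated CIDRs that may reach the application.
    # Requests from any other source address are rejected by NGINX.
    nginx.ingress.kubernetes.io/whitelist-source-range: "203.0.113.0/24,198.51.100.7/32"
spec:
  ingressClassName: nginx            # assumes the controller's class is named "nginx"
  rules:
    - host: demo.example.com         # placeholder domain
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: demo-service   # placeholder Service name
                port:
                  number: 80
```

Setting `ingressClassName` is what tells the cluster that the NGINX controller, rather than the default GKE one, should act on this Ingress.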

So now……
