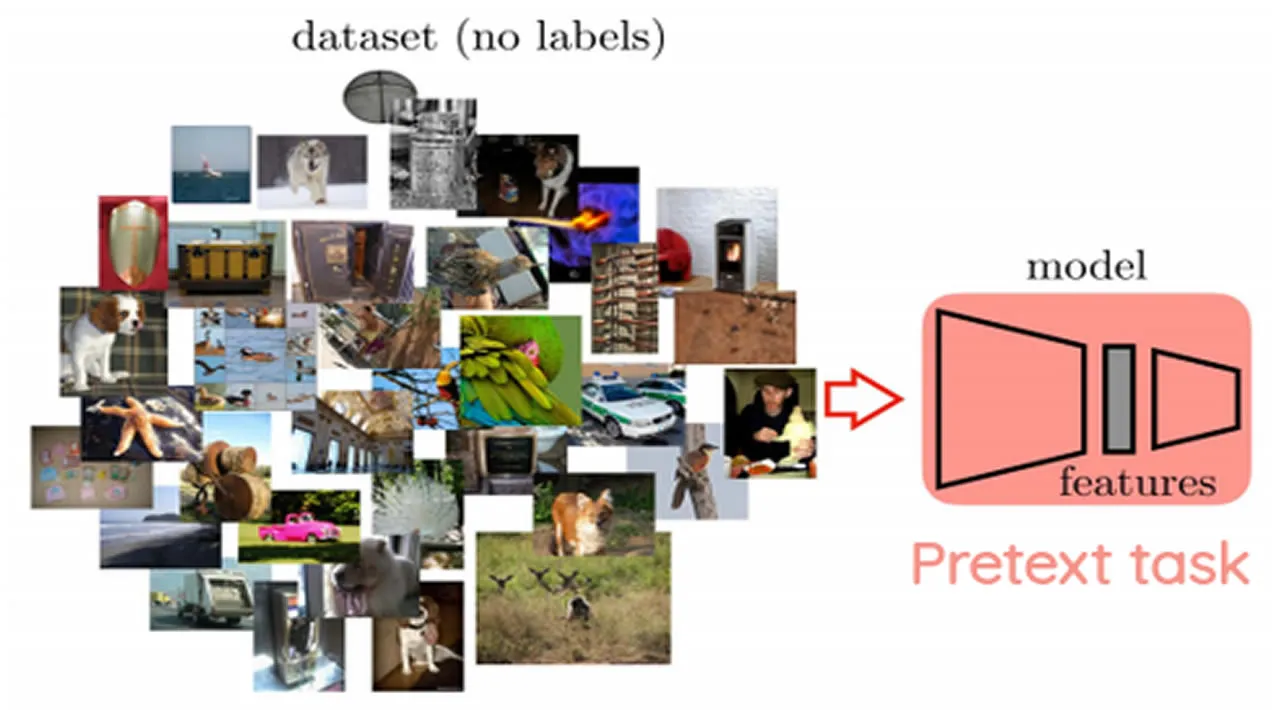

Self-supervised learning is a research area whose goal is to learn rich representations from unlabeled data, without any human annotation.

This is achieved by formulating a problem in which parts of the data itself serve as labels, and a model is trained to predict those parts. Such formulations are called pretext tasks.

For example, you can set up a pretext task that predicts the color version of an image given its grayscale version. Similarly, you could remove a part of the image and train a model to predict the missing part from its surroundings. There are many such pretext tasks.
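A minimal sketch of how the two pretext tasks above turn one unlabeled image into an (input, label) pair. It assumes images are NumPy float arrays of shape H x W x 3 with values in [0, 1]. The function names `colorization_pair` and `inpainting_pair` are hypothetical, the BT.601 grayscale weights are one common choice, and filling the removed region with zeros is an arbitrary choice; none of these come from the post.

```python
import numpy as np


def colorization_pair(rgb: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Colorization pretext task: input is the grayscale image, label is the color image."""
    # ITU-R BT.601 luma weights (assumed; any RGB -> gray conversion would do)
    weights = np.array([0.299, 0.587, 0.114], dtype=rgb.dtype)
    gray = rgb @ weights  # (H, W, 3) @ (3,) -> (H, W)
    return gray, rgb


def inpainting_pair(
    rgb: np.ndarray, top: int, left: int, size: int
) -> tuple[np.ndarray, np.ndarray]:
    """Inpainting pretext task: input has a square hole, label is the removed patch."""
    # The label is the patch we cut out of the image
    target = rgb[top:top + size, left:left + size].copy()
    # The input is the same image with that patch blanked out (zeros, by assumption)
    masked = rgb.copy()
    masked[top:top + size, left:left + size] = 0.0
    return masked, target


# One random "unlabeled" image stands in for real data
rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))

gray, color = colorization_pair(image)
masked, patch = inpainting_pair(image, top=8, left=8, size=8)
```

In both cases no human provides the label: it is cut directly out of the image. A model trained on many such pairs is pushed to learn features (object shapes, typical colors, texture continuity) that are useful beyond the pretext task itself.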
