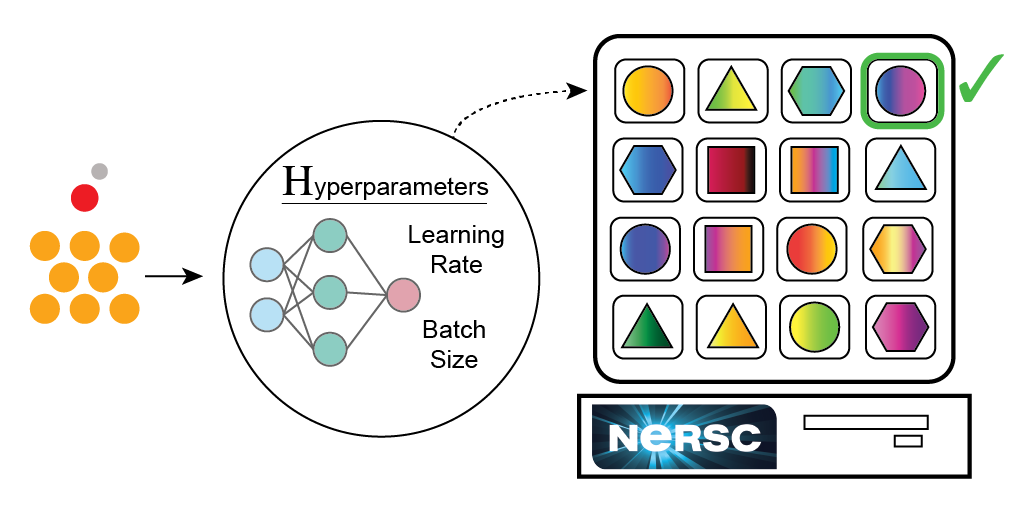

Despite the tremendous success of machine learning (ML), modern algorithms still depend on a variety of free, non-trainable hyperparameters. Ultimately, our ability to select good hyperparameters governs the performance of a given model. In the past, and to some extent still today, hyperparameters were hand-selected through trial and error. An entire field, referred to as hyperparameter optimization (HPO), is dedicated to improving this selection process. Inherently, HPO requires testing many different hyperparameter configurations, and as a result it can benefit tremendously from massively parallel resources like the Perlmutter system we are building at the National Energy Research Scientific Computing Center (NERSC). As we prepare for Perlmutter, we wanted to explore the many existing HPO frameworks and strategies by applying them to a model of interest. This article is a product of that exploration and is intended to provide an introduction to HPO methods and guidance on running HPO at scale, based on my recent experiences and results.

Disclaimer: this article contains plenty of general information about HPO that is not specific to any software, but it is biased toward free, open-source software that is applicable to our systems at NERSC.

In this article, we will cover …

- Scalable HPO with Ray Tune

- Schedulers vs Search Algorithms

- Not All Hyperparameters Can Be Treated the Same

- Time-to-Solution Study

- Optimal Scheduling with PBT

- Cheat Sheet for Selecting an HPO Strategy

- Technical Tips: Ray Tune, Dragonfly, Slurm, TB, W&B

- Key Takeaways
