Learn How To Build A Neural Network Model With No Machine Learning Package

One of the most popular requests I get from readers who love the “building algorithms from scratch” series is coverage of deep learning or neural networks. Neural network models can be quite complex, but at their core most architectures share a common base from which newer ideas have emerged. This core architecture (which I refer to as a Vanilla Neural Network) is the main focus of this post. The aim is to build a vanilla neural network WITHOUT any ML package, implementing the underlying mathematical concepts directly in Julia (a language I feel is the perfect companion for numerical computing). The goal is to use this from-scratch model to solve a binary classification problem.

Brief Background On Neural Networks

So what exactly is a neural network? The genesis of neural networks is widely attributed to a 1943 paper by Warren McCulloch (a neurophysiologist) and Walter Pitts (a mathematician). In this paper, they modelled a simple neural network using electrical circuits, inspired by how the human brain works.

What Exactly Is A Neural Network?

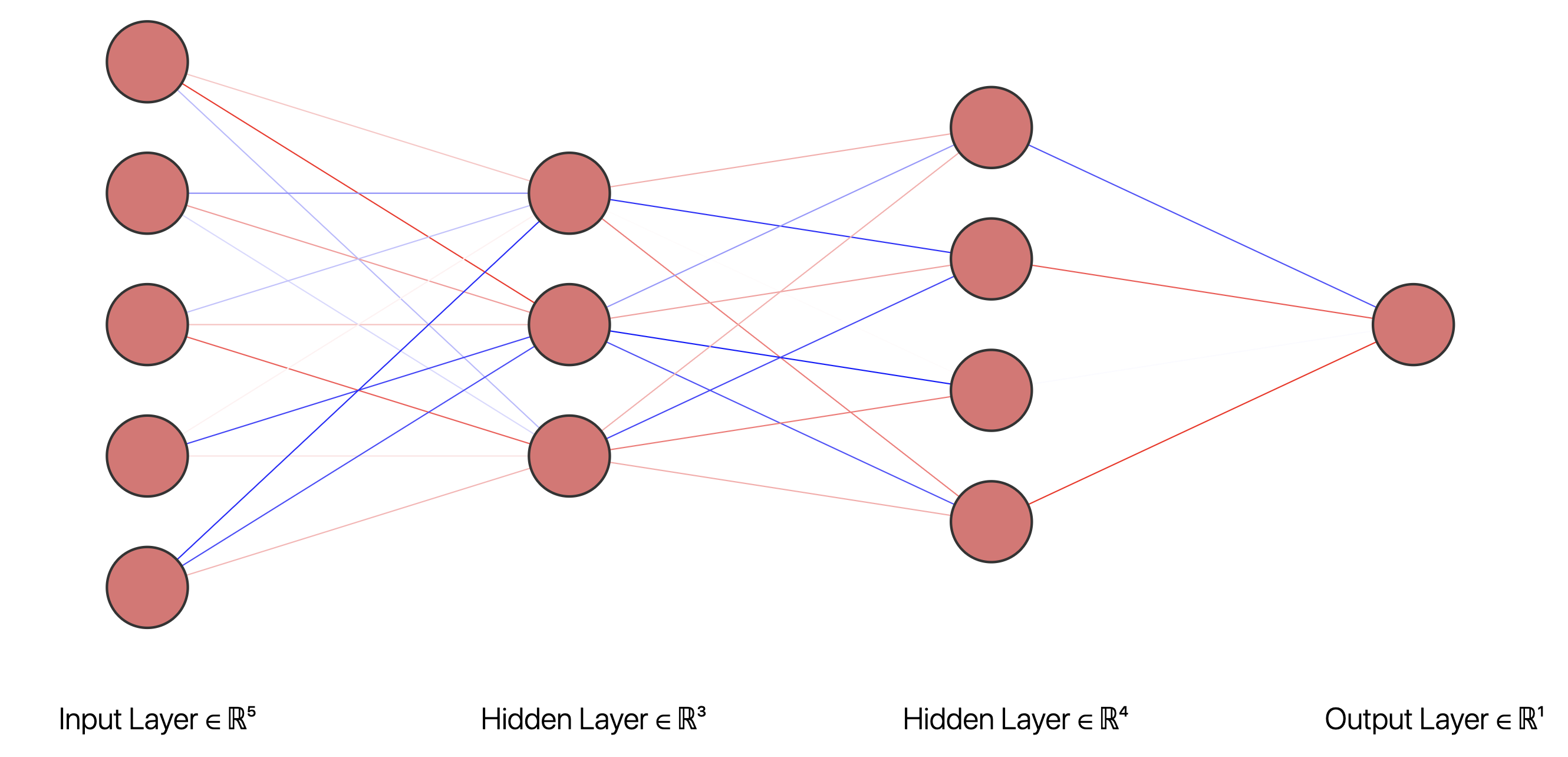

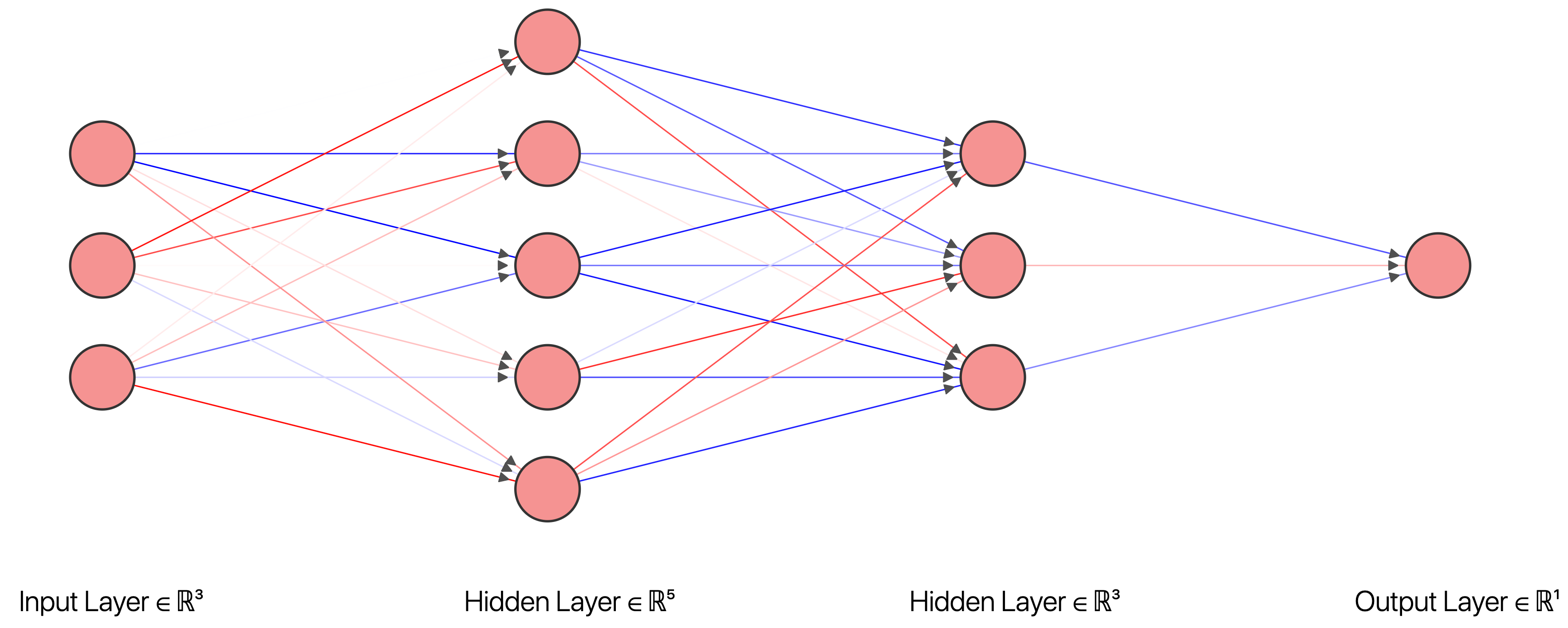

At a basic level, neural networks can be seen as systems that take inspiration from how biological neurons share information with each other in order to discover patterns within supplied data. Neural networks are typically composed of interconnected layers. The layers of a vanilla neural network are:

- Input Layer

- Hidden Layer(s)

- Output Layer(s)

The input layer accepts the raw data, which is passed on to the hidden layer(s), whose job is to find underlying patterns in that data. Finally, the output layer(s) combine these learned patterns and produce a predicted value.

Image generated from NN-SVG

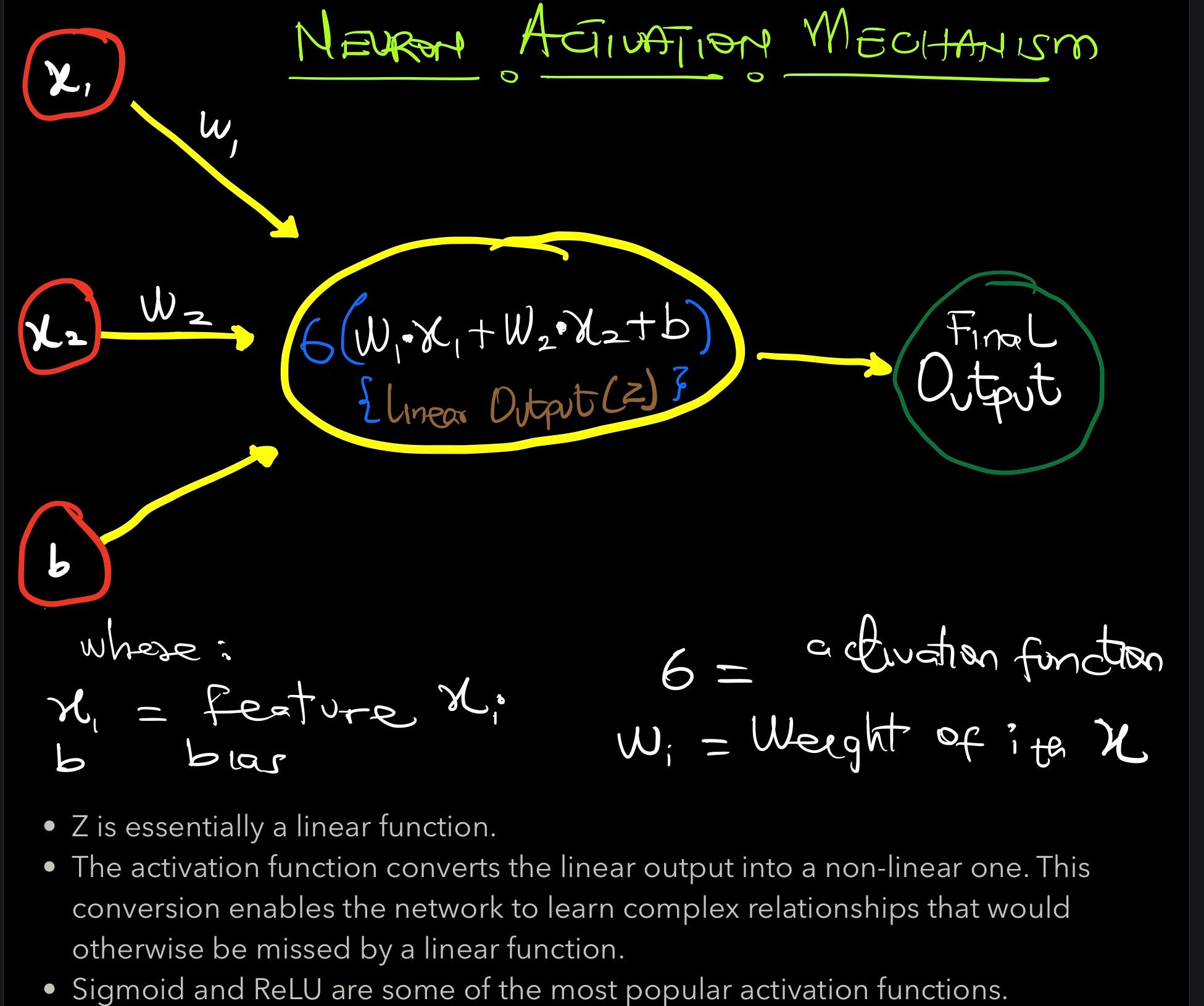

How do these interconnected layers share information? At the heart of neural networks is the concept of the neuron. All these layers have one thing in common: they are composed of neurons. These neurons are responsible for transmitting information across the network, but what mechanism do they use to do it?

Neurons — The Building Block Of Neural Networks

Instead of pouring out machine learning theory to explain this mechanism, let us explain it with a simple yet practical example involving a single neuron. Assume we want to train a neural network to distinguish between a dolphin and a shark. Luckily for us, we have thousands or even millions of records gathered from dolphins and sharks around the world. For simplicity’s sake, let’s say the only variables recorded for each observation are ‘size (X1)’ and ‘weight (X2)’. Every neuron has its own parameters, ‘weights (W1)’ and ‘bias (b1)’, which it uses to process the incoming data (X1 and X2) before sharing the output of this process with the next neuron. This process is visually represented below:
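The single-neuron computation described above can be sketched in a few lines of Julia. This is a minimal sketch under assumptions that are not in the original: the neuron forms a weighted sum of its inputs plus its bias and then applies a sigmoid activation, and the feature values, weights and bias are made-up numbers chosen purely for illustration.

```julia
# Hypothetical example: one neuron with two inputs, size (X1) and weight (X2).
# Sigmoid activation (an assumption here; other activations are possible).
sigmoid(z) = 1 / (1 + exp(-z))

# Made-up, illustrative values (not real dolphin/shark measurements)
x  = [2.5, 150.0]      # [size (X1), weight (X2)]
W1 = [0.4, -0.01]      # one weight per input feature
b1 = 0.1               # the neuron's bias

# Weighted sum of the inputs plus the bias
z = sum(W1 .* x) + b1

# The activation squashes z into (0, 1); this is what the neuron passes on
a = sigmoid(z)

println("z = ", z, ", a = ", a)
```

With these numbers z works out to about -0.4 and a to roughly 0.40. With a sigmoid output, one could read values above 0.5 as one class and values below as the other.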

Image copyrights owned by author

A typical network is composed of many layers of neurons, and large models can have billions of parameters (about 175 billion in the case of GPT-3). The weights and biases of all these neurons make up the parameters of a vanilla neural network. OK, we know how a single neuron works, but how does an army of neurons learn to make good predictions on supplied data?
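To make “the parameters of a network” concrete, here is a small sketch that counts them for a fully connected network. A layer with n_l neurons fed by n_(l-1) inputs holds an n_l × n_(l-1) weight matrix plus n_l biases. The helper name `count_parameters` and the example layer sizes are hypothetical, chosen only for illustration.

```julia
# Hypothetical helper: total number of weights and biases in a fully
# connected network described by its layer sizes (input layer first).
function count_parameters(layer_dims::Vector{Int})
    total = 0
    for l in 2:length(layer_dims)
        weights = layer_dims[l] * layer_dims[l-1]  # one weight per connection
        biases  = layer_dims[l]                    # one bias per neuron
        total  += weights + biases
    end
    return total
end

# 2 input features, hidden layers of 4 and 3 neurons, 1 output neuron
println(count_parameters([2, 4, 3, 1]))
```

For the layer sizes [2, 4, 3, 1] this prints 31 (12 + 15 + 4).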

Main Components Of A Vanilla Neural Network

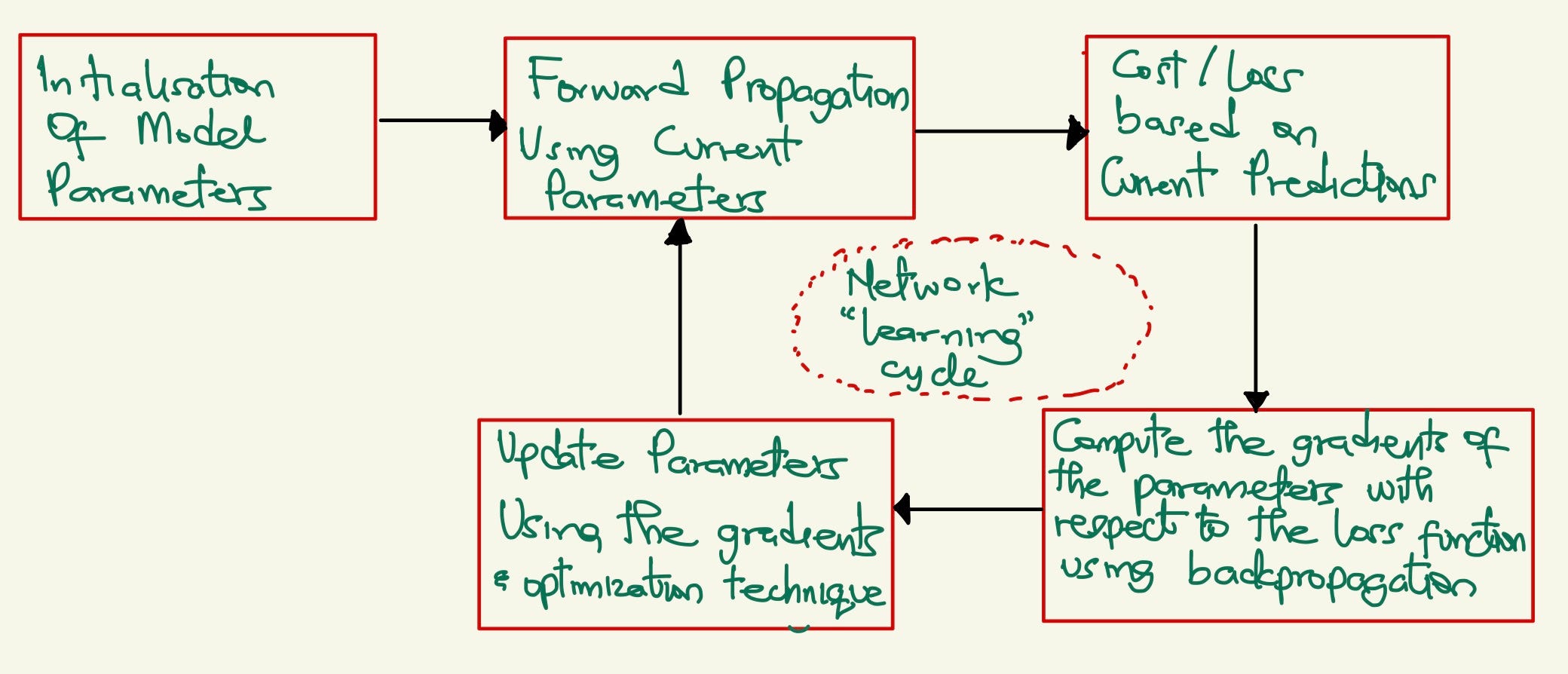

To understand this learning process, we will compose our own neural network architecture to solve the task at hand. To do this, we need to break down the sequence of operations within a typical vanilla network into 5 components:

Image copyrights owned by author

The nature of the problem a network is designed to solve normally dictates the range of choices available for these 5 components. In a way, these networks are like LEGO systems, where users plug in different activation functions, loss/cost functions and optimization techniques. It is no surprise that deep learning is one of the most experimental fields in scientific computing. With all this in mind, let us now move on to the fun part and implement our network to solve the binary classification task.
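The LEGO analogy can be illustrated by passing the activation function into a layer as an ordinary argument. This is a minimal sketch: the `dense_layer` helper is hypothetical, the weights are illustrative values, and sigmoid, ReLU and tanh are simply three common choices, not necessarily the ones this post goes on to use.

```julia
# Two common activation functions; tanh already ships with Julia's Base
sigmoid(z) = 1 / (1 + exp(-z))
relu(z)    = max(zero(z), z)

# Hypothetical dense layer: affine transform followed by an element-wise
# activation, which is swapped in as a plain function argument
dense_layer(W, b, A_prev, activation) = activation.(W * A_prev .+ b)

W = [1.0 -1.0; 0.5 2.0]   # 2 neurons, 2 inputs (illustrative values)
b = [0.0, -1.0]           # one bias per neuron
x = [1.0, 2.0]            # a single input example

# Same layer, three different "LEGO bricks" plugged in
for f in (sigmoid, relu, tanh)
    println(f, " => ", dense_layer(W, b, x, f))
end
```

Because Julia functions are first-class values, changing the activation means changing one argument rather than rewriting the layer.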

NB: The implementation will be vectorized, with input data of dimensions _(n, m)_ mapping to an output target of dimensions _(m, 1)_, where _n_ is the number of features and _m_ is the number of training examples.
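In the vectorized layout, each column of the input matrix is one training example, so a single matrix product processes the whole batch at once. A minimal sketch with made-up sizes (n = 2 features, m = 5 examples, a first layer of 3 neurons); the variable names are illustrative only.

```julia
n, m = 2, 5          # number of features, number of training examples
n_hidden = 3         # neurons in the first layer (illustrative choice)

X  = rand(n, m)              # input data: each column is one training example
W1 = randn(n_hidden, n)      # weights: (neurons in layer, inputs to layer)
b1 = zeros(n_hidden, 1)      # one bias per neuron, stored as a column

# Broadcasting (.+) adds b1 to every column, i.e. to every example
Z1 = W1 * X .+ b1
println(size(Z1))            # (neurons in layer, number of examples)
```

Under this column-per-example layout, a final layer with one neuron produces a (1, m) output for the batch, so a target stored as (m, 1) would need transposing (for example with `permutedims(y)`) before the two are compared.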

Image generated from NN-SVG

- Initialisation Of Model Parameters

These networks are typically built by stacking many layers of neurons. The number of layers and neurons is fixed up front, and the weights of these neurons are normally initialised with random values, which breaks the symmetry between neurons before optimisation begins (the biases can safely start at zero). This snippet randomly initialises the parameters for the given network dimensions:

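using StableRNGs  # provides StableRNG, a reproducible random number generator

"""
    initialise_model_weights(layer_dims, seed)

Function to initialise the parameters (weights and biases) of the desired network.
`layer_dims` holds the number of units in each layer, starting with the input layer.
"""
function initialise_model_weights(layer_dims, seed)
    # Create the RNG once so that every layer receives fresh random draws
    rng = StableRNG(seed)
    params = Dict{String, Matrix{Float64}}()

    ## Build a dictionary of initialised weights and bias units
    for l = 2:length(layer_dims)
        # He initialisation: normal samples scaled by sqrt(2 / fan_in)
        params[string("W_", (l-1))] = randn(rng, layer_dims[l], layer_dims[l-1]) * sqrt(2 / layer_dims[l-1])
        # Biases start at zero; the random weights already break symmetry
        params[string("b_", (l-1))] = zeros(layer_dims[l], 1)
    end

    return params
end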
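The symmetry-breaking argument can be checked directly. In this sketch (illustrative values, standard library only), a hidden layer whose weights are all equal produces the same output for every neuron; if every weight in the network starts equal, those neurons also receive identical gradient updates and never learn different features. `Random.MersenneTwister` is used here in place of `StableRNG` only to keep the example free of extra packages.

```julia
using Random

x = [0.3, -1.2]                     # one example with two features
fan_in = length(x)

# Every neuron starts with exactly the same weights
W_same = fill(0.5, 3, fan_in)

# Random He-style weights: normal draws scaled by sqrt(2 / fan_in)
W_rand = randn(MersenneTwister(1), 3, fan_in) * sqrt(2 / fan_in)

h_same = W_same * x                 # three identical neuron outputs
h_rand = W_rand * x                 # three distinct neuron outputs

println(h_same)
println(h_rand)
println(length(unique(h_same)), " distinct vs ", length(unique(h_rand)), " distinct")
```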
