Step-by-step tutorial on how to build a simple neural network from scratch

Introduction

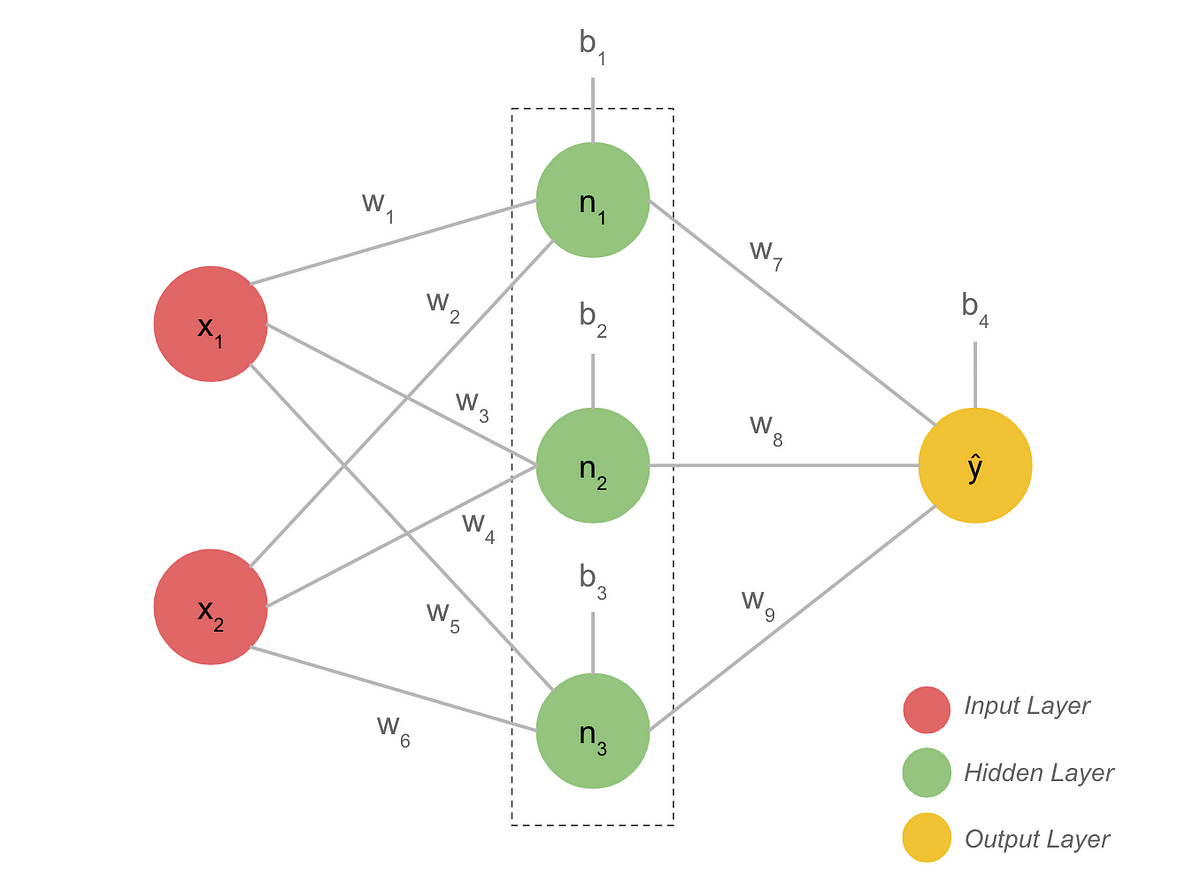

In this post, we will build our own neural network from scratch with one hidden layer and a sigmoid activation function. We will take a closer look at the derivatives and the chain rule to get a clear picture of how backpropagation is implemented. Our network will be able to solve a linear regression task with the same accuracy as an equivalent Keras model.

You can find the code for this project in this GitHub repository.

What is a Neural Network?

We are used to seeing neural networks as interconnected layers of neurons, with an input layer on the left, hidden layers in the middle, and an output layer on the right. That picture is easier to digest visually, but ultimately a neural network is just one big function that takes other functions as its input. Depending on the depth of the network, those inner functions can in turn take other functions as input, and so on. Those inner functions are in fact the “layers”.
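
To make the "one big function" view concrete, here is a minimal sketch in plain Python of a network with one hidden layer and a sigmoid activation, written as nested function calls. The function names (`hidden_layer`, `output_layer`, `network`), the parameter layout, and the parameter values are assumptions chosen for illustration. They are not taken from the project's repository, and the values are hand-picked rather than trained.

```python
import math


def sigmoid(z):
    """Logistic sigmoid activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def hidden_layer(x, weights, biases):
    """Inner function: one hidden layer, one sigmoid unit per (weight, bias) pair."""
    return [sigmoid(w * x + b) for w, b in zip(weights, biases)]


def output_layer(h, weights, bias):
    """Outer function: a linear combination of the hidden activations."""
    return sum(w * h_i for w, h_i in zip(weights, h)) + bias


def network(x, params):
    """The whole network is the composition output_layer(hidden_layer(x))."""
    h = hidden_layer(x, params["w1"], params["b1"])
    return output_layer(h, params["w2"], params["b2"])


# Hypothetical, hand-picked parameters (illustration only, not trained values).
params = {"w1": [0.5, -0.3], "b1": [0.1, 0.2], "w2": [1.0, 2.0], "b2": 0.5}

y = network(2.0, params)
print(y)
```

Adding another hidden layer would simply mean wrapping one more function around `hidden_layer`, which is why the chain rule becomes the natural tool for differentiating the network.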

#gradient-descent #neural-networks #backpropagation #keras