How to teach drone to see what is below and segment the object with high resolution

Introduction

Drone uses already gain popularity in the past few years, it provides high resolution images compare to satellite imagery_ with lower cost_, flexibility and low-flying altitude thus leading to increasing interest in the field or even it can _carry various sensor _such as magnetic sensor.

Drone (Unsplash)

Teaching drone to see is quite challenges due to bird’s eye view and most of pre-trained models are trained in normal images we see (point of view) in daily basis (ImageNet, PASCAL VOC, COCO). In this project I want to experiment how to train drone datasets, the aims are:

- Model that light weight (less parameters)

- High score (I hope so)

- Fast inference latency.

Datasets

_[2] The Semantic Drone Datasets focuses on semantic understanding of urban scenes for increasing the safety of autonomous drone flight and landing procedures. The imagery depicts more than 20 houses from nadir (bird’s eye) view acquired at an altitude of 5 to 30 meters above ground. A high resolution camera was used to acquire images at a size of 6000x4000px (24Mpx). The training set contains __400 publicly available images _and the test set is made up of 200 private images.

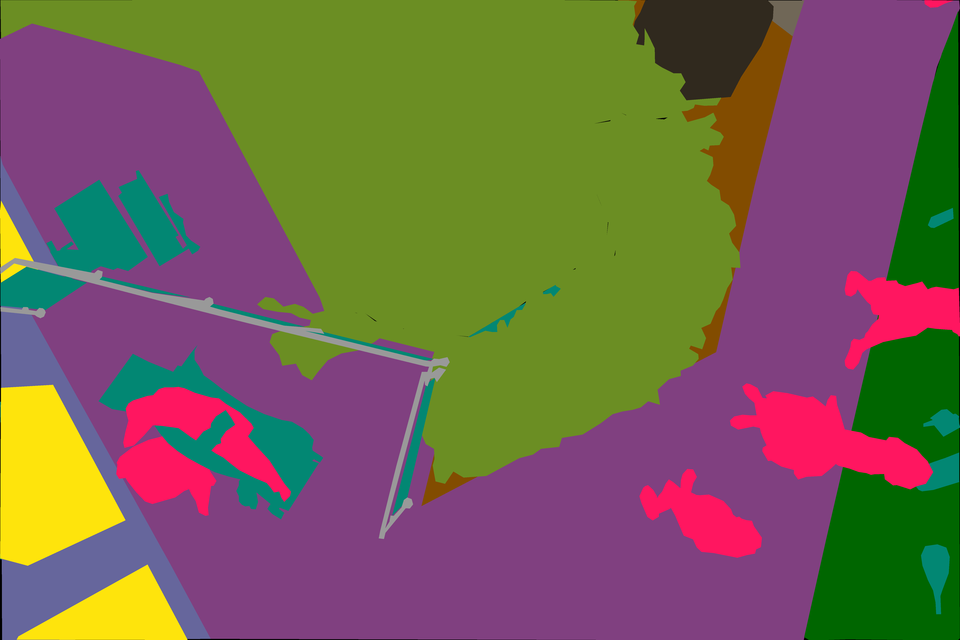

Sample Image from Datasets

The complexity of the datasets is limited to 20 classes (but actually it has 23 classes in its mask) as listed in the following: tree, grass, other vegetation, dirt, gravel, rocks, water, paved area, pool, person, dog, car, bicycle, roof, wall, fence, fence-pole, window, door, obstacle.

Methods

Preprocessing

I resize the image with the same ratio like the original input to 704 x 1056, I don’t crop the image into patches because a few reasons, the object is not too small, it doesn’t take much memory and save my training time. I split the datasets into tree parts training(306), validation(54) and test(40) sets and applied HorizontalFlip, VerticalFlip, GridDistortion, Random Brightness Contrast and add GaussNoise into training data, with mini-batch size 3.

Model Architecture

I use 2 model architectures, I purposely use light model as backbone like MobileNet and EfficientNet for computational efficiency.

- U-Net with MobileNet_V2 and EfficientNet-B3 as backbone

- **FPN **(Feature Pyramid Network) with EfficientNet-B3 backbone

I seeParmar’s paper_[3] _for the model choices (I already trained different models before and these choices seem work)

#computer-vision #remote-sensing #drones #deep-learning #deep learning