Introduction

Deep learning is currently one of the most interesting and promising areas of artificial intelligence (AI) and machine learning. Thanks to major advances in technology and algorithms in recent years, it has opened the door to a new era of AI applications.

In many of these applications, deep learning algorithms have performed on par with human experts and have sometimes surpassed them.

Python has become the go-to language for machine learning, and many of the most popular and powerful deep learning libraries and frameworks, such as TensorFlow, Keras, and PyTorch, offer Python as their primary interface.

In this series, we’ll perform Exploratory Data Analysis (EDA) and data preprocessing, and finally use Keras to build a deep learning model and evaluate it.

In this stage, we will build a deep neural-network model that we will train and then use to predict house prices.

Defining the Model

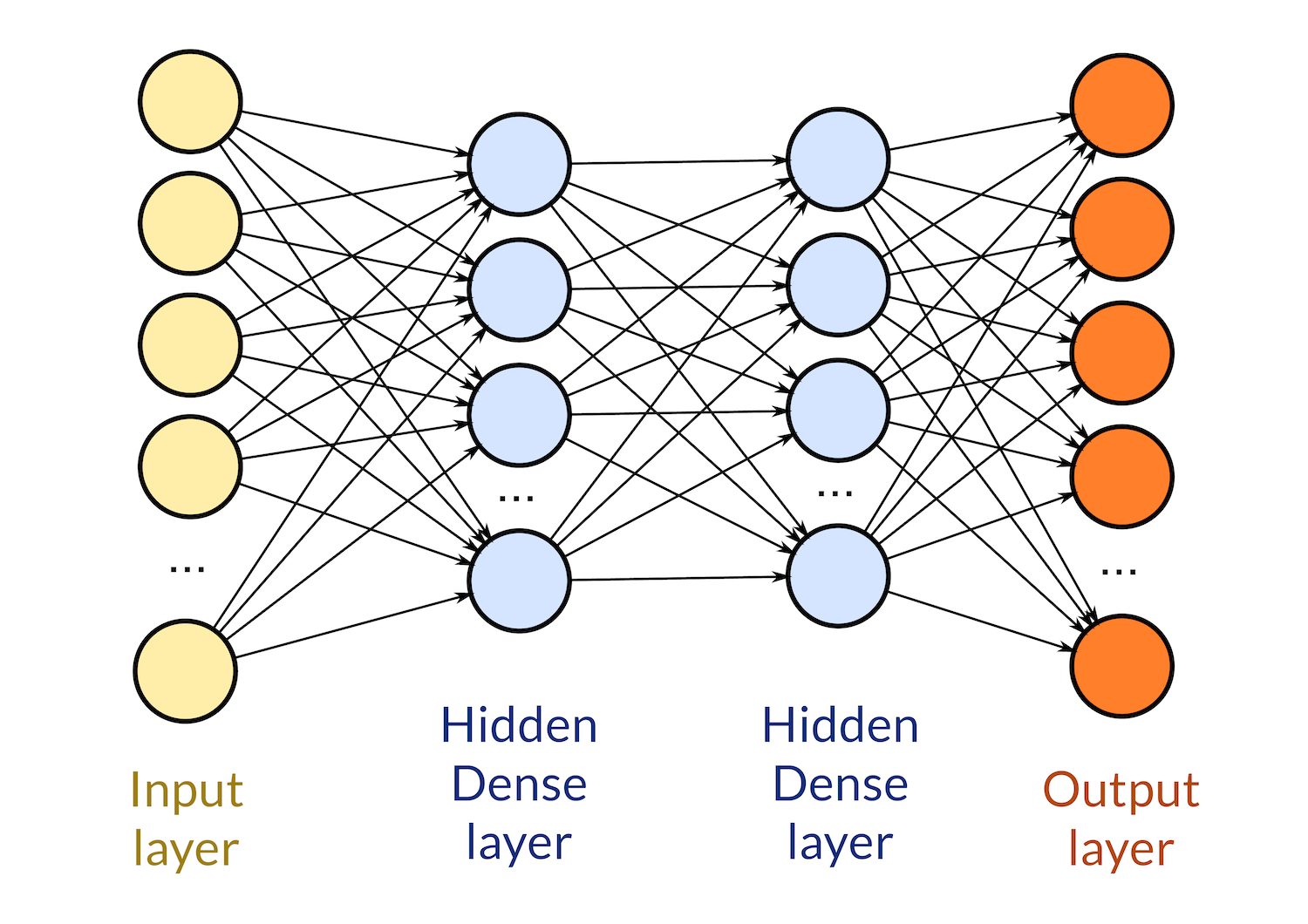

A deep learning neural network is just a neural network with many hidden layers.

Defining the model can be broken down into a few characteristics:

- Number of Layers

- Types of these Layers

- Number of units (neurons) in each Layer

- Activation Functions of each Layer

- Input and output size
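To make these characteristics concrete, here is a minimal sketch of how they might map onto a Keras `Sequential` model. The layer count, unit counts, dropout rate, and the `n_features` value are illustrative assumptions, not the configuration used later in this series.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical number of input columns; the real value depends on the
# preprocessed house-price dataset.
n_features = 12

model = keras.Sequential([
    keras.Input(shape=(n_features,)),      # input size
    layers.Dense(64, activation="relu"),   # layer type, units, activation
    layers.Dropout(0.2),                   # regularization layer
    layers.Dense(32, activation="relu"),   # second hidden layer
    layers.Dense(1, activation="linear"),  # output size: one predicted price
])

model.summary()
```

Each line of the list corresponds to one design decision from the bullets above, so changing the architecture mostly means editing this list.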

Deep Learning Layers

There are many types of layers for deep learning models. Convolutional and pooling layers are used in CNNs that classify images or do object detection, while recurrent layers are used in RNNs that are common in natural language processing and speech recognition.

We’ll be using Dense and Dropout layers. Dense layers are the most common type of layer. A Dense layer is just a regular, fully connected neural network layer: each of its neurons is connected to every neuron of the previous layer and of the next layer.

Each dense layer has an activation function that determines the output of its neurons based on the inputs and the weights of the synapses.

Dropout layers are regularization layers that randomly set a fraction of their input units to 0 during training. This helps reduce the chance of overfitting the neural network.
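As a rough illustration of what these two layer types compute, the NumPy sketch below implements a dense layer (weighted sum plus bias, followed by ReLU) and a dropout step. The helper names `dense` and `dropout`, the shapes, and the rate are assumptions made for this example. The dropout helper rescales the surviving values by `1 / (1 - rate)`, which is how Keras' `Dropout` layer behaves during training.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(x, W, b):
    """Fully connected layer: weighted sum plus bias, then ReLU."""
    return np.maximum(0.0, x @ W + b)

def dropout(x, rate, rng):
    """Zero out roughly `rate` of the units and rescale the rest."""
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)

x = rng.normal(size=(4, 3))   # 4 samples, 3 input features
W = rng.normal(size=(3, 5))   # weights connecting 3 inputs to 5 neurons
b = np.zeros(5)               # one bias per neuron

h = dense(x, W, b)               # activations, shape (4, 5)
h_train = dropout(h, 0.5, rng)   # training-time output with units dropped
```

At prediction time dropout is switched off and the activations pass through unchanged.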

Activation Functions

There are also many types of activation functions that can be applied to layers. Each of them transforms the weighted sum of a neuron’s inputs in a different way, which makes the network behave differently.

Very common choices are ReLU (Rectified Linear Unit), the sigmoid function, and the linear function. We’ll be mixing a couple of different functions.
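For reference, the three functions named above can be written in a few lines of NumPy. This is only a sketch to show their shapes; in Keras they are normally selected by name (for example `activation="relu"`) rather than implemented by hand.

```python
import numpy as np

def relu(z):
    """ReLU: negative values become 0, positive values pass through."""
    return np.maximum(0.0, z)

def sigmoid(z):
    """Sigmoid: squashes any real value into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def linear(z):
    """Linear: returns the input unchanged."""
    return z

z = np.array([-2.0, 0.0, 3.0])
relu_out = relu(z)        # negative entry is clipped to 0
sigmoid_out = sigmoid(z)  # middle entry is exactly 0.5
linear_out = linear(z)    # identical to z
```

ReLU is a frequent choice for hidden layers, while a linear output is a natural fit for regression targets such as prices, since it does not restrict the range of the prediction.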

Input and Output layers

In addition to hidden layers, models have an input layer and an output layer:
